Brad Hedlund recently posted an excellent blog on Network Virtualization. Network Virtualization is the label used by Brad’s employer VMware/Nicira for their implementation of SDN. Brad’s article does a great job of outlining the need for changes in networking in order to support current and evolving application deployment models. He also correctly points out that networking has lagged behind the rest of the data center as technical and operational advancements have been made.

Network configuration today is laughably archaic when compared to storage, compute and even facilities. It is still the domain of CLI wizards hacking away on keyboards to configure individual devices. VMware brought advancements like resource utilization based automatic workload migration to the compute environment. In order to support this behavior on the network an admin must ensure the appropriate configuration is manually defined on each port that workload may access and every port connecting the two. This is time consuming, costly and error prone. Brad is right, this is broken.

Brad also correctly points out that network speeds, feeds and packet delivery are adequately evolving and that the friction lies in configuration, policy and service delivery. These essential network components are still far too static to keep pace with application deployments. The network needs to evolve, and rapidly, in order to catch up with the rest of the data center.

Brad and I do not differ on the problem(s), or better stated: we do not differ on the drivers for change. We do however differ on the solution. Let me preface this by noting that Brad and I both work for HW/SW vendors with differing solutions to the problem and differing visions of the future. Feel free to write the rest of this off as mindless drivel or vendor Kool-Aid, I ain’t gonna hate you for it.

Brad makes the case that Network Virtualization is equivalent to server virtualization, and from this simple assumption he poses it as the solution to current network problems.

Let’s start at the top: don’t be fooled by emphatic statements such as Brad’s stating that network virtualization is analogous to server virtualization. It is not; this is an apples and oranges discussion, with network virtualization being the orange where you must peel the rind to get to the fruit. Don’t take my word for it; one of Brad’s colleagues, Scott Lowe, a man much smarter than I, says it best:

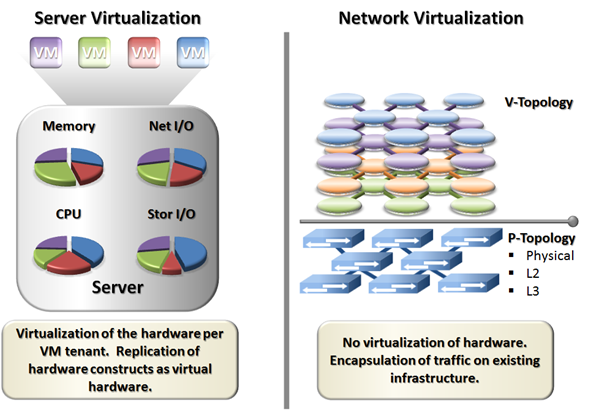

The issue is that these two concepts are implemented in a very different fashion. Where server virtualization provides full visibility and partitioning of the underlying hardware, network virtualization simply provides a packet encapsulation technique for frames on the wire. The diagram below better illustrates our two fruits: apples and oranges.

As the diagram illustrates we are not working with equivalent approaches. Network virtualization would require partitioning of switch CPU, TCAM, ASIC forwarding, bandwidth etc. to be a true apples-to-apples comparison. Instead it provides a simple wrapper to encapsulate traffic on the underlying Layer 3 infrastructure. These are two very different virtualization approaches.

Brad makes his next giant leap in the “What is the Network” section. Here he makes the assumption that the network consists of only virtual workloads “The “network” we want to virtualize is the complete L2-L7 services viewed by the virtual machines” and the rest of his blog focuses there. This is fine for those data center environments that are 100% virtualized including servers, services and WAN connectivity and use server virtualization for all of those purposes. Those environments must also lack PaaS and SaaS systems that aren’t built on virtual servers as those are also non-applicable to the remaining discussion. So anyone in those environments described will benefit from the discussion, anyone <crickets>.

So Brad and, presumably, VMware/Nicira (since network virtualization is their term) define the goal as taking “all of the network services, features, and configuration necessary to provision the application’s virtual network (VLANs, VRFs, Firewall rules, Load Balancer pools & VIPs, IPAM, Routing, isolation, multi-tenancy, etc.) – take all of those features, decouple it from the physical network, and move it into a virtualization software layer for the express purpose of automation.” So if you’re looking to build 100% virtualized server environments with no plans to advance up the stack into PaaS, etc. it seems you have found your Huckleberry.

What we really need is not a virtualized network overlay running on top of an L3 infrastructure with no communication or correlation between the two. What we really need is something another guy much smarter than me (Greg Ferro) described:

Abstraction, independence and isolation, that’s the key to moving the network forward. This is not provided by network virtualization. Network virtualization is a coat of paint on the existing building. Furthermore, that coat of paint is applied without stripping, priming, or removing that floral wallpaper your grandmother loved. The diagram below is how I think of it.

With a network virtualization solution you’re placing your applications on a house of cards built on a non-isolated infrastructure of legacy design and thinking. Without modifying the underlying infrastructure, network virtualization solutions are only as good as the original foundation. Of course you could replace the data center network with a non-blocking fabric and apply QoS consistently across that underlying fabric (most likely manually) as Brad Hedlund suggests below.

If this is the route you take, to rebuild the foundation before applying network virtualization paint, is network virtualization still the color you want? If a refresh and reconfigure is required anyway, is this the best method for doing so?

The network has become complex and unmanageable due to things far older than VMware and server virtualization. We’ve clung to device centric CLI configuration and the realm of keyboard wizards. Furthermore we’ve bastardized originally abstracted network constructs such as VLAN, VRF, addressing, routing, and security, tying them together and creating a Frankenstein of a data center network. Are we surprised the villagers are coming with torches and pitchforks?

So overall I agree with Brad, the network needs to be fixed. We just differ on the solution, I’d like to see more than a coat of paint. Put lipstick on a pig and all you get is a pretty pig.

Hello Joe

don’t want to turn this post into a my kool-aid vs yours discussion.. useless. Here’s a few things that i suspect won’t change your position but might have people thinking a bit further.

The “service at the edge running decoupled from a core transport” has been running for close to 15 years very reliably in all carrier networks and ISPs around the world in the form of MPLS. This is the same model applied to the DC fabric with a hierarchy of network elements and a distributed service gateway function providing the decoupling.

While you take a shortcut in your apple to oranges comparison (the networking hardware not being in the host it is kinda hard to partition…) you do get the visibility and isolation plus the abstraction of a L2 switch. Furthermore, I would argue that technologies like VRF or VPC today provide no hardware partitioning / visibility / abstraction the way you are describing it despite sitting on the physical network element… and we go our merry way building the largest networks in the world with these technologies.

Same comments about the need for QoS visibility…. as needed as TE.

Your deck of card analogy is correct about the need for laying a solid foundation in order to deliver services but it is incorrect to conclude that this cannot be achieved from the edge as a decoupled service… unless you also claim MPLS is a failed attempt at network virtualization.

Cordially. BG.

B.,

First off, thanks for reading and responding, I always appreciate the discussion and more often than not I learn something new.

To start off I don’t see MPLS as a good analogy to network virtualization. MPLS is coupled to hardware, one of the main reasons it’s not being utilized heavily as a data center overlay technology.

I definitely didn’t take any short cuts on the analogy and I’m not arguing the difficulty of providing true network virtualization analogous to server virtualization. I am merely stating that they are not analogous because, as you state, network switching would need to reside 100% in the hypervisor or the hypervisor would have to exist on the switches tied to the hardware. Your point validates mine.

I don’t argue that vPC and VRF do not partition hardware; they are also not analogous to server virtualization and are not enough for emerging requirements. VDCs on Cisco devices are the closest analogy but they suffer from scale limits, typically based on switch CPU cores. As I said the technologies in use today need to be changed.

QoS and visibility are not provided end-to-end with network virtualization. The underlying switch infrastructure must be configured to provide this service end-to-end; this is not managed at the virtual edge in any network virtualization technique I’m currently familiar with. Visibility is also not provided. For instance, can NVP provide link utilization information? No. Can an Arista switch provide visibility into multicast replication problems in an NVP overlay? No.

Nowhere in this post do I pose that it is not possible to build a solid foundation to support network virtualization. What I do pose is:

A) Serious considerations must go into the L3 and below transport for network virtualization success. This is contrary to NVP marketeering.

B) If a revamp of the underlying L3 is required anyway, is a software overlay really the right goal?

Thanks again for your thoughts,

Joe

Hi Joe,

Network Virtualization works on any IP network, L2 or L3, new or existing. Irrespective of network virtualization, or any other solution for that matter, building a solid foundation is a given. As a software based solution, a customer can decide to deploy network virtualization on their current infrastructure investment, or build new, with any network vendor, Cisco Nexus or otherwise.

Given that, are you taking the position that a customer’s significant investment in Cisco Nexus/UCS — the “existing building” — is not a solid foundation, and instead is a “house of cards”? Based on what you’ve written here, that’s the obvious conclusion.

Cheers,

Brad

Brad,

Thanks for reading and the comment. I agree on the flexibility provided by a software overlay solution as far as deployment options go. I do however differ with the words you put into my mouth in the second paragraph.

My stance is twofold:

A) If you’re looking at network virtualization something must be missing from what your current network offers.

B) If you just paint over the problems with a fresh coat of network virtualization you’ve done nothing more than hide the issue.

Thinking through your comment I will state that the current network paradigm offered by Cisco or any other switching vendor is not sufficient to move the industry forward. As stated in the blog, it’s archaic at best, with static configuration and device centric policy. Arista is ahead of the game here with programmability, while Cisco is looking to catch up with various components of Cisco One. These provide tools to solve portions of the problem.

Again it all comes back to the foundation needing to be built solidly for the end-goal. Layering an encapsulation on top and calling it virtualization doesn’t solve the problems, it just obfuscates them. Customers need to ask themselves what they really want out of the network and what’s required to get there.

Joe

Joe,

You say that Network Virtualization “doesn’t solve the problems, just obfuscates them”, while completely glossing over the specific “problems” you’re referring to. Let’s drill down into some specifics.

For the sake of discussion, problem A) is automating the frequent network configuration tasks to provision workloads (VLANs, VRFs, Firewalls, Load balancing, etc.). And, problem B) is automating the relatively infrequent provisioning of network capacity (physical switches, physical ports, bandwidth, etc.).

For problem A, network virtualization doesn’t “obfuscate”, that’s wholly incorrect. Network Virtualization decouples problem A from the physical network, and moves it into a virtualization software layer where it can be automated in lock step with the workload provisioning (virtual machines).

With problem A decoupled from the physical network, problem B can be solved separately from A, and in a number of different ways using any existing equipment, with a more simplified, infrequently changing configuration. For example, existing Cisco Nexus or Arista switches can automate the relatively infrequent deployment of simplified switch configurations using software tools like Puppet or Chef.

It sounds like you’re trying to solve both problem A and problem B together with custom hardware, keeping them tightly coupled as they have been for the last 30 years. That’s not moving the industry forward. That’s keeping it stuck in the past.

Cheers,

Brad

Brad,

I’m enjoying the discussion as always, so thanks for engaging. Let me start with the fact that I’ve unfortunately not offered any solutions as you suggest in your last paragraph, so I’m not sure where you’re coming from with that broad assumption. I’m not typically a fan of identifying issues without offering a solution but I don’t see an end-game solution on the market today.

As you state I did “gloss over” specific problems, because they were already stated in the blog you’re commenting on. That being said, since we’ve come full circle anyway I will expand.

As a baseline we agree on the two current problems you mention:

A) Automating workload provisioning and change behavior.

B) Automating physical network device provisioning and configuration.

After reading through your comment I do agree that my use of the word “obfuscate” was too broadly applied. As you state Network Virtualization does solve portions of problem A:

- Automation of workload placement on existing subnets.
- Possible integration of network services (FW, Load-Balancer, etc.)
- L2/L3 communication across an underlying L3 infrastructure on available links that are not otherwise blocked by STP.

These are provided to the virtual network, and specifically to the legacy paradigm of App/OS/Hypervisor which is a wrapper for the physical server application deployment model.

You also correctly imply that Network Virtualization does not assist with Problem B: deployment and configuration of the physical switching infrastructure required to move packets and frames. Here, however, you minimize the requirements on these devices. QoS is one example that plays a big part in the network and must be configured on a device-by-device basis, and must match in order to provide expected results.
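To make the "must match" requirement concrete, here is a minimal sketch of a consistency check across devices. It assumes each device's QoS policy has already been collected into a simple mapping of DSCP value to queue name; the device names, queue names, and the `find_qos_mismatches` helper are hypothetical and not part of any vendor tool.

```python
def find_qos_mismatches(device_maps):
    """Return {dscp: {device: queue}} for every DSCP value that is not
    mapped to the same queue on all devices (a missing entry counts as None)."""
    all_dscp = set()
    for dscp_to_queue in device_maps.values():
        all_dscp.update(dscp_to_queue)

    mismatches = {}
    for dscp in sorted(all_dscp):
        per_device = {dev: m.get(dscp) for dev, m in device_maps.items()}
        if len(set(per_device.values())) > 1:
            mismatches[dscp] = per_device
    return mismatches


# Hypothetical per-device policies; DSCP 46 is Expedited Forwarding.
fabric = {
    "leaf1": {46: "priority", 0: "default"},
    "leaf2": {46: "priority", 0: "default"},
    "spine1": {46: "default", 0: "default"},  # EF lands in the wrong queue here
}

for dscp, per_device in find_qos_mismatches(fabric).items():
    print(f"DSCP {dscp} is inconsistent: {per_device}")
```

A single device with a differing map is enough to break the end-to-end behavior, which is why the check compares every device rather than sampling.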

QoS will only grow in importance as more disparate workloads are placed into the virtual environments, voice, video, etc. This has the potential to cause complications as more and more applications are spun-up without the need for network infrastructure team involvement, or better put without network infrastructure team visibility.

Puppet and Chef provisioning can definitely assist with some of this but adds a 3rd layer of management and control: physical network, virtual network, and automation tool. This becomes very cumbersome to not only manage but also to troubleshoot.

In my experience when an application fails or experiences degraded performance the finger pointing starts. Typically the first finger points at the network and the network team must prove that they are not the problem before real troubleshooting can begin. In a virtualized network environment there is a virtual network operating with a “ships in the night” approach invisible to the physical network. This places two points of troubleshooting and monitoring in place to show that the issues are not network related.

This brings us back to obfuscation. Network virtualization, or software based encapsulation, does not provide visibility into the physical network, or vice versa. STP, Routing and congestion issues are not visible to the virtual network, nor are issues with the virtual topology visible to the physical network. Keeping with the virtualization analogy this is a virtual machine running in VMware Player, not a hypervisor model.

Hopefully this clarifies my points for you,

Joe

Hi Joe,

Excellent discussion. I’m glad you brought up QoS and visibility.

With QoS you have two macro trends at work.

#1) Data center fabrics are beginning to follow Moore’s Law in terms of line rate port density. In a nutshell, it becomes more economical every year to build large fabrics with non-blocking or low oversubscription (3:1).

#2) The edge software switch gets a lot more QoS capable with every passing year. For example, edge vswitches can already classify, shape, mark, and enforce min/max bandwidth, per virtual port. Being at the edge software layer, the features and granularity of these policies follow the workloads, and will only get more capable because after all it’s software.

Combined, there is diminishing contention in the physical fabric, while granular QoS is enforced at the edge software switch. The physical fabric too may have a more general and infrequently changing QoS policy configured to match on the markings applied at the edge, which can be made consistent with a configuration management tool supplied by the fabric vendor, or something like Puppet or Chef.
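As a rough illustration of the oversubscription figure in trend #1, the ratio is simply server-facing bandwidth divided by fabric-facing bandwidth on a switch. The port counts below (48 x 10G down, 4 x 40G up) are an assumed example, not a reference to any specific product.

```python
def oversubscription_ratio(downlink_gbps, uplink_gbps):
    """Ratio of server-facing bandwidth to fabric-facing bandwidth.

    1.0 means non-blocking; anything above 1.0 is oversubscribed.
    """
    if uplink_gbps <= 0:
        raise ValueError("uplink bandwidth must be positive")
    return downlink_gbps / uplink_gbps


# Assumed leaf switch: 48 x 10G server ports, 4 x 40G uplinks.
ratio = oversubscription_ratio(48 * 10, 4 * 40)
print(f"{ratio:g}:1")  # prints 3:1
```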

Moving on, Network Virtualization provides more visibility than ever before, not less. This is one area I’m particularly excited about. It’s all about data, and software. The more data you have, synthesized by software, the better visibility you have. Every port on the virtual network is backed by edge software on x86, and because of that there’s a tremendous amount of data you can generate, store, and correlate. Traffic volumes, physical network health, port counters, errors, per application profiles, just to name a few.

Taken a step further, the same software can gather and correlate data from the physical fabric. For example, if the edge software reports traffic issues between a pair of hypervisors or virtual machines, let’s query the physical fabric on what links we should be looking at. The possibilities are endless, because ultimately it’s going to be platform independent software and data that will bring a sustainable clarity to operational visibility, not a proprietary solution with specific hardware requirements.

Cheers,

Brad

Brad,

Thanks for the continued discussion and the latest comment. I agree that as the virtual network progresses and gains more capabilities it becomes more of a viable option. This is especially true, as you point out, if you keep your network bandwidth ahead of the curve by refreshing frequently enough with non-blocking fabrics. Keeping that bandwidth ahead of the curve may be easier if you’re pushing more overhead onto the CPU, helping to make the server the bottleneck moving forward.

Joe

> STP, Routing and congestion issues are not visible to the virtual network, nor are issues with the virtual topology visible to the physical network.

Physical network that supports NV does not *need* STP. Remember, STP is there to support extended L2 segments, which we don’t need anymore. Just this one thing (getting rid of STP) does several good things to our network: 1) makes all links forwarding (nothing is blocked – thus we increase available bandwidth and decrease need for QoS in the fabric); 2) allows us to ditch LAG/MLAG, which makes network more stable and easier to troubleshoot; and 3) the ditching of the STP further makes network less fragile.

Now, to the visibility of physical network congestion to the virtual network: NV can monitor forwarding performance of its tunnel PDUs, and detect congestion in underlying fabric. Granted, it may not be able to tell *where it is*, but consider this: what if you’re running your NV over WAN across multiple administrative domains? How much visibility do you have into congestion in service providers’ networks? I do see value in the ability to see where congestion is, but if that capability is only applicable to half of common use cases, I start to doubt its usefulness and go looking for next best alternative (as above – monitor PDU forwarding performance, as an example).

> when an application fails or experiences degraded performance the finger pointing starts

There is no unicorn magic in NV functionality – to point fingers away from physical network, it is mostly sufficient to demonstrate that physical network can successfully transfer packets between NV tunnel end points, which are um, just IP addresses attached to the IP forwarding fabric. You can use all your common troubleshooting tools to establish whether these IPs can talk to each other. BUM replication? Sure, check if TEP IPs can talk to the replication node’s IP. All good? Off to the NV team, with a fair level of confidence that the physical network isn’t at fault. This, in fact, is very similar to how service provider MPLS networks are troubleshooted (is this a real word?) today, and have been for many, many years. Seriously. How much visibility a VRF or a VPLS instance has into lower layers? Exactly zilch, because it was designed so; that’s what “V” in these acronyms stands for. 🙂 Does it stop SPs from offering them? Doesn’t look like it.
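The check described above (can every tunnel end point reach every other one?) can be sketched as a simple full-mesh test. This is only an illustration: `probe(src, dst)` is a hypothetical callable standing in for whatever tool actually runs the test from the source TEP (ping, traceroute, a vendor API), and the addresses are documentation-range examples.

```python
from itertools import permutations


def unreachable_tep_pairs(tep_ips, probe):
    """Return every ordered (src, dst) TEP pair for which probe() reports failure.

    probe(src, dst) must return True when dst is reachable from src.
    """
    return [(src, dst) for src, dst in permutations(tep_ips, 2)
            if not probe(src, dst)]


# Stand-in probe for demonstration: one direction of one pair is broken.
broken = {("192.0.2.1", "192.0.2.3")}


def fake_probe(src, dst):
    return (src, dst) not in broken


teps = ["192.0.2.1", "192.0.2.2", "192.0.2.3"]
failures = unreachable_tep_pairs(teps, fake_probe)
print(failures or "all TEPs reachable; hand off to the NV team")
```

An empty result supports the "physical network isn't at fault" conclusion; a non-empty one narrows the search to the listed end points.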

Don’t worry, mate. She’ll be right. 😉

Dmitri,

Thanks for taking the time to read and leave a comment. I think it’s dangerous to assume that adding network virtualization to a data center network means that STP will be turned off. Encapsulated traffic will most commonly be one of many traffic types running on a network, some quite possibly requiring Layer 2 communication. There is also a comfort level that will need to be adopted for network operators to trust an STP free L3 only topology. Not saying it’s a technical hurdle, instead an understanding and testing hurdle.

For the remainder of your comment I don’t necessarily disagree that it is achievable to build, maintain and troubleshoot an NV network. Instead I’d argue that it is adding layers of complexity to doing so.

SP WANs and data center networks are not equivalent and the comparison is misleading. An SP network is the core of the SP’s business; a data center network is a transport for the applications that are the core. The budget, staff, monitoring/tools available to one are not available to the other.

I definitely appreciate your comments and expertise, thanks for the perspective!

Joe

> it’s dangerous to assume that adding network virtualization to a data center network means that STP will be turned off

I’m not saying it will be; just pointing out that it could lead to it, as VMs can do their L2 talking over NV VNs, rather than over VLANs.

> a comfort level that will need to be adopted for network operators to trust an STP free L3 only topology

I thought we already implicitly trusted it by using Internet and L3 VPNs.

> I’d argue that it is adding layers of complexity to doing so

If we consider that “S” in all “aaS” terms refers to “Services”, then I think I can argue that everybody providing anything “as a Service” is a Service Provider. And traditionally, at least in the data comms area, Service Provider infrastructure is comprised entirely of layers – in a reasonably simple case: fibre plant, WDM systems on top of it, TDM or packet on top of lambdas, MPLS packet on top of TDM or p2p Ethernet, and, finally, customer-facing IP or L2 Ethernet services on top of MPLS (for example). All layers rely on the lower layer for “service”, and in most cases have no idea what links/boxes comprise lower service, instead relying on OAM at the UNI, through which lower layers communicate the state of their service.

This, I believe, is what’s currently missing from the existing NV solutions: OAM for the “underlay” services. I hope I’m not the only one realising this, and intent on helping promote this understanding and getting something done about it.

> SP WANs and data center networks are not equivalent and the comparison is misleading

I wasn’t comparing; I was pointing out what’s in my mind a totally valid use case – think of a hybrid cloud deployment, which would consist of network services inside a DC, stitched together with WAN services to get connectivity to another DC or DCs, along with the DC networks in those other DCs.

> An SP network is the core of the SPs business

…and DC network of an aaS provider (be it a public provider or an Enterprise IT department) is one of the core components of their business, because without it there won’t be an “S” in their “aaS”.

> The budget, staff, monitoring/tools available to one are not available to the other

One can’t make an omelette without breaking some eggs. Sounds like an opportunity for those who plan to kick some aaS, no? 😉

Dmitri,

Another great set of points, thanks for taking the time to post them. I absolutely agree that if an L3 overlay is used it should be used for all workloads allowing STP to be turned off and freeing bandwidth. I hope that this is both understood and positioned by the Network Virtualization vendors. Just dumping an STT or VxLAN over a TRILL or other based fabric leads to double-encapsulation and overhead. As you point out L3 is not new and we’ve been trying to push it to the edge for years to alleviate STP.
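To put a rough number on the double-encapsulation overhead, the sketch below compares how much of each frame on the wire is the original payload. The header sizes are assumptions for the simplest case: VXLAN over untagged IPv4 (outer Ethernet 14 + IPv4 20 + UDP 8 + VXLAN 8 bytes) and TRILL without a VLAN tag or options (outer Ethernet 14 + TRILL header 6 bytes). Real deployments may differ.

```python
# Assumed header sizes in bytes (untagged, IPv4, no options).
VXLAN_OVERHEAD = 14 + 20 + 8 + 8  # outer Ethernet + outer IPv4 + UDP + VXLAN
TRILL_OVERHEAD = 14 + 6           # outer Ethernet + TRILL header


def wire_efficiency(inner_frame_bytes, *overheads):
    """Fraction of the bytes on the wire that belong to the original frame."""
    total = inner_frame_bytes + sum(overheads)
    return inner_frame_bytes / total


inner = 1514  # a full-size Ethernet frame: 1500-byte payload + 14-byte header

single = wire_efficiency(inner, VXLAN_OVERHEAD)
double = wire_efficiency(inner, VXLAN_OVERHEAD, TRILL_OVERHEAD)
print(f"VXLAN only:    {single:.1%}")
print(f"VXLAN + TRILL: {double:.1%}")
```

The penalty is proportionally larger for small frames, since the added headers are fixed-size regardless of payload.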

I would agree that everybody providing IaaS is a Service Provider; my point was more to what that service is. A traditional SP is providing the network as their service and therefore can sink more cost and expertise into it. A typical data center is providing IaaS, PaaS, or SaaS and therefore has to spread the load across infrastructure and software.

I’m admittedly ignorant on OAM, is there a link you could point me to?

As far as your last point I agree 100%. Embrace change or get out of the way!

Joe

Hi Joe,

Can’t reply to your post below, as the comments platform ran out of levels 🙂

Re: OAM, you could start here: http://en.wikipedia.org/wiki/Operations,_administration_and_management (however it only lists links to Ethernet OAM, and doesn’t say much about SONET/SDH one). In any case, should be a good start.

Hey guys,

Very interesting arguments from both sides! I agree that layering has been at the very root of nearly every aspect of the telecommunication and connectivity world. I think the difference between layering mechanisms comes down to how much relative intelligence you are layering on top of. This is where the approaches differ.

Since we have examples of WAN technologies here, let’s continue down that path 🙂 Let’s consider MPLS and MPLSoGRE. It is pretty obvious that MPLSoGRE comes to solve a problem of extending label switching across IP core without introducing MPLS technology on every intermediate node. Does it solve the problem? Sure does. Does it force MPLS to know that it runs on top of GRE tunnel? No, it doesn’t. Can I now run this on top of any IP capable router in the core? Yes, you can… yet, MPLSoGRE is not as popular as “native” MPLS… why!? Why wouldn’t I just design my carrier MPLS networks like that? Why can’t I take fast 40G/100G IP routers, place them as intermediate nodes and build large MPLS carrier networks leveraging MPLSoGRE? I bet that would be cheaper than buying MPLS capable routers on every hop end-to-end…

The reason is, by inserting intermediate layer of GRE (and IP), I am influencing the service delivery attributes of MPLS technology, even though I can still do all the bells and whistles of it at the edges of GRE tunnel. In simple words, I am diminishing the advantages of leveraging MPLS technology when I do not allow its characteristics to be enforced on a hop-by-hop basis.

People want intelligent communication stacks, because such stacks interfere less with the characteristics of higher layer communication protocols, which yields maximum advantage from using those protocols in the first place. For example, how would one leverage MPLS TE, which relies on hop-by-hop intelligence, while operating on top of GRE tunnel, which makes that hop-by-hop intelligence inaccessible?

Data Center fabric is a tightly coupled environment, where end-to-end performance, visibility and troubleshooting play a critically important role in successful application delivery. If it is important in WAN, it is 10x more important in DC! It is where the hop-by-hop intelligence plays a crucial role. Hiding that intelligence with network virtualization, the way it’s being pushed right now to the market, is putting one’s environment at risk, which frankly outweighs the advantages of simpler provisioning model. Doing things faster does not necessarily mean doing things better…

Network virtualization could be taken to the next level to address the critical needs of the Data Center environment, but I just don’t see that direction being taken at this moment…

Would love to hear your thoughts 🙂

David

@DavidKlebanov

David,

Thanks for taking the time to stop by and leave a comment. Excellent points all around. I think you and I are on the same page with the considerations required before implementing NV as it’s pitched on slides today.

Joe

> MPLSoGRE is not as popular as “native” MPLS… why!? Why wouldn’t I just design my carrier MPLS networks like that?

Because you don’t have to in most cases, where direct fibre, SONET/SDH/OTN or Ethernet links are available, and in operators’ case they mostly are everywhere. P2P Ethernet services are quite ubiquitous, too, and can be had in Metro, interstate or internationally. MPLSoGRE would be used for P-to-P or P-to-PE links, where direct L2 connectivity isn’t available.

> Why can’t I take fast 40G/100G IP routers, place them as intermediate nodes and build large MPLS carrier networks leveraging MPLSoGRE? I bet that would be cheaper than buying MPLS capable routers on every hop end-to-end…

All carrier-grade high speed platforms support MPLS, which is built into their ASICs, so the cost saving is unlikely. Also, if you’re suggesting replacing MPLS P routers with IP routers, then yes, you will lose TE and FRR.

> how would one leverage MPLS TE, which relies on hop-by-hop intelligence, while operating on top of GRE tunnel

The same way one leverages TE on top of P2P Ethernet or SONET/SDH circuits, which “under covers” consist of multiple sections and paths. GRE tunnel is a single hop, and you use it in your TE as such. However, if you replace your MPLS P routers with IP routers and run GRE tunnels between PEs, *then* you won’t have TE and FRR. But, as I explained above, it would not make much sense.

> I am diminishing the advantages of leveraging MPLS technology when I do not allow its characteristics to be enforced on a hop-by-hop basis

It is probably worth pointing out that MPLS *end-user* services, such as VPRN and VPLS, do not have visibility or access to the hop-by-hop MPLS functions. They *function* exactly like NV – local forwarding instances with end to end pseudowires (“tunnels” in NV) that run on top of MPLS (“IP” in NV) core (which may or may not leverage TE – irrelevant to the end-user services). The pseudowires do *benefit* from TE/FRR functions to improve end-user experience (pretty much only “availability” in most cases), but none of their visible functionality *depends* on these functions being present. A corner case perhaps would be when an operator built a separate set of TE LSPs for different classes of service, and implements selective forwarding. I’m not sure how common that deployment scenario is, as it smells of unnecessary complexity.

Now, this is where Plexxi’s solution brings something substantial to the table: it delivers enhanced TE without MPLS in your DC network, so you’re in even better shape than TE tricked-out SP’s MPLS networks (yes, I realise we’re starting to talk forklifts here). However, it is a distinct possibility that other Vendors’ SDN efforts may be able to create similar value here, too; so probably in some cases forklift upgrades won’t be necessary.

> how would one leverage MPLS TE, which relies on hop-by-hop intelligence, while operating on top of GRE tunnel, which makes that hop-by-hop intelligence inaccessible?

Hopefully the above answers this question.

> It is where the hop-by-hop intelligence plays a crucial role.

I would appreciate it if you could elaborate on that role, as I’m struggling to figure it out. In my simple mind, as long as the IP fabric reliably forwards my IP packets, I’m golden; and if it stops doing so, I can figure out what’s wrong using my existing troubleshooting tools, as I described in one of my other replies to this blog.

David,

> Data Center fabric is a tightly coupled environment

That’s the status quo, and the very reason we are in this network provisioning mess right now. The solution to the server provisioning mess was *decoupling* (virtual server from physical), and the same solution is moving forward for the network with Network Virtualization — decoupling (virtual network from physical).

> If it is important in WAN, it is 10x more important in DC! It is where the hop-by-hop intelligence plays a crucial role

I’m struggling to make sense of this. The DC fabric is a very different environment than WAN. The DC fabric is a flat, equal cost multi-path structured topology with low/no oversubscription where bandwidth is cheap and plentiful. WAN topologies on the other hand are more arbitrary, asymmetrical, oversubscribed, and bandwidth is more scarce and expensive. How do you make the leap that “hop-by-hop intelligence” is 10x more important in the DC than the WAN?
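As a rough illustration of why an equal-cost multi-path fabric needs little per-hop policy, here is a minimal Python sketch of hash-based ECMP next-hop selection. The spine names, the example flow and the choice of CRC32 as the hash are all assumptions made for the sketch; actual switches use their own hash functions and field selections.

```python
import zlib

# Hypothetical set of equal-cost uplinks from one leaf switch.
SPINES = ["spine1", "spine2", "spine3", "spine4"]


def ecmp_next_hop(flow, next_hops):
    """Pick one equal-cost next hop for a flow by hashing its 5-tuple.

    flow is (src_ip, dst_ip, protocol, src_port, dst_port). The same flow
    always hashes to the same next hop, so packets in a flow stay in order.
    """
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    # Made-up flows that differ only in source port.
    for src_port in range(49152, 49160):
        flow = ("10.0.1.5", "10.0.2.9", 6, src_port, 443)
        print(src_port, ecmp_next_hop(flow, SPINES))
```

Each hop makes the same simple, stateless decision; no per-tenant or per-application configuration is needed in the fabric for the traffic to spread across the available paths.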

As Dmitri asked, can you provide some examples of “hop-by-hop intelligence” that would be required in the physical DC fabric?

Cheers,

Brad

Hi guys,

I have stressed this point before: I think that conditioning people to think that server virtualization is analogous to network virtualization is just wrong. Server virtualization platforms leverage hypervisors, which in turn schedule real hardware resources for consumption by the virtual machines. In contrast, network virtualization, as presented today, creates a totally abstracted container with its own control, data and management planes. The motivation behind creating those containers (overlays) is obvious; however, in my view, such an approach only slows down the bleeding, providing no more than temporary relief. The negative customer experiences I often hear about from testing such solutions only reinforce my thinking that the provisioning simplicity offered by this model is not enough to justify the day-2 operational challenges. Having said that, not every deployment is bound to fail. To your earlier point, DC bandwidth is becoming cheaper and the Clos architecture provides plenty of it; however, it’s not just about bandwidth.

Today’s network virtualization technology tries to pull us back to the very basic primitives of network communications. If I can send a successful probe/ping between the tunnel endpoints, I am golden… not my problem anymore. If I can snoop packets at the host and trace TCP SYN and ACK counters, my troubleshooting is done. Let’s learn from past experience and not turn a new page each time around…

I would also encourage server/virtualization admins, who would most likely be the primary consumers of network virtualization technology, to get more intimately acquainted with the principles of networking. Don’t just take my word for it. As an example, in the IP telephony world there was long ago a saying: “if you can ping it, you can ring it”. We have evolved beyond that by leveraging services provided by the underlying infrastructure to deliver a solid Unified Communications experience.

Thank you,

David

David,

The important commonality of Server Virtualization to Network Virtualization is *decoupling*, that’s what matters. Decoupling provides automation and mobility on commonly available hardware with architecture independence. Are there some differences in implementation details? Of course.

A server is a piece of hardware, and a network is a *service*, so of course Server Virtualization and Network Virtualization are different in that sense. Server Virtualization must emulate hardware, because the workload expects to see an x86 machine. The network, on the other hand, is *not* hardware, it’s a service, which for the last 30 years has been delivered by hardware (prior to decoupling). Network Virtualization doesn’t need to emulate hardware: the workload consuming the network service doesn’t care one bit about the hardware or form factor of the devices forwarding its traffic.

The similarity lies in the fact that compute and network are decoupled into a software layer at the edge host (the hypervisor and its vswitch). The fact is, the first network device the workload is attached to is a vswitch on its own hypervisor, so it makes perfect sense to emulate the desired network services right there at the same edge software layer and achieve the coveted *decoupling*.

Network Virtualization makes sense because of Server Virtualization, a virtual network for virtual servers. We’ve been eating Peanut Butter (server virtualization) sandwiches for the last decade — tastes pretty good, but something was missing. Now it’s time to add the Jelly (network virtualization).

Cheers,

Brad

Brad,

As I’ve said before, the only virtualization analogy that applies to Network Virtualization is a VM running on VM Player. Yeah it’s virtualized, yeah it’s running, but it has no visibility or control of its effect on the underlying system or the underlying system’s effect on it.

Joe

Brad .. check summary below from the January SDNCentral @Churchill Club. Customer is speaking on need for more visibility down to queues and counters.

Thomas

http://www.sdncentral.com/market/sdn-churchill-club-long-live-sdn/2013/01/

View on L2 versus L3 hooks into network? Najam’s view is that L2 is dead and that he only cares about L3 networks. Martin concurred. In reality, what Najam really wants is the switches to just report visibility into queues, local resource utilization etc up to a central control plane and for that control plane to make all the intelligent decisions. Today, all he gets is SNMP. And as he eloquently put it “SNMP is dead, long live SNMP”.

Thomas,

Visibility into queues, link utilization, and local resources … Sure, why not. That doesn’t preclude decoupling. At the end of the day it’s just data collected, stored, and synthesized by software. If that’s the coveted “hop-by-hop intelligence” we’re worried about, Network Virtualization doesn’t break that.

Cheers,

Brad

David,

Thanks for the insight; but may I please gently press the original question, as the answer intrigues me greatly?

What is the crucial role that hop by hop intelligence plays in the DC networks?

Cheers,

— Dmitri
