High availability, disaster recovery, business continuity, etc. are all key concerns of any data center design. They all describe separate components of one big concept: ‘When something does go wrong, how do I keep doing business?’

Very public real-world disasters have taught us as an industry valuable lessons in what real business continuity requires. The concepts of off-site archives and Disaster Recovery (DR) can be at least partially attributed to the Oklahoma City bombing. Prior to that, having only local or off-site tape archives was commonly acceptable: if data gets lost, I get the tape and restore it. That worked well until we saw what happens when you have all the data and no data center to restore to.

September 11th, 2001 taught us another lesson, this time about distance. There were companies with the primary data center in one tower and the DR data center in the other. While that may seem laughable now, it wasn’t unreasonable then. There were latency and locality gains from the setup, and the idea that both world-class engineering marvels could come down was far-fetched.

With lessons learned we’re now all experts in the needs of DR, right up until the next unthinkable happens ;-). Sarcasm aside, we now have a better set of recommended practices for DR solutions to provide Business Continuity (BC). It’s commonly accepted that the minimum distance between sites should be 50 km. A 50 km separation will protect from an explosion, a power outage, and several other events, but it probably won’t protect from a major natural disaster such as an earthquake or hurricane. If those are concerns the distance increases, and you may end up with more than two data centers.

There are obviously significant costs involved in running a DR data center. Due to these costs, the concept of running a ‘dark’ standby data center has gone away. If we pay for compute, storage, and network, we want to be utilizing them. Running Test/Dev systems or other non-frontline, non-mission-critical applications is one option, but ideally both data centers could be used in an active fashion for production workloads, with the ability to fail over for disaster recovery or avoidance.

While solutions for this exist within the high-end Unix platforms and mainframes, it has been a tough nut to crack in the x86/x64 commodity server market. The reason for this is that we’ve designed our commodity server environments as individual application silos directly tied to the operating system and underlying hardware. This makes it extremely complex to decouple the application and allow it to live resident in two physical locations, or at least migrate non-disruptively between the two.

In steps VMware and server virtualization, with the ability to decouple the operating system and application from the hardware they reside on. With the direct hardware tie removed, applications running in operating systems on virtual hardware can be migrated live (without disruption) between physical servers. This is known as vMotion. This application mobility puts us one step closer to active/active data centers from a Disaster Avoidance (DA) perspective, but it doesn’t come without some catches: bandwidth, latency, Layer 2 adjacency, and shared storage.

The first two challenges can be addressed between data centers using two tools: distance and money. You can always spend more money to buy more WAN/MAN bandwidth, but you can’t beat physics, so latency is dependent on the speed of light and therefore on distance. Even with those two problems solved, there has traditionally been no good way to solve the Layer 2 adjacency problem. By Layer 2 adjacency I’m talking about the same VLAN/broadcast domain, i.e. MAC-based forwarding. Solutions have existed and still exist to provide this adjacency across MAN and WAN boundaries (EoMPLS and VPLS), but they are typically complex and difficult to manage at scale. Additionally, these protocols tend to be cumbersome due to L2 flooding behaviors.
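To put rough numbers on the physics, here is a minimal Python sketch of the propagation delay over fiber. The refractive index of 1.47 is an assumed typical value for single-mode fiber, and the function names are mine for illustration. It only covers propagation delay, so real links will be slower once you add equipment delay and fiber paths that are longer than the straight-line distance.

```python
# Rough propagation-delay estimate for a fiber link between two data centers.
# Assumption: refractive index ~1.47 (typical single-mode fiber), so light
# travels at roughly two thirds of its vacuum speed inside the glass.

SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum
FIBER_REFRACTIVE_INDEX = 1.47          # assumed, not taken from any vendor spec


def fiber_rtt_ms(distance_km, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Return the round-trip propagation delay in milliseconds."""
    one_way_s = distance_km * refractive_index / SPEED_OF_LIGHT_KM_PER_S
    return 2 * one_way_s * 1000


def max_distance_km(rtt_budget_ms, refractive_index=FIBER_REFRACTIVE_INDEX):
    """Return the longest fiber run that fits inside a round-trip budget."""
    one_way_s = rtt_budget_ms / 1000 / 2
    return one_way_s * SPEED_OF_LIGHT_KM_PER_S / refractive_index


if __name__ == "__main__":
    for km in (50, 100, 500):
        print(f"{km} km of fiber: {fiber_rtt_ms(km):.2f} ms round trip")
    # 5 ms is a hypothetical application budget used only for illustration.
    print(f"5 ms budget allows about {max_distance_km(5):.0f} km of fiber")
```

At 50 km this works out to roughly half a millisecond of round-trip delay from propagation alone, which is the part no amount of money will buy back.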

Up next is Cisco with Overlay Transport Virtualization (OTV). OTV is a Layer 2 extension technology that utilizes MAC routing to extend Layer 2 boundaries between physically separate data centers. OTV offers both simplicity and efficiency in an L2 extension technology by pushing this routing to Layer 2 and negating the flooding behavior of unknown unicast. With OTV in place, a VLAN can safely span a MAN or WAN boundary, providing Layer 2 adjacency to hosts in separate data centers. This leaves us with one last problem to solve.
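Before moving on, the MAC routing idea can be illustrated with a toy model in Python. This is only a sketch of the concept described above, not Cisco's implementation: the `EdgeDevice` class, its methods, and the MAC addresses are all hypothetical. The point it shows is that remote MAC addresses are learned from advertisements between sites, and a frame for an unknown destination is not flooded across the link between data centers.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    """Toy model of a Layer 2 extension edge device at one site (hypothetical)."""
    site: str
    local_macs: set = field(default_factory=set)
    # MAC -> remote site, learned from advertisements rather than from flooding
    remote_routes: dict = field(default_factory=dict)

    def advertise(self, peers):
        """Tell every peer edge device which MACs live at this site."""
        for peer in peers:
            for mac in self.local_macs:
                peer.remote_routes[mac] = self.site

    def forward(self, dst_mac):
        """Decide what to do with a frame headed for dst_mac."""
        if dst_mac in self.local_macs:
            return "deliver locally"
        if dst_mac in self.remote_routes:
            return f"send to {self.remote_routes[dst_mac]}"
        # Unknown unicast stays inside the site instead of crossing the WAN.
        return "not forwarded across the overlay"


if __name__ == "__main__":
    dc1 = EdgeDevice("DC1", local_macs={"00:00:00:00:00:01"})
    dc2 = EdgeDevice("DC2", local_macs={"00:00:00:00:00:02"})
    dc1.advertise([dc2])
    dc2.advertise([dc1])
    print(dc1.forward("00:00:00:00:00:02"))
    print(dc1.forward("00:00:00:00:00:99"))
```

Contrast this with a plain stretched VLAN, where the frame for the unknown address would be flooded to the far site and consume inter-site bandwidth.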

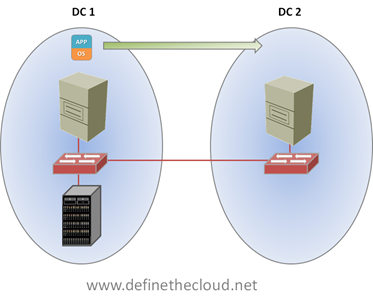

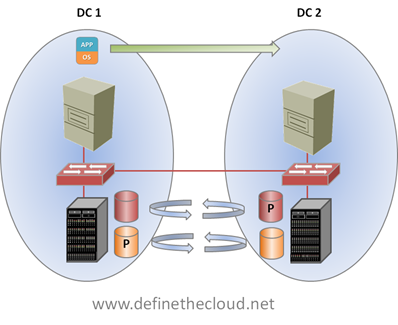

The last step in putting the plumbing together for Long Distance vMotion is shared storage. In order for the magic of vMotion to work, both the server the Virtual Machine (VM) is currently running on, and the server the VM will be moved to must see the same disk. Regardless of protocol or disk type both servers need to see the files that comprise a VM. This can be accomplished in many ways dependent on the storage protocol you’re using, but traditionally what you end up with is one of the following two scenarios:

In the diagram above we see that both servers can access the same disk, but the server in DC 2 must access the disk across the MAN or WAN boundary, increasing latency and decreasing performance. The second option is:

In the second diagram above we see storage replication at work. At first glance it looks like this would solve our problem, as the data would be available in both data centers. However, this is not the case. With existing replication technologies the data is only active or primary in one location, meaning it can only be read from and written to on a single array. The replicated copy is available only for failover scenarios. This is depicted by the P in the diagram. While each controller/array may own active disk as shown, any given disk is only accessible on a single side at a single time. That is, until VPLEX.

EMC’s VPLEX provides the ability to have active/active read/write copies of the same data in two places at the same time. This solves our problem of having to cross the MAN/WAN boundary for every disk operation. Using VPLEX, the virtual machine data can be accessed locally within each data center.

Putting both pieces together we have the infrastructure necessary to perform a Long Distance vMotion as shown above.

Summary:

OTV and VPLEX provide an excellent and unique infrastructure for enabling long-distance vMotion. They are the best available ‘plumbing’ for use with VMware for disaster avoidance. I use the term plumbing because they are just part of the picture: the pipes. Many other factors come into play, such as rerouting incoming traffic, backup, and disaster recovery. When properly designed and implemented for the correct use cases, OTV and VPLEX provide a powerful tool for increasing the productivity of active/active data center designs.

Great post Joe!

Similar to the server side of things, it seems we’re making a big circle here as well. Where mainframes were once the big thing, we broke that all out into one app per server. Now in walks virtualization and we’re coexisting all over again.

Similar to OTV, it was once all about segmenting things out via VLANs. I remember working for a company where we had a different VLAN for everything (one dedicated to Citrix, one to AD, one to Unix servers, users, etc). Some I think we created just because we could 😉 but for some there were valid reasons.

Do you see any special design considerations when thinking about the larger L2 domains that OTV naturally brings us? I’m not saying we’re going to stop segmenting things via VLANs, but L2 domains will be larger due to technologies such as OTV.

Just to throw it out there, I think the same can be said about VDI environments. Again, referencing my earlier days in IT, in large shops, we had a VLAN per floor for the desktop environment. Bringing those desktops all back together and hosting them out of the datacenter, I don’t see a need for the separation unless there are specific security requirements.

Chris,

Thanks for reading the blog and for the feedback. As far as VLANs are concerned, don’t forget they aren’t really about security; they’re about shrinking the L2 domain. Layer 2 domains do a lot of flooding. Both multicast and broadcast are handled by a switch in the same way at Layer 2: replicated to every port except the one the frame came in on. This means every ARP, DHCP request, etc. gets flooded through the network. This becomes cumbersome and costly because each NIC/port must read the header of each frame to determine if the destination MAC is its own. VLANs were designed to alleviate this problem and shrink the broadcast domain.

Since the original implementation we’ve begun to utilize VLANs for security purposes such as DMZs, and for things like application separation, but shrinking the broadcast domain is still important.

Joe

Hi there! Great post! I saw the update on Twitter so I decided to read the article.

I understand routed vMotion is not supported by VMware yet, right? Do you know of a success case in which OTV was used for non-LAN distances? It hasn’t been tried in my country yet, and though we think it would work, we are hesitant to implement it since we have had no references at all. I would appreciate your feedback.

Thanks for sharing all this, you have an awesome blog!

Noelle,

Thanks for reading, I’m glad you like the content! I checked with some colleagues and got some great feedback from @bradhedlund and @ccie5851 on Twitter:

OTV is not distance dependent, meaning it can stretch across MAN or WAN boundaries as needed, and it is being used effectively across long distances. That being said, OTV is only stretching the Layer 2 domain, so the applications you run on top of OTV must be taken into consideration. If you’re planning on using OTV for things like clustering or vMotion, you’ll need to ensure latency meets application requirements. In use cases like VMware Site Recovery Manager (SRM) or other Disaster Recovery (DR) purposes, latency may be less of an issue.

The bottom line is that OTV will extend your Layer 2 domain and is distance agnostic. Application requirements will determine what distances and latencies are appropriate across the OTV extension.

Joe

This scenario uses VPLEX Geo, right? Otherwise, you still have the distance limitation.

Brandon,

The scenario above is not using VPLEX Geo as it’s not yet shipping. As such, you are correct that distance limitations will come into play due to the synchronous replication nature of the current VPLEX deployment model.
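As a rough illustration of why synchronous replication limits distance, here is a simplified Python sketch. It assumes each write is acknowledged only after one full round trip to the remote site on top of the local write time. Real products may need more than one round trip per write, and the function names and sample numbers are hypothetical.

```python
# Simplified model: a synchronous write completes only after the remote
# site has confirmed it, so every write pays the inter-site round trip.
# Assumption: exactly one round trip per write (real protocols may need more).


def sync_write_latency_ms(local_write_ms, inter_site_rtt_ms):
    """Return the latency of one synchronously replicated write."""
    return local_write_ms + inter_site_rtt_ms


def serialized_writes_per_second(write_latency_ms):
    """Return how many back-to-back writes a single stream can complete."""
    return 1000.0 / write_latency_ms


if __name__ == "__main__":
    local_ms = 0.5  # hypothetical local array write time
    for rtt_ms in (0.5, 1.0, 5.0, 20.0):  # hypothetical inter-site round trips
        total = sync_write_latency_ms(local_ms, rtt_ms)
        rate = serialized_writes_per_second(total)
        print(f"RTT {rtt_ms} ms: {total} ms per write, {rate:.0f} writes/s")
```

As the round trip grows with distance, it quickly dominates the write time, which is why synchronous designs are kept to metro distances.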

Joe

Hi Joe,

Thanks for sharing this post.

As you mentioned, there are really two challenges in maintaining active/active data centers. The first is the data network, while the second is the storage network. With specific reference to storage, have you given any thought to the options around extending the SAN/FC network between two data centers? I guess I’m curious as to what other architects are doing. Are they extending their SAN/FC networks over dark fiber, keeping the distance under 50 km, or under 80 km? Are they utilizing a DWDM network? Are they utilizing some type of protocol router or WAN accelerator to encapsulate FC frames into IP or Ethernet?

Thanks!

Michael,

Typically the SAN extension methodology used depends a lot on what storage you’re using and what you’re doing with it. Many storage vendors have replication technologies that utilize IP natively and therefore have no requirement for SAN extension. If the storage does not support this, or you require active/active access to SAN resources from both data centers, then SAN extension is the way to go.

As far as methodologies go, the things you mention are the most common:

Dark fiber for short distances, CWDM/DWDM for performance/throughput over longer distances, and FCIP for low-cost extension over an IP network. FCIP does not require an external device or router if you’re using an FC switch that has the capability built in, such as the Cisco MDS 9222i. FCIP as a whole works well as long as it is properly designed and the pipe between data centers is correctly tuned for the FCIP traffic.

Joe
