When discussing the underlying technologies for cloud computing topologies, virtualization is typically a key building block. Virtualization can be applied to any portion of the data center architecture, from load balancers to routers and from servers to storage. Server virtualization is one of the most widely adopted virtualization technologies and provides a great deal of benefit to the server architecture.

One of the most common challenges with server virtualization is the networking. Virtualized servers typically consist of networks of virtual machines that are configured by the server team with little to no management/monitoring possible from the network/security teams. This causes inconsistent policy enforcement between physical and virtual servers as well as limited network functionality for virtual machines.

Virtual Networks

The separate network management models for virtual and physical servers present challenges for policy enforcement, compliance, and security, and add complexity to the configuration and architecture of virtual server environments. Because of this, many vendors are designing products and solutions to help draw these networks closer together.

The following is a discussion of three products that can be used for this: HP’s Flex-10 adapters, Cisco’s Nexus 1000v, and Cisco’s Virtual Interface Card (VIC).

This is not a pro/con comparison or a discussion of which is better, just an overview of each technology and how it relates to VMware.

HP Flex-10 for Virtual Connect:

Using HP’s Virtual Connect switching modules for C-Class blades and either Flex-10 adapters or LAN-on-Motherboard (LOM), administrators can ‘partition the bandwidth of a single 10Gb pipeline into multiple “FlexNICs.” In addition, customers can regulate the bandwidth for each partition by setting it to a user-defined portion of the total 10Gb connection. Speed can be set from 100 Megabits per second to 10 Gigabits per second in 100 Megabit increments.’ (http://bit.ly/boRsiY)

This allows a single 10GE uplink to be presented to any operating system as four physical Network Interface Cards (NICs).

FlexConnect

To perform this interface virtualization, FlexConnect uses internal VLAN mappings for traffic segregation within the 10GE Flex-10 port (the mid-plane blade chassis connection between the Virtual Connect Flex-10 10GbE interconnect module and the Flex-10 NIC device). Each FlexNIC can present one or more VLANs to the installed operating system.

Some of the advantages with this architecture are:

- A single 10GE link can be divided into 4 separate logical links, each with a defined portion of the bandwidth.

- More interfaces can be presented from fewer physical adapters, which is extremely advantageous within the limited space available on blade servers.

When the installed operating system is VMware, this allows 2x10GE links to be presented to VMware as 8 separate NICs and used for different purposes such as vMotion, Fault Tolerance (FT), Service Console, VMkernel, and data.
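The partitioning rules described above (up to four FlexNICs per 10GE port, each set between 100 Mb/s and 10 Gb/s in 100 Mb/s steps) can be sketched as a small validation routine. This is only an illustrative model, not HP tooling: the class and function names are hypothetical, the example speeds are made up, and the check that the partitions may not add up to more than 10 Gb/s is my assumption based on each FlexNIC being "a user-defined portion of the total 10Gb connection."

```python
from dataclasses import dataclass
from typing import List

PORT_CAPACITY_MBPS = 10_000   # one 10GE Flex-10 port
MAX_FLEXNICS_PER_PORT = 4     # FlexNICs presented per 10GE port
STEP_MBPS = 100               # speeds are set in 100 Mb/s increments


@dataclass(frozen=True)
class FlexNic:
    name: str         # hypothetical label, e.g. the traffic type carried
    speed_mbps: int   # configured speed of this partition


def validate_port(flexnics: List[FlexNic]) -> List[str]:
    """Return a list of rule violations; an empty list means the layout is acceptable."""
    problems = []
    if len(flexnics) > MAX_FLEXNICS_PER_PORT:
        problems.append(
            f"{len(flexnics)} FlexNICs requested, maximum is {MAX_FLEXNICS_PER_PORT}"
        )
    for nic in flexnics:
        if not STEP_MBPS <= nic.speed_mbps <= PORT_CAPACITY_MBPS:
            problems.append(f"{nic.name}: speed out of range")
        elif nic.speed_mbps % STEP_MBPS != 0:
            problems.append(f"{nic.name}: speed is not a multiple of {STEP_MBPS} Mb/s")
    total = sum(nic.speed_mbps for nic in flexnics)
    if total > PORT_CAPACITY_MBPS:  # assumption: partitions may not oversubscribe the port
        problems.append(f"total {total} Mb/s exceeds {PORT_CAPACITY_MBPS} Mb/s")
    return problems


# One 10GE port split for a VMware host (example speeds are invented)
port = [
    FlexNic("vMotion", 2000),
    FlexNic("FT", 1000),
    FlexNic("Service Console", 500),
    FlexNic("VM data", 6500),
]
print(validate_port(port))  # []
```

With two such ports per blade, the same check would simply be run once per port to describe the eight NICs VMware sees.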

The requirements for Flex-10 as described here are:

- HP C-Class blade chassis

- VC Flex-10 10GE interconnect module (HP blade switches)

- Flex-10 LOM and/or Mezzanine cards

Cisco Nexus 1000v:

‘Cisco Nexus® 1000V Series Switches are virtual machine access switches that are an intelligent software switch implementation for VMware vSphere environments running the Cisco® NX-OS operating system. Operating inside the VMware ESX hypervisor, the Cisco Nexus 1000V Series supports Cisco VN-Link server virtualization technology to provide:

• Policy-based virtual machine (VM) connectivity

• Mobile VM security and network policy, and

• Non-disruptive operational model for your server virtualization, and networking teams’ (http://bit.ly/b4JJX5).

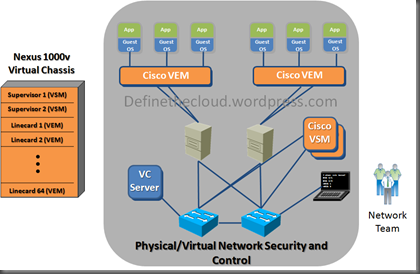

The Nexus 1000v is a Cisco software switch that is placed in the VMware environment and provides physical-style network control and monitoring for VMware virtual networks. The Nexus 1000v comprises two components: the Virtual Supervisor Module (VSM) and the Virtual Ethernet Module (VEM). The Nexus 1000v does not have hardware requirements and can be used with any standards-compliant physical switching infrastructure. Specifically, the upstream switch should support 802.1Q trunks and LACP.

Cisco Nexus 1000v

Using the Nexus 1000v, network teams have complete control over the virtual network and manage it using the same tools and policies used on the physical network.

Some advantages of the 1000v are:

- Consistent policy enforcement for physical and virtual servers

- vMotion-aware policies that migrate with the VM

- Increased security, visibility, and control of virtual networks
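The idea that policy migrates with the VM can be pictured with a toy model in which the policy is attached to the VM's virtual port rather than to a host or physical switch port. This is a conceptual sketch only: it is not Nexus 1000v code or NX-OS syntax, and every name in it (`PortProfile`, `Host`, `vmotion`, the VLAN and ACL values) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class PortProfile:
    """Network policy defined once by the network team (hypothetical fields)."""
    name: str
    vlan: int
    acl: str


@dataclass
class Host:
    """A hypervisor host; maps each running VM to the profile on its virtual port."""
    name: str
    attached: Dict[str, PortProfile] = field(default_factory=dict)


def vmotion(vm_name: str, source: Host, target: Host) -> None:
    """Move a VM between hosts; the profile on its virtual port travels with it."""
    target.attached[vm_name] = source.attached.pop(vm_name)


web = PortProfile("web-servers", vlan=10, acl="permit-http-only")
esx1 = Host("esx1", {"vm-web01": web})
esx2 = Host("esx2")

vmotion("vm-web01", esx1, esx2)
print(esx2.attached["vm-web01"].name)  # web-servers
```

The point of the model is that nothing about the policy is re-entered on the target host: the same profile object follows the VM.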

The requirements for Cisco Nexus 1000v are:

- vSphere 4.0 or higher

- VMware vSphere Enterprise Plus license

- A VEM license for each physical host CPU

- vCenter Server

Cisco Virtual Interface Card (VIC):

The Cisco VIC provides interface virtualization similar to the Flex-10 adapter. One 10GE port can be presented to an operating system as up to 128 virtual interfaces, depending on the infrastructure. ‘The Cisco UCS M81KR presents up to 128 virtual interfaces to the operating system on a given blade. The virtual interfaces can be dynamically configured by Cisco UCS Manager as either Fibre Channel or Ethernet devices’ (http://bit.ly/9RT7kk).

Fibre Channel interfaces are known as vFC and Ethernet interfaces are known as vEth; they can be used in any combination up to the architectural limits. Currently the VIC is only available for Cisco UCS blades, but it will be supported on UCS rack-mount servers as well by the end of 2010. Interfaces are segregated using an internal tagging mechanism known as VN-Tag, which does not use VLAN tags and operates independently of VLAN operation.
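As a rough illustration of those two points (any mix of vEth and vFC up to the limit, and segregation by an internal tag that is independent of VLAN), here is a small sketch. It is a model only, not UCS Manager behavior: the names are hypothetical, the sequential tag numbering is invented for the example, and 128 is simply the upper bound quoted above.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_VIRTUAL_INTERFACES = 128  # upper bound quoted for the UCS M81KR


@dataclass(frozen=True)
class VirtualInterface:
    tag: int                    # stand-in for the internal VN-Tag identifier (invented numbering)
    kind: str                   # "vEth" or "vFC"
    vlan: Optional[int] = None  # 802.1Q VLAN for a vEth; unused for vFC in this model


def build_interfaces(veth_vlans: List[int], vfc_count: int) -> List[VirtualInterface]:
    """Create one vEth per listed VLAN plus vfc_count vFCs, each with its own tag."""
    if vfc_count < 0:
        raise ValueError("vfc_count must not be negative")
    total = len(veth_vlans) + vfc_count
    if total > MAX_VIRTUAL_INTERFACES:
        raise ValueError(f"{total} interfaces requested, limit is {MAX_VIRTUAL_INTERFACES}")
    interfaces = [
        VirtualInterface(tag, "vEth", vlan)
        for tag, vlan in enumerate(veth_vlans, start=1)
    ]
    first_vfc_tag = len(interfaces) + 1
    interfaces += [VirtualInterface(first_vfc_tag + i, "vFC") for i in range(vfc_count)]
    return interfaces


# Two vEths share VLAN 10 yet remain distinct interfaces because their tags differ.
ifaces = build_interfaces([10, 10, 20], vfc_count=2)
for iface in ifaces:
    print(iface.tag, iface.kind, iface.vlan)
```

In this model the first two interfaces carry the same VLAN but different tags, which is the sense in which the tagging operates independently of VLANs.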

Virtual Interface Card

Each virtual interface acts as if it were directly connected to a physical switch port and can be configured in access or trunk mode using standard 802.1Q trunking. These interfaces can then be used by any operating system or by VMware. For more information on their use, see my post Defining VN-Link (http://bit.ly/ddxGU7).

VIC Advantages:

- Granular configuration of multiple Fibre Channel and Ethernet ports on one 10GE link.

- Single point of network configuration handled by a network team rather than a server team.

Requirements:

- Cisco UCS B-series blades (until C-Series support is released)

- Cisco Fabric Interconnect access layer switches/managers.

Summary:

Each of these products has benefits in specific use cases and can reduce overhead and/or administration for server networks. When combining two or more of these products, carefully analyze the benefits of each and identify features that may be sacrificed by the combination. For instance, using the Nexus 1000v along with FlexConnect adds a server-administered network management layer between the physical network and the virtual network.

Nexus 1000v with Flex-10

Comments and corrections are always welcome.

Joe, I really appreciate the detailed analysis. There is a lot of confusion about these technologies in the field. Interoperability of these competitive technologies seemed to be very challenging. Looking forward to seeing the designs around these.

Joe,

One more advantage of the Cisco VIC is that you do not need to “define portions of bandwidth” to each virtual adapter like you need to do with Flex-10 FlexNICs.

Imagine if you had a 10 lane highway where lanes 1 & 2 were only for vMotion traffic. If lanes 3-10 are very congested, and lanes 1 & 2 are wide open, too bad you can’t use those lanes. Inversely, if lanes 1 & 2 are congested with vMotion traffic, and lanes 3-10 are wide open, too bad you can’t use them, vMotion is stuck in lanes 1-2.

Those are the highway rules of Flex-10, not Cisco VIC.

The Cisco VIC highway says that while lanes are still marked for certain traffic types, any traffic can drive in any lane if it’s open. A simple rule states that if you are using somebody else’s lane and that lane gets congested, you must go back to your own lanes.

This is the fair sharing of bandwidth employed by Cisco UCS and the Cisco VIC, otherwise known as “Quality of Service” (QoS).

By the way, I love the last graphic in this post — very true.

Nice post.

Cheers,

Brad

(Cisco)

@Brad

Flex-10 doesn’t work like this. I can screenshot it. You don’t need to cap or set a static rate.

Jeffrey,

My understanding is as Brad states, that the limit is actually capped; the GUI doesn’t intuitively state this, but it happens under the covers. If you have documentation to the contrary I’d love to see it, I’m always open to learning something new.

Joe

Will this configuration apply to a Cisco 6509 with WS-X6704-10GE blade cards?

Brad,

Thanks for the reply and the additional info. QoS configuration and the use of the Bandwidth Management features of DCB (http://bit.ly/c3HB1N) are excellent and align with the network policy consistency message of the VIC.

LinkWaves,

All of the technologies described above are currently blade server I/O cards or VMware virtual switches. Interaction with a Cisco Catalyst 6509 would be as follows:

HP Flex-10:

The Flex-10 adapter requires use of the Virtual Connect Flex-10 blade switches, which could be uplinked to the Catalyst 6509.

VIC:

Currently the VIC is only supported within UCS blades, which use the Cisco Fabric Interconnects for their access layer connectivity. The Fabric Interconnect could be uplinked to a 6509.

Nexus 1000v:

The Nexus 1000v is a software switch that resides in the VMware hypervisor. It can be connected to any standards-based Ethernet switch, including the Catalyst 6509.

The Catalyst series will not be able to directly manage a VIC due to the tagging mechanism used (VN-Tag). VIC-equipped C-Series servers (future) will connect to a Fabric Interconnect.

[…] One of the major challenges when looking into cloud architectures is the organizational/process shifts required to make the transition. Technology refreshes seem daunting but changing organizational structure and internal auditing/change management can make rip-and-replace seem like child’s play. In this post I’ll discuss some ways in which to simplify the migration into cloud (there’s a vaporize your silos pun hidden around here somewhere.) For more background see my post on barriers to adoption for cloud computing (http://www.definethecloud.net/?p=73.) […]
