Virtualization is a key piece of modern data center design. Virtualization occurs on many devices within the data center; conceptually, it is the ability to create multiple logical devices from one physical device. We’ve been virtualizing hardware for years: VLANs and VRFs on the network, volumes and LUNs on storage, and even our servers were virtualized as far back as the 1970s with LPARs. Server virtualization went mainstream in the data center when VMware began effectively partitioning clock cycles on x86 hardware, allowing virtualization to move from big iron to commodity servers.

This post is the next segment of my Data Center 101 series and focuses on server virtualization, specifically virtualizing x86/x64 server architectures. If you’re not familiar with the basics of server hardware, take a look at ‘Data Center 101: Server Architecture’ (http://www.definethecloud.net/?p=376) before diving in here.

What is server virtualization:

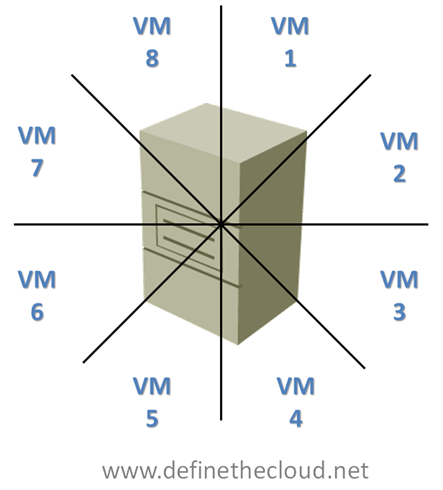

Server virtualization is the ability to take a single physical server system and carve it up like a pie (mmmm pie) into multiple virtual hardware subsets.

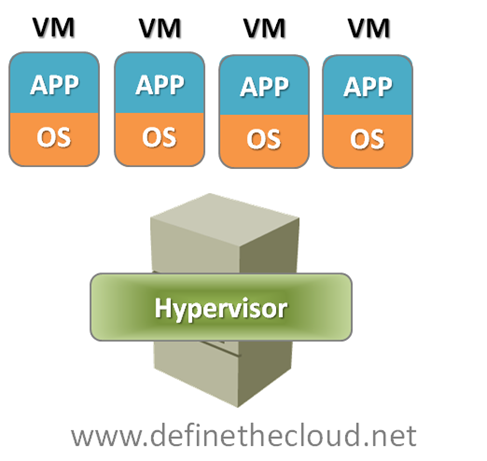

Each Virtual Machine (VM), once created or carved out, operates much like an independent physical server. Typically each VM is provided with a set of virtual hardware on which an operating system and applications can be installed as if it were a physical server.
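To make the "carving" idea concrete, here is a minimal Python sketch of a host handing out slices of its capacity to VMs. The `Host` and `VirtualHardware` names, their fields, and the numbers are hypothetical, and the sketch assumes no overcommitment (real hypervisors often let CPU and memory be overcommitted), so treat it as an illustration rather than how any particular hypervisor allocates resources.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VirtualHardware:
    """Hypothetical description of the virtual hardware given to one VM."""
    name: str
    vcpus: int
    memory_gb: int
    disk_gb: int


@dataclass
class Host:
    """A physical server whose capacity is carved into VMs (no overcommit assumed)."""
    cores: int
    memory_gb: int
    disk_gb: int
    vms: list = field(default_factory=list)

    def free(self) -> tuple:
        """Return the (cores, memory_gb, disk_gb) not yet assigned to any VM."""
        return (
            self.cores - sum(vm.vcpus for vm in self.vms),
            self.memory_gb - sum(vm.memory_gb for vm in self.vms),
            self.disk_gb - sum(vm.disk_gb for vm in self.vms),
        )

    def carve(self, vm: VirtualHardware) -> bool:
        """Assign a slice of the host to `vm` only if every resource still fits."""
        cores, memory_gb, disk_gb = self.free()
        if vm.vcpus <= cores and vm.memory_gb <= memory_gb and vm.disk_gb <= disk_gb:
            self.vms.append(vm)
            return True
        return False


host = Host(cores=8, memory_gb=32, disk_gb=500)
print(host.carve(VirtualHardware("mail", vcpus=4, memory_gb=16, disk_gb=200)))  # True
print(host.carve(VirtualHardware("dhcp", vcpus=1, memory_gb=1, disk_gb=20)))    # True
print(host.carve(VirtualHardware("db", vcpus=4, memory_gb=16, disk_gb=200)))    # False: only 3 cores left
print(host.free())  # (3, 15, 280)
```

Each VM sees only its own slice, while the host keeps track of what remains for future VMs.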

Why virtualize servers:

Virtualization has several benefits when done correctly:

- Reduction in infrastructure costs, because less server hardware is required:
  - Power
  - Cooling
  - Cabling (dependent upon design)
  - Space

- Availability and management benefits:
  - Many server virtualization platforms provide automated failover for virtual machines.
  - Centralized management and monitoring tools exist for most virtualization platforms.

- Increased hardware utilization:
  - Standalone servers traditionally suffer from utilization rates as low as 10%. By placing multiple virtual machines with separate workloads on the same physical server, much higher utilization rates can be achieved. This means you’re actually using the hardware you purchased and are paying to power and cool.
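The utilization argument is largely arithmetic. The sketch below estimates how many hosts a group of lightly loaded servers could be consolidated onto. It assumes, hypothetically, that load simply adds up, that each host has the same capacity as one of the old servers, and that hosts are capped at a target utilization to leave headroom; real sizing also has to account for memory, I/O, peaks, and failover capacity. The function name and figures are illustrative only.

```python
def consolidation_estimate(server_count: int, avg_util_pct: int, target_util_pct: int) -> tuple:
    """Return (hosts_needed, resulting average utilization %) under an additive-load model.

    Loads are expressed as a percent of one server's capacity, and each host is
    assumed to have the same capacity as one of the original servers.
    """
    total_load_pct = server_count * avg_util_pct    # e.g. 20 servers x 10% = 200%
    hosts = -(-total_load_pct // target_util_pct)    # integer ceiling division
    return hosts, total_load_pct / hosts


hosts, util = consolidation_estimate(server_count=20, avg_util_pct=10, target_util_pct=70)
print(hosts, round(util, 1))  # 3 66.7
```

Under these assumptions, twenty servers idling at 10% fit on three hosts running at roughly two-thirds utilization.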

How does virtualization work?

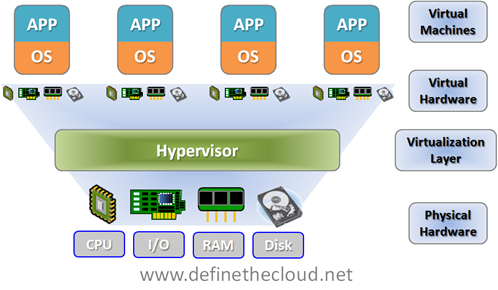

Within an enterprise data center, servers are typically virtualized using a bare-metal hypervisor. This is a virtualization operating system that installs directly on the server hardware without the need for a supporting operating system. In this model the hypervisor is the operating system and the virtual machine is the application.

Each virtual machine is presented a set of virtual hardware upon which an operating system can be installed. The fact that the hardware is virtual is transparent to the operating system. The key components of a physical server that are virtualized are:

- CPU cycles

- Memory

- I/O connectivity

- Disk

At a very basic level, memory and disk capacity, I/O bandwidth, and CPU cycles are shared amongst the virtual machines. This allows multiple virtual servers to use a single physical server’s capacity while maintaining a traditional OS-to-application relationship. The reason this does such a good job of increasing utilization is that you’re spreading several applications across one set of hardware. Applications typically peak at different times, which produces a more constant level of utilization.

For example, imagine an email server. An email server typically peaks at 9am, possibly again after lunch, and once more before quitting time; the rest of the day it’s greatly underutilized (that’s why marketing email is typically sent late at night). Now picture a traditional backup server: backups historically run at night, when other servers are idle, to prevent performance degradation. In a physical model each of these servers would have been architected for peak capacity to support its maximum load, yet would sit underutilized most of the day. In a virtual model they can both run on the same physical server and complement one another thanks to their differing peak times.
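Complementary peaks matter because sizing servers separately means provisioning for the sum of their individual peaks, while sharing a host only requires capacity for the peak of their combined load. The hourly figures below are invented to mirror the email/backup example (expressed as a percent of one server’s capacity), and `hourly_profile` is a hypothetical helper.

```python
def hourly_profile(baseline: int, peaks: dict) -> list:
    """Build a 24-hour load profile: `baseline` everywhere, overridden at peak hours."""
    profile = [baseline] * 24
    for hour, load in peaks.items():
        profile[hour] = load
    return profile


email = hourly_profile(5, {9: 60, 13: 45, 17: 50})           # morning, lunch, end of day
backup = hourly_profile(5, {h: 70 for h in range(0, 5)})      # overnight backup window

# Dedicated servers: each one must be sized for its own peak.
sum_of_peaks = max(email) + max(backup)

# Shared host: only the combined load at each hour matters.
combined = [e + b for e, b in zip(email, backup)]
peak_of_sum = max(combined)

print(sum_of_peaks, peak_of_sum)  # 130 75
```

Because the two workloads never peak in the same hour, the shared host needs far less capacity than the two dedicated servers combined.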

Another use of virtualization is hardware refresh. DHCP servers are a great example: they provide automatic IP addressing by leasing IP addresses to requesting hosts, and these leases are typically held for 30 days. DHCP is not an intensive workload. In a physical server environment it wouldn’t be uncommon to have two or more physical DHCP servers for redundancy. Because of the light workload, these servers would use minimal hardware, for instance:

- 800 MHz processor

- 512 MB RAM

- 1x 10/100 Ethernet port

- 16 GB internal disk

If this physical server were 3-5 years old, replacement parts and service contracts would be hard to come by; additionally, because of hardware advancements, the server may be more expensive to keep than to replace. When looking to refresh this server, the same hardware would no longer be available. A typical minimal server today would be:

- 1+ GHz dual- or quad-core processor

- 1 GB or more of RAM

- 2x onboard 1 GbE ports

- 136 GB internal disk

The application requirements haven’t changed, but the hardware has moved on. Therefore, refreshing the same DHCP server onto new hardware results in even greater underutilization than before. Virtualization solves this by placing the DHCP server on a virtualized host, sizing its virtual hardware to the application’s requirements, and sharing the remaining resources with other applications.
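A quick way to see the refresh problem is to compare the DHCP server’s requirements against the new hardware. This sketch treats the old server’s 800 MHz / 512 MB / 16 GB as the application’s requirement and assumes a quad-core 1 GHz, 1 GB, 136 GB box as the new minimal server, taken from the illustrative figures in the lists above. Adding per-core clock speeds into one number is a simplification, and the `Spec`, `share_of`, and `headroom` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Spec:
    cpu_mhz: int   # aggregate clock across all cores (a simplification)
    ram_mb: int
    disk_gb: int


def share_of(requirement: Spec, hardware: Spec) -> dict:
    """Fraction of each resource on `hardware` that `requirement` would occupy."""
    return {
        "cpu": requirement.cpu_mhz / hardware.cpu_mhz,
        "ram": requirement.ram_mb / hardware.ram_mb,
        "disk": requirement.disk_gb / hardware.disk_gb,
    }


def headroom(requirement: Spec, hardware: Spec) -> Spec:
    """Capacity left over once `requirement` is carved out of `hardware`."""
    return Spec(
        hardware.cpu_mhz - requirement.cpu_mhz,
        hardware.ram_mb - requirement.ram_mb,
        hardware.disk_gb - requirement.disk_gb,
    )


dhcp_needs = Spec(cpu_mhz=800, ram_mb=512, disk_gb=16)         # the old DHCP server
new_server = Spec(cpu_mhz=4 * 1000, ram_mb=1024, disk_gb=136)  # assumed quad-core 1 GHz box

for resource, fraction in share_of(dhcp_needs, new_server).items():
    print(f"{resource}: {fraction:.0%}")  # cpu: 20%, ram: 50%, disk: 12%
print(headroom(dhcp_needs, new_server))  # Spec(cpu_mhz=3200, ram_mb=512, disk_gb=120)
```

On a dedicated physical box that headroom simply sits idle; on a virtualized host it remains available to other VMs. Since the old server was itself oversized for DHCP, actual use would be lower still.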

Summary:

Server virtualization has many benefits in the data center, and as a result companies are adopting more and more virtualization every day. The overall reduction in overhead costs such as power, cooling, and space, coupled with increased hardware utilization, makes virtualization a no-brainer for most workloads. Depending on the virtualization platform chosen, there are additional benefits of increased uptime, distributed resource utilization, and increased manageability.
