**I earn a paycheck as a Principal Engineer for the Cisco business unit responsible for ACI, Nexus 9000, and Nexus 3000. This means you may want to simply consider anything I have to say on the subject biased and/or useless. Your call.**

I recently listened to a 30-minute SDN analysis from Greg Ferro, who considers himself a networking industry expert. In it he goes through his opinions of several SDN approaches and offers several guesses as to where things are headed. One thing that struck me during the recording was that he describes Cisco’s Application Centric Infrastructure (ACI) as a platform for lock-in. While he’s right about the platform part, he’s missing the mark on the lock-in. This is not an attack on Greg; if someone who considers themselves an in-the-know expert on the subject still believes this, maybe we haven’t gotten the message out in the right way. Let’s try to rectify that here.

What is ACI:

This is a bit of a tough question, because ACI really is an application connectivity platform. ACI can be:

- An automated network fabric that relieves admins of day-to-day route, VLAN, port, firmware, and similar provisioning.

- A security appliance that acts as a line-rate stateless firewall while automating virtual and physical service appliances from any vendor to provide advanced L4-7 services.

- A policy automation engine. This is the focus of this post.

ACI – A Policy Automation Engine:

Let’s start with a quick definition of policy for these purposes: policy is the set of requirements placed on data as it traverses the network. Policy can be viewed from two mindsets. Some examples of each are below; they are in no particular order and are not intended to match across columns.

| Business/Application Mindset | Infrastructure/Operations Mindset |
|---|---|
| Security | VLAN |
| Compliance | Subnet |
| Governance | Firewall Rule |
| Risk | Load-Balancing |
| Geo-dependency | ACL |
| Application Tiers | Port-config |
| SLAs | IPsec/MACsec |
| User Experience | Redundancy |

- There is no direct translation between business requirements and infrastructure capabilities/configuration.

- Today, policy is implemented through manual configuration on disparate devices, typically via a CLI.

As a policy automation engine, ACI aims to alleviate this by mapping application-level policy language, like the left column, to automated infrastructure provisioning using the constructs in the right column. Used this way, ACI can be deployed very differently from the network fabric it’s more traditionally thought of as.
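
To make the mapping from the left column to the right column concrete, here is a minimal Python sketch. It is not how ACI represents policy internally: the `AppPolicy` and `InfraConfig` structures, the tier names, the one-VLAN-and-one-/24-per-tier allocation, and the NX-OS-style ACL text are all hypothetical. It only shows how a couple of business-level statements ("web may reach app on TCP 8080") fan out into several infrastructure constructs that would otherwise be configured by hand.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Network


@dataclass
class AppPolicy:
    """Hypothetical business-level policy: application tiers and allowed flows."""
    name: str
    tiers: list[str]
    # (source tier, destination tier, TCP port) triples the business says are allowed
    allowed_flows: list[tuple[str, str, int]] = field(default_factory=list)


@dataclass
class InfraConfig:
    """Hypothetical infrastructure-level result: VLANs, subnets, and ACL lines."""
    vlans: dict[str, int]
    subnets: dict[str, IPv4Network]
    acl: list[str]


def translate(policy: AppPolicy, first_vlan: int, supernet: str) -> InfraConfig:
    """Map tiers to VLANs/subnets and allowed flows to ACL lines (illustrative only)."""
    # Carve one /24 per tier out of the supplied supernet.
    blocks = IPv4Network(supernet).subnets(new_prefix=24)
    vlans: dict[str, int] = {}
    subnets: dict[str, IPv4Network] = {}
    for offset, tier in enumerate(policy.tiers):
        vlans[tier] = first_vlan + offset
        subnets[tier] = next(blocks)

    acl: list[str] = []
    for src, dst, port in policy.allowed_flows:
        acl.append(f"permit tcp {subnets[src]} {subnets[dst]} eq {port}")
    # Everything not explicitly allowed between tiers is denied.
    acl.append("deny ip any any")
    return InfraConfig(vlans, subnets, acl)


if __name__ == "__main__":
    shop = AppPolicy(
        name="web-shop",
        tiers=["web", "app", "db"],
        allowed_flows=[("web", "app", 8080), ("app", "db", 5432)],
    )
    cfg = translate(shop, first_vlan=100, supernet="10.1.0.0/16")
    for tier in shop.tiers:
        print(tier, cfg.vlans[tier], cfg.subnets[tier])
    print("\n".join(cfg.acl))
```

Even this toy version derives VLANs, subnets, and ACL entries from two lines of intent; adding a tier or a flow changes the input rather than hand-written device configuration.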

The lack of understanding around this model for ACI stems from three misconceptions:

- ACI requires all Cisco Nexus 9000 hardware.

- ACI cannot integrate with other switching products.

- ACI’s integration with L4-7 service devices requires management of those devices.

With that in mind, let’s take a look at deploying ACI into an existing data center switching infrastructure as a policy automation appliance.

ACI Requires all Nexus 9000 Hardware:

ACI does not require all devices to be Nexus 9000 based. ACI is built on a combination of hardware and software, a balanced approach that does each job where it is done best: software control systems provide agility, while hardware accelerates that software and provides performance, scale, and so on. Because of this, ACI requires only a small set of controller and switching components. This hardware does not need to replace any existing hardware; it can simply be added as part of a natural data center expansion and then integrated into the existing network. In fact, this is how the majority of ACI customers are deploying ACI today.

Here is a breakdown of the ACI requirements for a production environment:

- A controller cluster consisting of 3 Application Policy Infrastructure Controller (APIC) appliances.

- 2x spine switches, which can be Nexus 9336 ACI spine switches with 36x 40G ports.

- 2x leaf switches, which can be Nexus 9372 switches with 48x 10G and 6x 40G ports.

That is the complete requirement, both initial and final, for using ACI as a policy automation engine. From there, policy on any network equipment can be automated. The system can then optionally scale with more Nexus 9000 switches, or other switching products can continue to provide connectivity.
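
The minimal production footprint listed above can be expressed as a simple inventory check. This is an illustrative sketch only: the `Inventory` record and the `meets_minimum` helper are hypothetical, and the thresholds just restate the list (3 APICs, 2 spines, 2 leaves). Existing switches from any vendor are recorded but deliberately ignored, since the requirement does not involve replacing them.

```python
from dataclasses import dataclass, field

# Minimums for a production ACI deployment, restated from the list above.
MIN_APICS = 3
MIN_SPINES = 2
MIN_LEAVES = 2


@dataclass
class Inventory:
    """Hypothetical inventory of the gear being added for ACI."""
    apics: int
    spines: int
    leaves: int
    # Existing switches from any vendor; they are integrated, not replaced.
    existing_switches: list[str] = field(default_factory=list)


def meets_minimum(inv: Inventory) -> list[str]:
    """Return a list of shortfalls; an empty list means the minimum is met."""
    problems = []
    if inv.apics < MIN_APICS:
        problems.append(f"need {MIN_APICS} APICs, have {inv.apics}")
    if inv.spines < MIN_SPINES:
        problems.append(f"need {MIN_SPINES} spines, have {inv.spines}")
    if inv.leaves < MIN_LEAVES:
        problems.append(f"need {MIN_LEAVES} leaves, have {inv.leaves}")
    # existing_switches is intentionally not checked: nothing there must be replaced.
    return problems


if __name__ == "__main__":
    brownfield = Inventory(apics=3, spines=2, leaves=2,
                           existing_switches=["vendor-x-agg-1", "vendor-x-agg-2"])
    print(meets_minimum(brownfield) or "minimum ACI footprint met")
```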

Integrating ACI with other switching products:

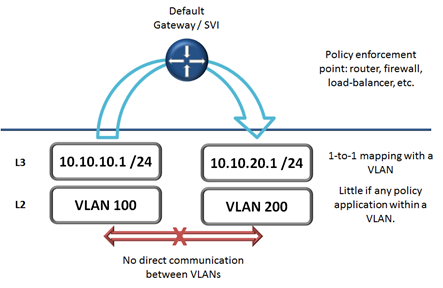

First, we need to understand where policy is enforced on a traditional network. For reference take a look at the graphic below.

On a traditional data center network, we group connected objects using Layer 2 VLANs, which provide approximately 4,000 groups or segments depending on implementation. Within these VLAN groups most, if not all, communication is allowed by default (no policy is enforced). We then attach a single subnet, a Layer 3 object, to each VLAN. When traffic moves between VLANs it is also moving between subnets, which requires a routing point: the default gateway. This default gateway is where policy is enforced. Many different devices can act as the default gateway, but whichever device fills that role is the most typical policy enforcement point.
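
The traditional enforcement model described above can be captured in a few lines of Python. In this hypothetical sketch (the VLAN numbers, subnets, and permit table are invented), each VLAN carries exactly one subnet, traffic within a VLAN is allowed by default, and only inter-VLAN traffic reaches the default gateway, where a permit table is checked. For simplicity the check is directional and stateless. The sketch also shows where the figure of roughly 4,000 groups comes from: the 802.1Q VLAN ID is 12 bits, and IDs 0 and 4095 are reserved.

```python
from ipaddress import IPv4Address, IPv4Network

# 802.1Q VLAN IDs are 12 bits; 0 and 4095 are reserved, leaving 4094 usable segments.
USABLE_VLANS = 2**12 - 2

# Hypothetical segment plan: one subnet per VLAN.
VLAN_SUBNETS = {
    10: IPv4Network("10.0.10.0/24"),
    20: IPv4Network("10.0.20.0/24"),
}

# Hypothetical gateway policy: (source VLAN, destination VLAN) pairs that are permitted.
GATEWAY_PERMITS = {(10, 20)}


def vlan_of(ip: str) -> int:
    """Find the VLAN whose subnet contains this address."""
    addr = IPv4Address(ip)
    for vlan, net in VLAN_SUBNETS.items():
        if addr in net:
            return vlan
    raise ValueError(f"{ip} is not in any known subnet")


def is_allowed(src_ip: str, dst_ip: str) -> tuple[bool, str]:
    """Return (allowed, where the decision was made)."""
    src_vlan, dst_vlan = vlan_of(src_ip), vlan_of(dst_ip)
    if src_vlan == dst_vlan:
        # Same VLAN, same subnet: switched directly, no policy enforced.
        return True, "intra-VLAN (no enforcement)"
    # Different VLANs means different subnets, so traffic is routed via the default gateway.
    return (src_vlan, dst_vlan) in GATEWAY_PERMITS, "default gateway"


if __name__ == "__main__":
    print(USABLE_VLANS)
    print(is_allowed("10.0.10.5", "10.0.10.6"))
    print(is_allowed("10.0.10.5", "10.0.20.6"))
    print(is_allowed("10.0.20.6", "10.0.10.5"))
```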

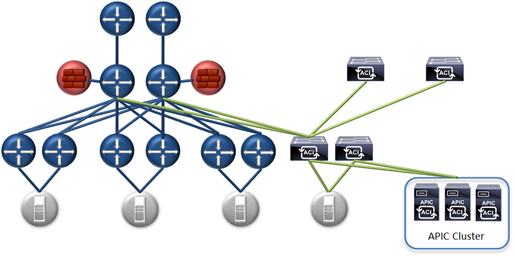

The way traditional networks handle this policy enforcement point is at the Aggregation Layer of a physical or logical 3-tier network design. The diagram below depicts this.

Depending on the design, the L3 boundary (default gateway) may be a router or an L4-7 appliance such as a firewall. This is the policy enforcement point within traditional network designs: for traffic to move between groupings/segments (VLANs), it must traverse this boundary, where policy is enforced. It is exactly at this point that ACI is inserted as a policy automation engine, integrated with the existing infrastructure. The diagram below shows one example of this.

In the diagram, the minimal production ACI components (a controller cluster, 2x spine switches, and 2x leaf switches) are attached to the existing network at the aggregation layer (green links depict new links). From there, the only requirements for using ACI as the policy automation engine for the entire network are to trunk the VLANs to the ACI leaf switches and to move the default gateway (the policy enforcement point shown above) to ACI. ACI can then automate policy for the network as a whole.
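
The two migration steps named above (trunk the VLANs to the ACI leaf switches, then move the default gateway) can be sketched as a plan over the existing segments. Everything here is hypothetical: the `Segment` record, the `migrate` and `enforcement_point` helpers, the device names, and the step wording. The point it illustrates is that the access switches never appear; only the trunks and the gateway location change, and once the gateway lives in ACI, inter-VLAN traffic is enforced there.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One existing VLAN/subnet pair and the device currently acting as its gateway."""
    vlan: int
    subnet: str
    gateway: str


def migrate(segments: list[Segment], aci_leaves: list[str]) -> list[str]:
    """Record the migration steps and point each segment's gateway at ACI.

    Access-layer switches do not appear anywhere: they keep their existing config.
    """
    steps = []
    # Step 1: extend every VLAN to each ACI leaf over the existing aggregation trunks.
    vlan_list = ",".join(str(s.vlan) for s in segments)
    for leaf in aci_leaves:
        steps.append(f"trunk VLANs {vlan_list} from aggregation to {leaf}")
    # Step 2: move each default gateway (the policy enforcement point) into ACI.
    for s in segments:
        steps.append(f"move gateway for VLAN {s.vlan} ({s.subnet}) from {s.gateway} to ACI")
        s.gateway = "ACI"
    return steps


def enforcement_point(segments: list[Segment], src_vlan: int, dst_vlan: int) -> str:
    """Simplification: inter-VLAN policy is enforced at the source segment's gateway."""
    if src_vlan == dst_vlan:
        return "none (intra-VLAN)"
    return next(s.gateway for s in segments if s.vlan == src_vlan)


if __name__ == "__main__":
    segs = [Segment(10, "10.0.10.0/24", "agg-1"), Segment(20, "10.0.20.0/24", "agg-1")]
    print("before:", enforcement_point(segs, 10, 20))
    for step in migrate(segs, ["leaf-101", "leaf-102"]):
        print(step)
    print("after:", enforcement_point(segs, 10, 20))
```

Moving gateways one segment at a time in this way would let each VLAN be cut over independently, which fits the migration-or-permanent choice described next.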

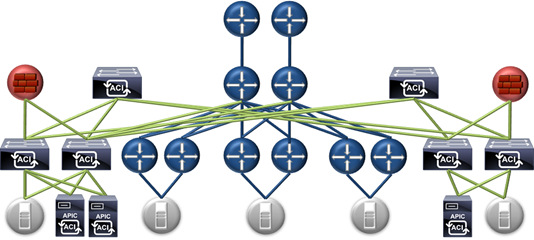

This can be used as a migration strategy or as a permanent solution. There is no requirement to migrate all switches to Nexus 9000, or even to Cisco switches, either up front or over time. A customer can easily maintain a dual-vendor strategy while using ACI. Adopting more Nexus 9000 switches as needed brings many benefits, but that is always a decision each organization makes based on the pros and cons it sees.

The diagram above is a logical depiction of how ACI would be added to an existing network to automate policy enforcement for the entire network. The diagram below shows the same thing in a different way, which helps visualize ACI as a service appliance that automates policy. The only additional changes are that the firewalls have been re-cabled to the ACI leaf switches for traffic optimization, and a second pair of ACI leaf switches has been added for visual symmetry.

In this model, ACI is not ‘managing’ the existing switches; it is automating policy network-wide. Policy is the most frequent change point, if not the only one, so it is the point that requires automation. The existing infrastructure is already in place, configured, and working. There is no need to start managing it differently, because that is not where agility comes from.

ACI’s Integration with L4-7 service devices requires management of those devices:

This is one of the more interesting aspects of the way ACI integrates with other systems. With most solutions, when you add a control/management system it takes complete control of all devices and needs them at a default or baseline configuration. ACI operates on a different control model, which allows it to integrate with a device and pass instructions to it without needing to fully manage it. What this means is that ACI can integrate with L4-7 devices already in place, configured, and in use. ACI makes no assumptions of existing configuration, and simply passes commands to be implemented on those devices for new configurations in ACI. Additionally, these commands are implemented natively on the device.

This means there is no lock-in at all from integrating ACI with existing devices, or from adding new virtual/physical appliances and integrating them with ACI. To put it more succinctly:

- ACI can integrate with existing L4-7 devices such as firewalls, without the need for config change or migration.

- ACI can be removed from a network after integration with no need for config change or migration on L4-7 devices. This is because the configuration is native to the device, and the device is never ‘managed’ by ACI. The device is simply used programmatically while ACI is in place.
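
The difference between managing a device and passing commands to it can be sketched as a small model of the two points above. This is hypothetical and is not the actual ACI L4-7 integration interface: the `Firewall` and `PolicyController` classes, their methods, and the rule strings are invented for illustration. The controller never wipes the device or imposes a baseline; it only adds new rules in the device’s native form, and detaching it leaves every rule, old and new, on the device.

```python
class Firewall:
    """Hypothetical L4-7 device holding its own native rule set."""

    def __init__(self, name: str, existing_rules: list[str]):
        self.name = name
        self.rules = list(existing_rules)  # pre-existing, hand-built configuration

    def apply(self, rule: str) -> None:
        # Commands arrive in the device's native form and are stored like any other rule.
        if rule not in self.rules:
            self.rules.append(rule)


class PolicyController:
    """Hypothetical controller that passes commands to devices without owning them."""

    def __init__(self):
        self.devices: list[Firewall] = []

    def attach(self, device: Firewall) -> None:
        # No wipe or baseline step: existing configuration is left exactly as found.
        self.devices.append(device)

    def push(self, rule: str) -> None:
        for device in self.devices:
            device.apply(rule)

    def detach_all(self) -> None:
        # Removing the controller only drops its references; device config is untouched.
        self.devices.clear()


if __name__ == "__main__":
    fw = Firewall("fw-1", ["permit tcp any host 192.0.2.10 eq 443"])
    ctrl = PolicyController()
    ctrl.attach(fw)
    ctrl.push("permit tcp 10.0.10.0/24 10.0.20.0/24 eq 8080")
    ctrl.detach_all()
    print(fw.rules)
```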

Summary:

ACI is a platform for deploying applications on the network, not a platform for lock-in. In fact, it is designed as a completely open system using standards-based protocols, open northbound and southbound APIs, and open third-party device integration. It can be used as:

- An automated pool of network resources, similar to what UCS does for servers.

- A security and compliance engine.

- A policy automation engine as described above.
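
The point about open northbound APIs can be illustrated with the APIC REST interface. The sketch below only builds and prints a JSON body; it does not contact a controller. The object classes (`fvTenant`, `fvCtx`, `fvBD`, `fvAp`, `fvAEPg`, `vzFilter`, `vzBrCP`, and their relation objects) follow the APIC management information model as it appears in Cisco’s published REST examples, but treat them as something to verify against the model reference for your APIC release. The `mo` and `web_app_tenant` helpers, the tenant, VRF, bridge domain, EPG, filter, and contract names, and the TCP 8080 port are all hypothetical. A body like this would typically be POSTed to `https://<apic>/api/mo/uni.json` after logging in via `/api/aaaLogin.json`.

```python
import json
from typing import Optional


def mo(cls: str, children: Optional[list] = None, **attributes: str) -> dict:
    """Build one managed object in the APIC REST JSON shape: {class: {attributes, children}}."""
    body: dict = {"attributes": attributes}
    if children:
        body["children"] = children
    return {cls: body}


def web_app_tenant(tenant: str) -> dict:
    """Hypothetical two-tier tenant: EPG 'web' consumes a contract that EPG 'app' provides."""
    # Filter allowing TCP/8080 (name and port are illustrative).
    tcp_8080_filter = mo("vzFilter", [
        mo("vzEntry", name="tcp-8080", etherT="ip", prot="tcp",
           dFromPort="8080", dToPort="8080"),
    ], name="tcp-8080")
    # Contract with one subject that references the filter.
    contract = mo("vzBrCP", [
        mo("vzSubj", [mo("vzRsSubjFiltAtt", tnVzFilterName="tcp-8080")], name="app-traffic"),
    ], name="web-to-app")
    # VRF and bridge domain that the EPGs attach to.
    vrf = mo("fvCtx", name="vrf1")
    bd = mo("fvBD", [mo("fvRsCtx", tnFvCtxName="vrf1")], name="bd1")
    # Application profile with two EPGs wired to the contract.
    app = mo("fvAp", [
        mo("fvAEPg", [mo("fvRsBd", tnFvBDName="bd1"),
                      mo("fvRsCons", tnVzBrCPName="web-to-app")], name="web"),
        mo("fvAEPg", [mo("fvRsBd", tnFvBDName="bd1"),
                      mo("fvRsProv", tnVzBrCPName="web-to-app")], name="app"),
    ], name="shop")
    return mo("fvTenant", [vrf, bd, tcp_8080_filter, contract, app], name=tenant)


if __name__ == "__main__":
    print(json.dumps(web_app_tenant("demo"), indent=2))
```

Because the payload is plain JSON describing intent (which group may talk to which, and on what port), any tool that can issue HTTP requests can drive it; nothing in the body is tied to a particular client library.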

There is plenty of information available on ACI; take some time to get an idea of what it can do for you.

Really enjoyable, concise post. One thing in particular seemed very interesting:

“What this means is that ACI can integrate with L4-7 devices already in place, configured, and in use. ACI makes no assumptions of existing configuration, and simply passes commands to be implemented on those devices for new configurations in ACI”

Would love to see a follow up post on how this is implemented.

Hi, great post. If the ACI solution is hosted in an ISP public cloud, do you consider it best to connect the PE router to the spine? Should the PE router support VXLAN? Can we use a single BGP session for multiple VRFs and tenants?

Thanks.