Note: I have made updates to reflect that Virtual Connect is two words, along with technical changes explaining another method of network configuration within Virtual Connect that prevents the port blocking described below. Many thanks to Ken Henault at HP, who graciously walked me through the corrections and beat them into my head until I understood them.

I’m sitting on a flight from Honolulu to Chicago en route home after a trip to Hawaii for customer briefings. I was hoping to be asleep at this point, but a comment Ken Henault left on my ‘FlexFabric – Small Step, Right Direction’ post is keeping me awake… that’s actually a lie. I’m awake because I’m cramped into a coach seat for 8 hours while my fiancé, who joined me for a couple of days, enjoys the first-class luxuries of my auto upgrade. All the comfort in the world wouldn’t have made up for the looks I’d have gotten when we landed if I had been the one up front.

So, being that I’m awake anyway, I thought I’d address the comment from the aforementioned post. Before I begin, I want to clarify that my last post had nothing to do with UCS. I intentionally left UCS out because it was designed with FCoE in mind from the start, so it has native advantages in an FCoE environment. Additionally, within UCS you can’t get away from FCoE: if you want Fibre Channel connectivity you’re using FCoE, so it’s not a choice to be made (iSCSI, NFS, and others are supported, but to connect to FC devices or storage it’s FCoE). The blog was intended to state exactly what it did: HP has made a real step into FCoE with FlexFabric, but there is still a long way to go. To see the original post, click the link (http://www.definethecloud.net/?p=419).

I’ve got a great deal of respect for both Ken and HP, for whom he works. Ken knows his stuff; our views may differ occasionally, but he definitely gets the technology. The fact that Ken knows HP blades inside, outside, backwards and forwards, and has a strong grasp of Cisco’s UCS, made his comment even more intriguing to me, because it highlights weak spots in the overall understanding of both UCS and server architecture/design as it pertains to network connectivity.

Scope:

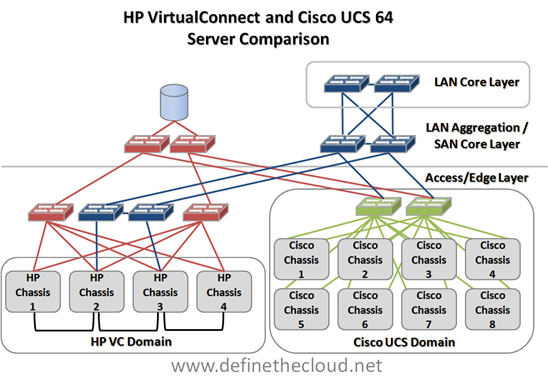

This post will cover the networking comparison of HP C-Class using Virtual Connect (VC) modules and VC management against the Cisco UCS Blade System. This is the closest ‘apples-to-apples’ comparison that can be made between Cisco UCS and HP C-Class. Additionally, I will be comparing the maximum number of blades in a single HP VC domain, which is 64 (4 chassis x 16 blades), against 64 UCS blades, which would require 8 chassis.

Accuracy and Objectivity:

It is not my intent to use numbers favorable to one vendor or the other. I will be as technically accurate as possible throughout, and I welcome all feedback, comments and corrections from both sides of the house.

HP Virtual Connect:

VC is an advanced management system for HP C-Class blades that allows 4 blade chassis to be interconnected and managed/networked as a single system. To provide this functionality, the LAN/SAN switch modules used must be VC modules, and the chassis must be interconnected by what HP calls a stacking-link. HP does not consider VC Ethernet modules to be switches, but for the purpose of this discussion they will be. I make this decision because they make switching decisions and they are the same hardware as the ProCurve line of blade switches.

Note: this is a networking discussion so while VC has other features they are not discussed here.

Let’s take a graphical view of a 4-chassis VC domain.

In the above diagram we see a single VC domain cabled for LAN and SAN connectivity. You can see that each chassis is independently connected to SAN A and B for Fibre Channel access, but Ethernet traffic can traverse the stacking-links along with the domain management traffic. This allows fewer uplinks to be used from each 4-chassis VC domain to the network. This solution utilizes 13 total links to provide 16 Gbps of FC per chassis (assuming 8Gb FC uplinks) and 20 Gbps of Ethernet for the entire VC domain (with the blocking considerations discussed below). More links could be added to provide additional bandwidth.
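
One way to reproduce the 13-link figure is the back-of-the-envelope count below. It is a sketch under my own assumptions rather than a reading of the diagram: two FC uplinks per chassis (SAN A and SAN B), two 10GE uplinks shared by the domain, and three stacking-links daisy-chaining the four chassis. If the stacking-links are cabled differently, the total changes accordingly.

```python
# Hypothetical breakdown of the 13-link minimal VC domain described above.
# ASSUMPTIONS (not stated in the post): one 8Gb FC uplink per chassis to
# SAN A and one to SAN B, two 10GE uplinks for the whole domain, and the
# four chassis daisy-chained with one stacking-link between neighbours.

FC_LINKS_PER_CHASSIS = 2   # SAN A + SAN B
FC_GBPS = 8
ETH_UPLINKS = 2            # shared by the whole VC domain
ETH_GBPS = 10


def vc_domain_links(chassis: int = 4) -> dict:
    """Return link counts and bandwidth for a minimal redundant VC domain."""
    fc_links = chassis * FC_LINKS_PER_CHASSIS
    stacking_links = chassis - 1  # linear daisy chain (assumed topology)
    return {
        "fc_links": fc_links,
        "eth_uplinks": ETH_UPLINKS,
        "stacking_links": stacking_links,
        "total_links": fc_links + ETH_UPLINKS + stacking_links,
        "fc_gbps_per_chassis": FC_LINKS_PER_CHASSIS * FC_GBPS,
        "eth_gbps_per_domain": ETH_UPLINKS * ETH_GBPS,
    }


print(vc_domain_links())
```

Under these assumptions the count is 8 FC links + 2 Ethernet uplinks + 3 stacking-links = 13.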

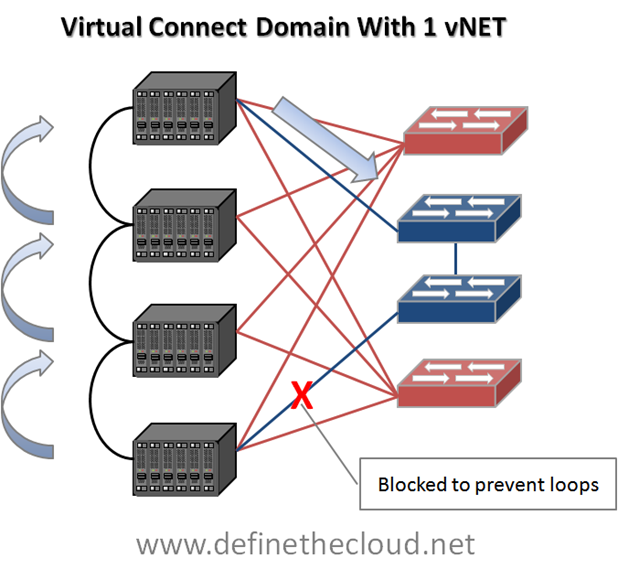

This method of management and port reduction does not come without its drawbacks. In the next graphic I add loop prevention and server to server communication.

The first thing to note in the above diagram is the blocked link. When only a single vNet is configured across the VC domain (1-4 chassis), only one link or link aggregation group may forward per VLAN. This means that per VC domain there is only one ingress or egress point to the network per VLAN. This is because VC is not ‘stacking’ 4 switches into one distributed switch control plane but instead ‘daisy-chaining’ four independent switches together using an internal loop prevention mechanism. To prevent loops within the VC domain, only one link can be actively used for upstream connectivity per VLAN.

Because of this loop prevention system you will see multiple hops for frames destined between servers in separate chassis, as well as for frames destined upstream in the network. In the diagram I show a worst-case scenario for educational purposes, where a frame from a server in the lower chassis must hop three times before leaving the VC domain. Proper design and consideration would reduce this to a maximum of two hops per VC domain.
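
The hop counts above can be modeled with a small sketch. It assumes a hypothetical linear daisy chain of four chassis and counts a "hop" as one stacking-link crossed on the way to the chassis that owns the single forwarding uplink. Under those assumptions it reproduces both the three-hop worst case and the two-hop maximum achievable by placing the uplink on a middle chassis.

```python
# Hypothetical model of a single-vNet VC domain. ASSUMPTIONS: chassis are
# daisy-chained in a line (index 0 = top), exactly one uplink forwards for
# the VLAN, and a "hop" means one stacking-link crossed before exiting.

def stacking_hops(server_chassis: int, uplink_chassis: int) -> int:
    """Stacking-links crossed to reach the chassis holding the active uplink."""
    return abs(server_chassis - uplink_chassis)


def worst_case_hops(num_chassis: int, uplink_chassis: int) -> int:
    """Largest hop count seen by any chassis in the domain."""
    return max(stacking_hops(c, uplink_chassis) for c in range(num_chassis))


def best_uplink_placement(num_chassis: int) -> int:
    """Chassis index whose uplink minimizes the worst-case hop count."""
    return min(range(num_chassis), key=lambda u: worst_case_hops(num_chassis, u))


print(worst_case_hops(4, uplink_chassis=0))  # uplink on the top chassis
best = best_uplink_placement(4)
print(best, worst_case_hops(4, best))        # uplink on a middle chassis
```

With the uplink on the top chassis the bottom chassis crosses three stacking-links; moving it to the second chassis caps every path at two.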

**Update**

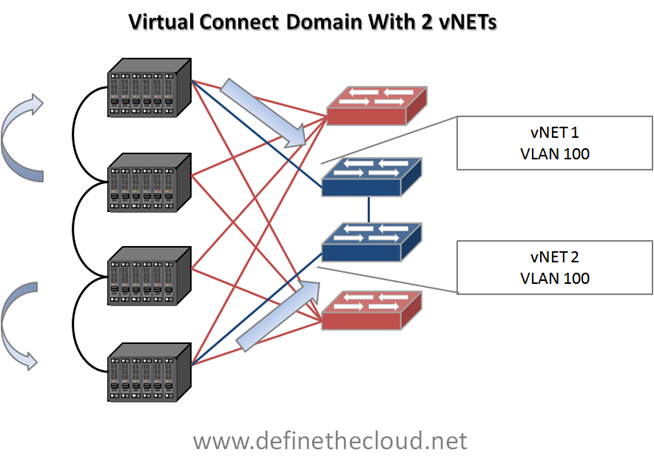

This is only one of the methods available for configuring vNets within a VC domain. The second method places the two uplinks in separate vNets, which allows each uplink to be utilized even when both carry the same VLAN, while segregating that VLAN internally. The following diagram shows this configuration.

In this configuration, server NIC pairs are configured so that each NIC uses one vNet, and NIC teaming software provides failover. Even though both vNets use the same VLAN, the networks remain separate internally, which prevents looping, upstream MAC address instability, etc. For example, a server utilizing only two onboard NICs would have one NIC in vNet1 and one in vNet2. In the event of an uplink failure for vNet1, the NIC in that vNet would have no north/south access, but NIC teaming software could be relied upon to force traffic to the NIC in vNet2.

While both methods have advantages and disadvantages this will typically be the preferred method to avoid link blocking and allow better bandwidth utilization. In this configuration the center two chassis will still require an extra one or two hops to send/receive north/south traffic depending on which vNet is being used.
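
The extra hops for the center chassis can be checked with the same kind of model. The assumptions are mine, not taken from the diagram: four chassis in a line, vNet1's uplink on the top chassis, vNet2's uplink on the bottom chassis, and one hop per stacking-link crossed.

```python
# Hypothetical model of the two-vNet design. ASSUMPTIONS: four chassis in a
# line (index 0 = top), vNet1's uplink on chassis 0 and vNet2's uplink on
# chassis 3, and a "hop" means one stacking-link crossed before exiting.

VNET_UPLINK_CHASSIS = {"vNet1": 0, "vNet2": 3}


def extra_hops(server_chassis: int, vnet: str) -> int:
    """Stacking-links crossed before reaching the uplink of the given vNet."""
    return abs(server_chassis - VNET_UPLINK_CHASSIS[vnet])


for chassis in (1, 2):  # the two center chassis
    hops = {vnet: extra_hops(chassis, vnet) for vnet in VNET_UPLINK_CHASSIS}
    print(f"chassis {chassis}: {hops}")
```

Each center chassis sees one extra hop through its nearer vNet and two through the farther one, which is why the choice of vNet per NIC matters.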

**End Update**

The last thing to note is that any Ethernet cable reduction will also result in lower available bandwidth for upstream/northbound traffic to the network. For instance, in the single-vNet example above only one link will be usable per VLAN. Assuming 10GE links, that leaves 10 Gbps of upstream bandwidth for 64 servers. Whether that is acceptable or not depends on the particular I/O profile of the applications. Additional links may need to be added to provide adequate upstream bandwidth. That brings us to our next point:
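
To put that number in perspective, a worst-case fair share, assuming all 64 servers push northbound traffic at the same moment (which real workloads rarely do), is simply the active uplink bandwidth divided by the server count:

```python
def upstream_share_gbps(active_uplinks: int, link_gbps: float, servers: int) -> float:
    """Per-server northbound bandwidth if every server transmits at once."""
    return active_uplinks * link_gbps / servers


# One forwarding 10GE uplink per VLAN shared by a full 64-server VC domain.
print(upstream_share_gbps(active_uplinks=1, link_gbps=10, servers=64))  # 0.15625
```

That is roughly 156 Mbps per server; doubling the forwarding uplinks doubles the share.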

Calculating bandwidth needs:

Before making a decision on bandwidth requirements it is important to note the actual characteristics of your applications. Some key metrics to help in design are:

- Peak bandwidth
- Average bandwidth
- East/West traffic
- North/South traffic

For instance, using the example above, if all of my server traffic is East/West within a single chassis, then the upstream link constraints mentioned are moot points. If the traffic must traverse multiple chassis, the stacking-link must be considered. Lastly, if traffic must also move between chassis as well as North/South to the network, uplink bandwidth becomes critical. With networks it is common to under-architect and over-engineer, meaning spend less time designing and throw more bandwidth at the problem; this does not provide the best results at the right cost.
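
One way to turn those metrics into an uplink count is sketched below. The `ServerProfile` type and the sizing rule are hypothetical: North/South demand is summed across servers and divided by link speed, while East/West traffic is left out on the assumption that it stays within the access layer and never touches the uplinks. Summing per-server peaks is deliberately conservative, since peaks rarely coincide.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerProfile:
    """Hypothetical per-server measurements taken from the application."""
    peak_ns_gbps: float  # peak North/South traffic
    avg_ns_gbps: float   # average North/South traffic


def uplinks_needed(profiles, link_gbps=10.0, use_peak=True, min_links=2):
    """Uplinks required to carry aggregate North/South demand.

    East/West traffic is excluded because it is assumed to stay below the
    uplinks; min_links keeps a redundant pair even for light demand.
    """
    demand = sum(p.peak_ns_gbps if use_peak else p.avg_ns_gbps for p in profiles)
    return max(min_links, math.ceil(demand / link_gbps))


# Made-up profile: 64 servers, 0.5 Gbps peak and 0.1 Gbps average North/South.
servers = [ServerProfile(peak_ns_gbps=0.5, avg_ns_gbps=0.1) for _ in range(64)]
print(uplinks_needed(servers))                  # sized for peak
print(uplinks_needed(servers, use_peak=False))  # sized for average
```

In this example sizing for peak calls for four 10GE uplinks, while sizing for average drops to the redundant minimum of two, which is exactly the architecting-versus-engineering trade-off described above.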

Cisco Unified Computing System:

Cisco UCS takes a different approach to providing I/O to the blade chassis. Rather than placing managed switches in the chassis, UCS uses a device called an I/O Module or Fabric Extender (IOM/FEX), which does not make switching decisions and instead passes traffic based on an internal pinning mechanism. All switching is handled by the upstream Fabric Interconnects (UCS 6120 or 6140). Some will say the UCS Fabric Interconnect is ‘not a switch’, but using the same logic I applied to the HP VC devices above, the Fabric Interconnect is definitely a switch: in both operational modes it makes forwarding decisions based on MAC address.

One major architectural difference between UCS and the HP, Dell, IBM and Sun blade implementations is that the switching and management components are stripped from the individual chassis and handled in the middle of the row by a redundant pair of devices (the Fabric Interconnects). These devices replace the LAN access and SAN edge ports that other vendors’ blade devices connect to. Another architectural difference is that UCS never blocks server links to prevent loops (all links are active from the chassis to the interconnects), and in the default mode, End Host mode, it will not block any upstream links to the network core. For more detail on these features see my posts Why UCS is my ‘A-Game’ Server Architecture (http://www.definethecloud.net/?p=301) and UCS Server Failover (http://www.definethecloud.net/?p=359).

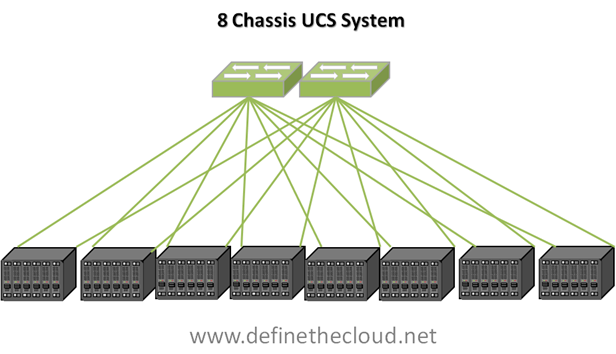

A single UCS implementation can scale to a maximum of 40 chassis (320 servers) using a minimal bandwidth configuration, or 10 chassis (80 servers) using maximum bandwidth, depending on requirements. There is also flexibility to mix and match bandwidth between chassis. Current firmware limits a single implementation to 12 chassis (96 servers) for support, and this limit increases with each major release. Let’s take a look at the 8-chassis, 64-server implementation for comparison to an equal HP VC domain.
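
The 40-chassis and 10-chassis figures follow from port arithmetic. A minimal sketch, assuming a UCS 6140 with 40 fixed 10GE ports all used as chassis-facing ports (uplinks taken from expansion modules) and an IOM that supports 1, 2 or 4 fabric links; it does not model the firmware support limit mentioned above.

```python
# ASSUMPTIONS: each Fabric Interconnect has 40 fixed ports, all facing
# chassis; each chassis has one IOM per fabric; every IOM uses the same
# number of fabric links (1, 2 or 4). The firmware support limit is ignored.

FI_FIXED_PORTS = 40
BLADES_PER_CHASSIS = 8  # half-width blades


def max_chassis(links_per_iom: int, fi_ports: int = FI_FIXED_PORTS) -> int:
    """Chassis one Fabric Interconnect pair can attach at a given link count."""
    if links_per_iom not in (1, 2, 4):
        raise ValueError("IOM assumed to support 1, 2 or 4 fabric links")
    return fi_ports // links_per_iom


for links in (1, 2, 4):
    chassis = max_chassis(links)
    print(f"{links} link(s) per IOM: {chassis} chassis, "
          f"{chassis * BLADES_PER_CHASSIS} servers")
```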

In the diagram above we see an 8-chassis, 64-server implementation utilizing the minimum number of links per chassis to provide redundancy (the same as was done in the HP example above). Here we utilize 16 links for 8 chassis, providing 20 Gbps of LAN and SAN traffic to each chassis. Because no blocking is required for loop prevention, all links shown are active. Additionally, because the Fabric Interconnects shown here in green are the access/edge switches for this topology, all east/west traffic between servers in a single chassis or across chassis is fully accounted for. Depending on bandwidth requirements, additional uplinks could be added to each chassis. Lastly, no additional management cables would be required from the interconnects to the chassis, as all management is handled on dedicated, prioritized internal VLANs.
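
The link and bandwidth numbers for this 8-chassis system, plus the per-chassis oversubscription they imply, can be derived the same way. This sketch assumes one 10G link per IOM, two IOMs (fabrics A and B) per chassis, and one 10G port per blade per fabric.

```python
# ASSUMPTIONS: minimal redundant cabling, i.e. one 10G link per IOM, one IOM
# per fabric, and each half-width blade presenting one 10G port per fabric.

CHASSIS = 8
IOMS_PER_CHASSIS = 2   # fabric A and fabric B
LINKS_PER_IOM = 1
LINK_GBPS = 10
BLADES_PER_CHASSIS = 8

total_links = CHASSIS * IOMS_PER_CHASSIS * LINKS_PER_IOM
gbps_per_chassis = IOMS_PER_CHASSIS * LINKS_PER_IOM * LINK_GBPS
# Eight blades share each IOM's single 10G link on that fabric.
iom_oversubscription = (BLADES_PER_CHASSIS * LINK_GBPS) / (LINKS_PER_IOM * LINK_GBPS)

print(total_links, gbps_per_chassis, iom_oversubscription)
```

This gives 16 links, 20 Gbps per chassis, and 8:1 at each IOM; adding IOM links lowers the ratio toward 2:1.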

In the system above all traffic is aggregated upstream via the two Fabric Interconnects. This means that North/South traffic is accounted for by calculating the bandwidth needs of the entire system and designing the appropriate number of links.

Side by Side Comparison:

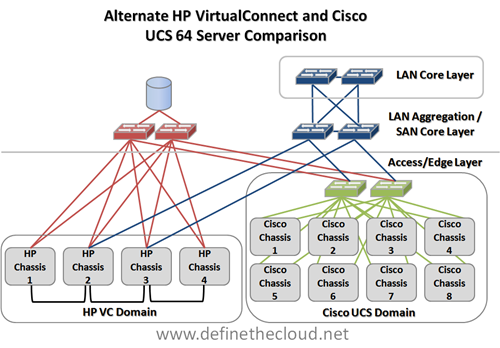

In the diagram we see a maximum server scale VC Domain compared to an 8 chassis UCS domain. The diagram shows both domains connected up to a shared two-tier SAN design (core/edge) and 3 tier network design (Access, Aggregation, Core.) In the UCS domain all access layer connectivity is handled within the system.

In the next diagram we look at an alternative connectivity method for the HP VC domain utilizing the switch modules in the HP chassis as the access layer to reduce infrastructure.

In this method we have reduced the switching infrastructure by utilizing the onboard switching of the HP chassis as the access layer. The issue here will be the bandwidth requirements and port costs at the LAN aggregation/SAN core. Depending on application bandwidth requirements, additional aggregation/core ports will be required, which can be more costly and complex than access connectivity. Additionally, this will increase cabling length requirements in order to tie back to the data center aggregation/core layer.

Summary:

When comparing UCS to HP blade implementations a base UCS blade implementation is best compared against a single VC domain in order to obtain comparable feature parity. The total port and bandwidth counts from the chassis for a minimum redundant system are:

| | HP | Cisco |
|---|---|---|
| Total uplinks | 13 | 16 |
| Gbps FC | 16 per chassis | N/A |
| Gbps Ethernet | 10 per VLAN per VC domain / 20 total | N/A |
| Consolidated I/O | N/A | 20 per chassis |
| Total chassis I/O | 21 Gbps for 16 servers | 20 Gbps for 8 servers |
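
The last row of the table can be normalized per server. A sketch, assuming the HP domain's 20 Gbps of Ethernet is split evenly across its 4 chassis, which is how 16 Gbps FC + 5 Gbps Ethernet = 21 Gbps per chassis appears to arise; it ignores the per-VLAN blocking discussed above, so the HP figure is a best case.

```python
# ASSUMPTION: the VC domain's Ethernet uplink bandwidth is shared evenly by
# its chassis; per-VLAN blocking is not modeled.

def hp_chassis_io_gbps(fc_per_chassis=16, eth_per_domain=20, chassis_per_domain=4):
    """FC per chassis plus an even share of the domain's Ethernet uplinks."""
    return fc_per_chassis + eth_per_domain / chassis_per_domain


def per_server(chassis_gbps: float, servers_per_chassis: int) -> float:
    return chassis_gbps / servers_per_chassis


hp = hp_chassis_io_gbps()   # 21 Gbps for 16 servers
cisco = 20.0                # 20 Gbps consolidated I/O for 8 servers
print(per_server(hp, 16), per_server(cisco, 8))
```

That works out to about 1.31 Gbps per HP server versus 2.5 Gbps per UCS server in these minimal configurations.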

This does not take into account the additional management ports required for the VC domain that will not be required by the UCS implementation. An additional consideration will be scaling beyond 64 servers. With this minimal configuration, Cisco UCS will scale to 40 chassis (320 servers), whereas the HP system scales in blocks of 4 chassis as independent VC domains. While multiple VC domains can be managed by a Virtual Connect Enterprise Manager (VCEM) server, the network stacking is done per 4-chassis domain, requiring North/South traffic for domain-to-domain communication.

The other networking consideration in this comparison is that in the default mode all links shown for the UCS implementation will be active. The HP implementation will have one available uplink or port aggregate uplink per VLAN for each VC domain, further restraining bandwidth and/or requiring additional ports.

Joe,

First, let me thank you for taking the time to write this blog and understand Virtual Connect a little better. I have a great deal of respect for you as well. As you might expect, I have a slightly different perspective on this comparison. But you did a great job of laying out some of the basic configurations.

You state that UCS is unique in providing a single point of management for multiple enclosures. In the designs compared here Virtual Connect will also have a single point of management for 4 enclosures containing 64 servers. That’s a good span of management for most applications. If a larger span of management is required we can employ Virtual Connect Enterprise Manager, and manage 250 VC Domains, 1000 enclosures containing up to 16,000 servers. So I think it’s fair to say Virtual Connect has a good answer for points of management. Server/Enclosure management is separate from the I/O management with BladeSystem, but that’s because HP has more than one I/O option. I’ll cover the benefits of that a little later.

You also mention that UCS can scale to 40 enclosures per UCS Manager. There are a couple of problems with that assertion. While that might be physically possible, the current firmware has a limitation that is significantly lower than that. I believe it’s currently 14 enclosures. The next issue is that it won’t provide enough North-South bandwidth to support the vast majority of applications. 40 enclosures would use all the ports in a pair of UCS6140s. So this requires all the uplinks come out of the 2 expansion module slots. I believe the best options here would be 6 ports of 10Gb Ethernet and 6 ports of 8Gb Fibre channel in each UCS6140. That would only provide 0.24 Gb of storage bandwidth and 0.375Gb of network traffic to each of the 320 servers.
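
Ken's per-server figures can be reproduced as follows. The network number is plain division (12 x 10GE across 320 servers). The 0.24 Gb storage figure only works out if each 8Gb FC port is derated by its 8b/10b line encoding to about 6.4 Gbps; that is my reading of how it was derived rather than something stated in the comment. Without the derating the figure would be 0.3 Gb.

```python
# Two UCS 6140s, each with 6 x 10GE and 6 x 8Gb FC uplinks from expansion
# modules, serving 320 blades. ASSUMPTION: FC ports derated by 8b/10b.

SERVERS = 320
FABRIC_INTERCONNECTS = 2
ETH_UPLINKS_PER_FI = 6
FC_UPLINKS_PER_FI = 6
ETH_GBPS = 10
FC_NOMINAL_GBPS = 8
FC_ENCODING_EFFICIENCY = 8 / 10  # 8b/10b (assumed derating)

eth_per_server = FABRIC_INTERCONNECTS * ETH_UPLINKS_PER_FI * ETH_GBPS / SERVERS
fc_per_server = (FABRIC_INTERCONNECTS * FC_UPLINKS_PER_FI * FC_NOMINAL_GBPS
                 * FC_ENCODING_EFFICIENCY / SERVERS)

print(eth_per_server, round(fc_per_server, 3))
```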

If you want to discuss what’s a switch and what’s not a switch, we need to consider the FEX. Cisco would like us to forget about the FEX and make believe it doesn’t exist. But it does exist, and it does have implications. Here are a few things to consider about the FEX.

• It uses the exact same Ethernet chip as is used in the UCS61xx

• It’s a point of oversubscription, 8:1 in the example shown

• It’s making decisions on where to send packets

In my book, anything that uses an Ethernet switching chip to manage oversubscription and make decisions on where to send packets is a switch. Even if it uses a VN-Tag or some other secret sauce instead of a MAC address, it’s a switch.

Let’s look at what this means for different traffic flows, starting with North/South. In the redundant network example above, Virtual Connect has a best case of one hop worst case of two hops to get out of the enclosure. With UCS the best and worst case is two hops out. Both have 32:1 oversubscription as shown, with the ability to reduce that by adding uplinks. Pretty even, slight advantage to Virtual Connect.

When it comes to East-West traffic, it’s a completely different story. With UCS all East/West traffic has to take three hops, and go through 8:1 oversubscription in both directions. With Virtual Connect, 16 servers are connected to the same VC module, so it’s a single hop and no oversubscription for latency or bandwidth sensitive East/West traffic within an enclosure. With solutions like Client Based Computing, VDI and Multi-tiered applications, East/West traffic is becoming more common every day. This is a significant advantage for Virtual Connect.

But the HP blade story isn’t just about Virtual Connect. Cisco’s approach to blades is like Henry Ford’s approach to cars: “You can have any color you want, as long as it’s black” becomes “You can have any network you want, as long as it’s Nexus.” You talk about the balance of architecting vs. engineering a network solution. It’s a lot easier to architect an optimal solution with more tools and options. Let’s take a look at some of the options BladeSystem provides.

Some IT shops still have all Catalyst networks, we have a couple of different Catalyst switches so they can maintain a consistent management framework. Some solutions just require basic, 10Gb connectivity, so we have the ProCurve switch. Some solutions don’t need that much bandwidth, so we have low cost 1:10Gb and ProCurve 1:10Gb options. For really high end needs we also have an Infiniband option to provide 40Gb ultra-low latency connectivity.

Then consider the storage side. With UCS you have one option, FCoE. Well, you could add in the options for iSCSI or NFS, but these will be host based. Here again, there are many options available for HP BladeSystem. Our FCoE option, FlexFabric, will be officially announced in a few days. Many are aware of that, but few know that it will also support full iSCSI offload and boot from iSCSI. So now, instead of having to use expensive Fibre Channel storage to support the boot device for stateless servers, it can be placed on cost-effective iSCSI storage. If you need more local storage, we have a blade that will hold 6 drives, with more to come. If that’s not enough, we have a SAS switch that will allow direct access to 840 terabytes from a blade enclosure.

Not to forget about traditional Fibre Channel. Fibre Channel isn’t going away anytime soon. For shops that need the extra bandwidth, or don’t want to worry about evolving standards, this is still the way to go.

I almost forgot to mention the number of I/O slots. A half-height UCS blade has two 10Gb CEE uplinks, period. The half-height HP blades have two 10Gb uplinks, plus 2 additional mezzanine slots. Both architectures will support 40Gb at some point in the future, but it’s not here yet. This

I could go on and on about the various options and possibilities available with HP BladeSystem, but I think I’ve made my point. UCS does have some interesting attributes. One user interface (UCS Manager) instead of two (Onboard Administrator & VC Manager). You can also save a few uplink ports when compared to Virtual Connect. But these come with a cost. The hierarchical network design adds oversubscription and latency. The single minded focus on FCoE eliminates other networking choices. The expense of the top of rack UCS6100 forces you to combine multiple enclosures into a single management domain. With Virtual Connect the 4 enclosure scenario above can be split into 4 separate domains to provide less oversubscription, fewer hops to the core, and better isolation. All for the cost of a couple of switch ports.

At the end of the day, there is no one-size-fits-all solution. (I stole that from your Cloud Rule #27, Joe.) Blades require a migration strategy, not a forklift. (That’s a paraphrase of Cloud Rule #06.) HP BladeSystem gives you the widest array of options, so you’re not stuck with a one-size-fits-all solution. HP blades use the same management tools and interfaces as our industry-leading ProLiant servers. This means you don’t have to build a new management infrastructure and learn new tools when moving from rack-mount to blade servers. The industry is still buying more traditional servers than blades, so many IT shops still have to go through the transition. By providing all the same tools and capabilities they know, love and use on their existing platform, HP makes the transition as painless as possible.

Ken,

Another great response and it’s definitely helpful to have both views on the discussion. I’m going to start at the top of your comment and work my way down:

I agree 100% that a single VC domain compared to an 8 chassis UCS implementation both represent a single point of management accomplished in different ways. Both systems can then scale,

– HP to 1000 enclosures using VCEM (a separate management server to manage the VC domains).

– Cisco to 40 chassis (320 servers) natively, although real-world bandwidth, as you point out and as I stress to customers, will typically limit this to 10-20 chassis. Beyond a single UCS system (two interconnects and X chassis), UCS relies on best-of-breed software management tools from companies such as BMC and CA to manage multiple UCS systems.

These will also require a separate server/software/licensing, just as VCEM does, but can provide the same scalability. HP would state the advantage of having an HP tool for HP hardware; Cisco would state the advantage of using ‘best-of-breed’ provisioning and management tools. I say neither statement matters if the product works at the right price with minimal complexity.

When it comes to the FEX, it truly isn’t a switch; in fact it’s basically a smart MUX. It doesn’t use the same ASIC as the 6120. The 6120 uses a Unified Port Controller (UPC) switching ASIC, which handles encoding for 1/10G Ethernet and 1/2/4/8G FC, among many other things, for 4 ports. The FEX uses an ASIC formerly known as the Redmond, which makes no switching decisions and forwards frames based on a dedicated pinning mechanism dictated by the Fabric Interconnect. The device has no internal management, and its firmware is provided by the Fabric Interconnect. It does not forward based on VN-Tag; it only delivers frames to pinned ports. It will tag/untag VN-Tags at ingress/egress, and the upstream Fabric Interconnect makes all forwarding decisions.
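
To make the pinning idea concrete, here is a simplified model of how a FEX might map blade slots onto its fabric links. It assumes a modulo rule by slot number (with four links active, slots 1 and 5 share link 1, and so on); treat it as an illustration of static pinning rather than a statement of Cisco's exact implementation. Note that nothing in it looks at a MAC address: the mapping is fixed by configuration, and the forwarding decision stays with the Fabric Interconnect.

```python
# Hypothetical static-pinning model. ASSUMPTION: blade slot s (1-8) is
# pinned to fabric link ((s - 1) % n) + 1 when the IOM has n active links.
# No address lookup happens here; the Fabric Interconnect does the switching.

def pinned_link(slot: int, active_links: int) -> int:
    """Fabric link a blade slot is statically pinned to."""
    if not 1 <= slot <= 8:
        raise ValueError("half-width slots are numbered 1-8")
    if active_links not in (1, 2, 4):
        raise ValueError("IOM assumed to support 1, 2 or 4 fabric links")
    return (slot - 1) % active_links + 1


def pinning_table(active_links: int) -> dict:
    """Slot-to-link map for every slot in the chassis."""
    return {slot: pinned_link(slot, active_links) for slot in range(1, 9)}


print(pinning_table(2))
print(pinning_table(4))
```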

As you state, the currently available FEX does have an oversubscription of anywhere from 8:1 (in the configs above) to 2:1. Oversubscription is built into most modern networks and is also present in the HP configs described above. For those HP systems to achieve 1:1, they would require 32 active ports per chassis (redundant connections for each blade).

– In the HP VC examples above the VC domain is oversubscribed at 64:1 for Ethernet with redundancy.

– In the Cisco example the chassis are configured with 8:1 redundant oversubscription. The entire system is configured with 64:1 oversubscription with redundancy.

Both of these are minimal configurations to discuss cabling and both would most likely be designed with additional uplinks in the real world.
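
The ratios in this reply are straightforward to reproduce. The sketch assumes each server presents one 10G port per fabric and that only forwarding uplinks count as upstream bandwidth; the helper names are hypothetical.

```python
import math


def oversubscription(servers, server_gbps, active_uplinks, uplink_gbps):
    """Server-facing bandwidth divided by active upstream bandwidth (one fabric)."""
    return (servers * server_gbps) / (active_uplinks * uplink_gbps)


def ports_for_ratio(servers, target_ratio, fabrics=2):
    """Uplink ports per chassis for a target ratio, assuming equal link speeds."""
    return math.ceil(servers / target_ratio) * fabrics


print(oversubscription(64, 10, 1, 10))  # HP VC domain, one forwarding uplink
print(oversubscription(8, 10, 1, 10))   # UCS chassis, one 10G link per IOM
print(ports_for_ratio(16, 1))           # HP chassis at 1:1 with redundancy
```

These give 64:1 for the VC domain, 8:1 for each UCS chassis, and 32 ports for a 16-blade HP chassis at 1:1.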

The hop count argument is tough because there is confusion over what a hop is. Speaking in Layer 3 terms, a router is a hop and a switch is not. At Layer 2 a switch is a hop. Because the FEX is not a hop, the Fabric Interconnect is the only hop in the system and frames will all be switched there. That’s a great marketing answer, but the technical answer is different. Regardless of whether the FEX is a hop or not, frames have to traverse it and incur latency, so the overall switching latency is what matters. The FEX operates at about 1.6us and will be traversed twice for server-to-server communication. Additionally, the Fabric Interconnect will be traversed at approximately 3.2us, for a total of 6.4us. The HP VC module is rated at 3.8us, so one hop out is 3.8us, while the two-hop chassis incur 7.6us before leaving the VC domain. Frames may then incur this latency again to get back to another server port, depending on forwarding.
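
The latency comparison above is additive, so it is easy to sanity-check. The per-device figures below are the ones quoted in this reply, not independent measurements, and the sketch assumes the two-hop HP path crosses two VC modules.

```python
# Per-device latencies as quoted above, in microseconds.
FEX_US = 1.6  # UCS IOM/FEX, traversed on the way up and on the way down
FI_US = 3.2   # UCS Fabric Interconnect
VC_US = 3.8   # HP VC module, per module crossed

ucs_server_to_server = 2 * FEX_US + FI_US  # FEX -> Fabric Interconnect -> FEX
vc_one_hop = 1 * VC_US                     # chassis that owns the uplink
vc_two_hops = 2 * VC_US                    # chassis one stacking-link away

print(round(ucs_server_to_server, 1), round(vc_one_hop, 1), round(vc_two_hops, 1))
```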

The next piece, regarding upstream switching and the Henry Ford reference, I’ll take issue with. UCS will manage both blades and rack mounts under the same system by year’s end; there is no road map for rack mounts (60% of the market) on VC, so that’s not ‘as long as it’s black.’ Additionally, UCS does not require Nexus, and 10G ports in a Catalyst switch will fully support UCS. I’m actually responding to a new data center build-out RFP right now, with one option being Catalyst as the upstream for both UCS and Nexus 5000. The other option I’m presenting is Nexus 7000, but it’s a customer option dependent on predicted bandwidth growth.

Cisco is also not abandoning Fibre Channel, and the MDS product line continues to grow. UCS utilizes FCoE at the access layer but does not require it upstream. Each customer can make an independent decision on whether to expand FCoE past the access layer.

Lastly, you begin a discussion of maximum bandwidth, and I wholeheartedly agree that HP can provide more bandwidth per chassis and blade compared to current Cisco UCS options. That being said, UCS provides a sweet spot of bandwidth for the majority of today’s physical and virtual environments. Customers looking at blades that require more than 40Gbps per blade or 80Gbps per chassis will need to look beyond UCS. In those cases I typically recommend rack mounts rather than blades due to overall cost and complexity. HP VC, UCS, and blades as a whole all follow my rule of IT: ‘There is no right tool for every job.’ You state the same.

Your last paragraph is again dead on. Cisco does not have the broad server product portfolio of HP, nor the advanced server power management tools HP has built over years of experience, etc. Cisco has a long way to go to match every option pound for pound. UCS is still in a growth phase; adoption rate and customer demand will dictate additional models of blades, rack mounts, and interconnects. Right now Cisco offers a very compelling server solution that meets the requirements of a large chunk of the server market.

Great comment and feedback, keep it coming!

Joe,

Interesting comments. I’m learning a bit here too.

Calling the FEX a multiplexer doesn’t really help your case. Google MUX, or look it up in Wikipedia (but do it quick, because Joe’s probably editing the page). One word that’s frequently used to describe a MUX is switch. Think about it: you can have up to 8 devices trying to talk out one connection at the same time. This means buffering is required. When the traffic is coming in to the server, the signals need to be de-multiplexed, which means it has to switch the traffic to one of the eight downstream ports. While it might not be a traditional Ethernet switch, in the sense that it doesn’t learn addresses or have management interfaces, it does add latency, and it has issues of buffering, congestion and oversubscription. This means it does need flow control and congestion management if it’s going to support the lossless Ethernet that FCoE requires. That means it is a switch.

When I made the comment “you can have any network you want as long as it’s Nexus” I wasn’t referring to the upstream switches; I was referring to the networking within the UCS components. You are right: although it is Nexus-like, it isn’t Nexus. So a more accurate statement would be “You can have any networking you like, as long as it’s UCS.” How’s that?

I fully understand that Cisco isn’t abandoning Fibre Channel, but there is no place for native Fibre Channel within UCS, only at the edge. This, and all the other options that HP makes available, is why BladeSystem has 56% market share as of last quarter. I might add that this market share has been growing since UCS was introduced.

UCS has the same conundrum we had with our p-Class blades. The architecture is adequate for ~80% of the workloads run in the datacenter, but this means you have to do something else to support the other 20%. We were lucky; we also have the best-selling traditional servers to fill the gap. Now with c-Class and all the available options we can cover over 99% of the workloads, and we still have traditional servers to cover the rest. With UCS, Cisco doesn’t have this luxury. That means shops are forced into a second platform for the applications that don’t fit, which means an extra set of tools, processes and platforms.

Over the last few years HP eliminated BILLIONS of dollars in IT costs through a process they called radical standardization. By employing strict discipline to control the number of tools, platforms and applications that ran in the datacenters, HP was able to dramatically cut costs. In this time when everyone’s trying to reduce spending, there is no room for an 80% solution.

Ken,

First let me ensure everyone knows to be following you on Twitter @BladeGuy. Next let me destroy every point you make in your comments (kidding.)

Per our offline discussion I’m definitely questioning my thinking on the nomenclature of the FEX. It has a lot of switch like functionality but doesn’t actually switch. I’m coming to the conclusion that it’s tough to define because it is very unique. The IOM on the UCS (FEX) and Nexus 2000 (FEX) are really fabric extenders. This is in line with the proposed Network Interface Virtualization (NIV) standards and concepts. It extends the fabric of an actual switch providing some of the functionality of that device without adding a management, Spanning-tree, switching or routing point.

Bottom line: it doesn’t make any switching decisions; it provides shared lanes to and from servers based on pinning mechanisms defined by the master device (Nexus 7000, Nexus 5000, or UCS Fabric Interconnect). It also provides QoS, DCB, and buffering mechanisms, again all controlled by the master device. It’s truly a director-class switch line card separated from the chassis by ‘fabric-links.’

Aside from all of that, arguing the switch-or-no-switch point doesn’t end up adding any value. The questions that matter are:

– Is it, or can it be an individually managed device – No

– Does it participate in FC or Ethernet routing tables – No

– Does it increase the Spanning-tree domain – No

– Does it maintain a MAC (or VN-Tag) table – No

– Do frames have to traverse the device adding latency – Yes about 1.6us

As far as having any network as long as it’s UCS my answer is yes sir, you are correct. UCS is a complete server architecture and any given component is not independent of another. The system requires Fabric Interconnects (UCS 6120 or 6140) for management and switching. From there it provides all management and switching for blade chassis today, and UCS rack mounts by year’s end. The Fabric Interconnects are the top of the server system, and can then be connected to anything.

An HP chassis is just as locked in; the difference is that it offers multiple interconnect options to solve different problems. These interconnects must still be developed by or for HP C-Class specifically, and they also have to be approved by HP for use. For instance, a Cisco customer does not have the option to use a Nexus 4000 in the HP chassis, even though it’s designed for the chassis and has been available to HP for testing for some time. Any blade chassis provides a certain amount of lock-in.

Another point on the same note is cost. UCS provides the access-layer connectivity for 1-40 chassis (with the caveats we’ve beaten to death) in the form of the Fabric Interconnect. This is part of the cost of the UCS blade system, which still matches or beats HP blade costs (without VC modules and licensing) from approximately the 17th server on. In other words, access cross-connects are included in the cost of the system at a cost comparable to or better than HP C-Class. These interconnects can then operate with any vendor’s system a customer chooses.

You’re right that UCS only provides native Fibre Channel at the Fabric Interconnect edge, but HP only provides FCoE from the individual chassis interconnect across the midplane, which offers no cable reduction. Within a UCS system there is no need to provide native Fibre Channel, because native Fibre Channel can connect to the Fabric Interconnects and be carried across FCoE to the servers without separate cabling. This maintains backwards compatibility with Fibre Channel and offers better performance/throughput thanks to the lower encoding overhead of FCoE compared with 8Gb FC (Fibre Channel uses 8b/10b encoding, while FCoE over 10GbE uses 64b/66b).
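The encoding argument is easy to check with exact arithmetic. This sketch assumes the standard nominal line rates (8.5 GBd for 8GFC, 10.3125 GBd for 10GBASE-R carrying FCoE); the dictionary and function names are illustrative.

```python
from fractions import Fraction

# Assumed nominal line rates (GBd) and encoding efficiencies:
# 8G Fibre Channel signals at 8.5 GBd with 8b/10b (8 data bits per 10 line bits);
# 10GbE, which carries FCoE, signals at 10.3125 GBd with 64b/66b.
LINKS = {
    "8G Fibre Channel (8b/10b)": (Fraction(85, 10), Fraction(8, 10)),
    "10GbE / FCoE (64b/66b)": (Fraction(103125, 10000), Fraction(64, 66)),
}


def usable_rate(line_rate_gbaud: Fraction, efficiency: Fraction) -> Fraction:
    """Data rate left after line-encoding overhead, in Gb/s."""
    return line_rate_gbaud * efficiency


for name, (baud, eff) in LINKS.items():
    rate = usable_rate(baud, eff)
    overhead_pct = (1 - eff) * 100
    print(f"{name}: {float(rate):.2f} Gb/s usable, "
          f"{float(overhead_pct):.1f}% encoding overhead")
```

This compares line encoding only: 8b/10b spends 20% of the line rate on encoding versus roughly 3% for 64b/66b. It does not model the extra Ethernet and FCoE framing that FCoE adds to each Fibre Channel frame.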

I definitely agree that UCS is right for 80% of workloads. For the remaining workloads that can run on x86 architecture, Cisco provides rack-mount servers to meet the specialized needs. These rack mounts are designed to be managed by and attached to the same UCS infrastructure, and that functionality is scheduled for release this year.

HP has made HUGE advancements and catapulted the server industry forward.

Cisco has:

– Analyzed the market shifts towards virtualization and cloud

– Watched the dominant server vendors (HP, IBM, etc.) and assessed what they did well and what could be improved.

– Delivered a product that from day 1 improved on the server architecture as a whole meeting customer challenges and providing a fresh way to handle compute resources.

Cisco’s real business has never been switching; it’s been proper recognition of coming market shifts and excellent product execution into those shifts.

– From routing to routing/switching with the Catalyst launch, which has dominated for nearly 10 years.

– From routing and switching to IP Telephony, in which they hold approximately 50% market share.

– From R/S and voice to FC and iSCSI networking with MDS, which drove McData into an acquisition.

– Now from networks to servers with a steady and healthy incline of customer base and installed systems.

I expect that HP will continue to incorporate UCS-like innovation, much as they’ve tried to with FlexFabric, and the real competition will be between HP and Cisco while the rest lose market share.

Joe

Joe,

I love the Cisco UCS architecture, but I have one burning question. When using the UCS 6248 and the UCS 2208 FEX, how much latency is introduced in server-to-server traffic? Is it going to be 2x 1.6 µs (FEX) + 2x 2.0 µs (UCS 6248), for a total of 7.2 µs?

Thanks.

JD,

Server-to-server traffic on the same fabric (A or B) will incur 2x FEX latency and 1x UCS FI latency. In your example this is 5.2 µs. Traffic from fabric A to B or vice versa will be dependent on design and/or upstream switching.
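The difference between the two estimates is just how many Fabric Interconnect hops are counted. A small sketch, using the per-hop figures quoted in this thread and ignoring cabling and serialization delay (the names are illustrative):

```python
# Per-hop latencies in nanoseconds, from the figures quoted in this thread:
# about 1.6 us per FEX and 2.0 us per UCS 6248 Fabric Interconnect.
HOP_LATENCY_NS = {"fex": 1600, "fi": 2000}


def path_latency_ns(hops: list[str]) -> int:
    """Sum per-hop latencies along a path (cabling/serialization ignored)."""
    return sum(HOP_LATENCY_NS[hop] for hop in hops)


# Same fabric (A or B): server -> FEX -> Fabric Interconnect -> FEX -> server.
same_fabric = ["fex", "fi", "fex"]
# The original estimate counted the Fabric Interconnect twice.
double_counted = ["fex", "fi", "fi", "fex"]

print(path_latency_ns(same_fabric) / 1000)     # 5.2
print(path_latency_ns(double_counted) / 1000)  # 7.2
```

A-to-B traffic is left out on purpose: as noted above, its path depends on the upstream design, so any hop list for it would be an assumption.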

Joe

This recent blog has some good proof points on how these other options available with BladeSystem can be used to build the most cost-effective solution.

http://h30507.www3.hp.com/t5/Around-the-Storage-Block-Blog/Make-the-smart-virtualization-choice-Sometimes-it-s-FCoE/ba-p/81751

Disclaimer: I am a Cisco employee and a former HP employee. The views expressed here are my own.

Ken says the expense of the UCS6100 “forces you to combine multiple enclosures into a single management domain”.

First let’s talk about how the UCS “forces you” into a single management domain. I was under the impression that customers liked having consolidated management. The single pane of glass provided by Cisco’s UCS is just one of the many benefits UCS provides over HP’s mini-rack approach (http://www.mseanmcgee.com/2010/05/the-mini-rack-approach-to-blade-server-design/). A single UI for hardware, state, and I/O management for up to 112 servers… that doesn’t sound bad to me.

Next, let’s talk about price. The HP Flex-10 module costs $12K (rounding down), and you want two of them ($24K). You’ll also need a pair of HP VC-FC modules. If I choose the least expensive VC-FC module, that still costs $19K for a pair. So with Virtual Connect, you spend $43K in interconnect modules alone for EVERY SINGLE ENCLOSURE. Oh, yeah, you have to buy Onboard Administrators for every enclosure, too, but I’ll let that cost slide even though Cisco embeds that functionality into a single device and a single GUI. The configuration discussed in this blog (4 HP enclosures) would cost $172,000 just for I/O modules.
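The per-enclosure arithmetic above can be reproduced with a short sketch. The prices are the rounded figures quoted in this comment, not verified list prices, and the function names are illustrative.

```python
# Rounded prices as quoted in the comment above, in USD.
FLEX10_MODULE = 12_000   # one HP Flex-10 module
VC_FC_PAIR = 19_000      # least expensive VC-FC option, per pair


def io_cost_per_enclosure() -> int:
    # Two Flex-10 modules plus one pair of VC-FC modules. Onboard
    # Administrator cost is excluded, as in the comment.
    return 2 * FLEX10_MODULE + VC_FC_PAIR


def io_cost(enclosures: int) -> int:
    # Virtual Connect modules are bought again for every enclosure.
    return enclosures * io_cost_per_enclosure()


print(io_cost_per_enclosure())  # 43000
print(io_cost(4))               # 172000
```

The cost scales linearly with enclosure count, which is the contrast being drawn with Fabric Interconnects bought once per UCS domain.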

You only purchase Cisco Fabric Interconnects once. The Cisco UCS Fabric Interconnects are a bargain when compared to Virtual Connect.

Doron,

Thanks for stopping by and for the input. Counting cost, etc., is a bit outside the scope of the intended networking discussion, but it’s an interesting point, and we’ve been off topic anyway. The numbers are definitely interesting, and I’m working on some comparable real-world quotes for the two solutions. Once I’ve finished that, I’ll post any pieces I can.

Joe
