Note: I’ve added a couple of corrections below thanks to Stuart Miniman at Wikibon (http://wikibon.org/wiki/v/FCoE_Standards). See the comments for more.

I’ve been digging a little more into the HP FlexFabric announcements in order to wrap my head around the benefits and positioning. I’m a big endorser of a single network for all applications, LAN, SAN, IPT, HPC, etc. and FCoE is my tool of choice for that right now. While I don’t see FCoE as the end goal, mainly due to limitations on any network use of SCSI which is the heart of FC, FCoE and iSCSI, I do see FCoE as the way to go for convergence today. FCoE provides a seamless migration path for customers with an investment in Fibre Channel infrastructure and runs alongside other current converged models such as iSCSI, NFS, HTTP, you name it. As such any vendor support for FCoE devices is a step in the right direction and provides options to customers looking to reduce infrastructure and cost.

FCoE is quickly moving beyond the access layer where it has been available for two years now. That being said the access layer (server connections) is where it provides the strongest benefits for infrastructure consolidation, cabling reduction, and reduced power/cooling. A properly designed FCoE architecture provides a large reduction in overall components required for server I/O. Let’s take a look at a very simple example using standalone servers (rack mount or tower.)

In the diagram we see traditional Top-of-Rack (ToR) cabling on the left vs. FCoE ToR cabling on the right. This is for the access layer connections only. The infrastructure and cabling reduction is immediately apparent for server connectivity. 4 switches, 4 cables, 2-4 I/O cards reduced to 2, 2, and 2. This is assuming only 2 networking ports are being used which is not the case in many environments including virtualized servers. For servers connected using multiple 1GE ports the savings is even greater.

Two major vendor options exist for this type of cabling today:

Brocade:

- Brocade 8000 – This is a 1RU ToR CEE/FCoE switch with 24x 10GE fixed ports and 8x 1/2/4/8G fixed FC ports. Supports directly connected FCoE servers.

- This can be purchased as an HP OEM product.

- Brocade FCoE 10-24 Blade – This is a blade for the Brocade DCX Fibre Channel chassis with 24x 10GE ports supporting CEE/FCoE. Supports directly connected FCoE servers.

Note: Both Brocade data sheets list support for CEE which is a proprietary pre-standard implementation of DCB which is in the process of being standardized with some parts ratified by the IEEE and some pending. The terms do get used interchangeably so whether this is a typo or an actual implementation will be something to discuss with your Brocade account team during the design phase. Additionally Brocade specifically states use for Tier 3 and ‘some Tier 2’ applications which suggests a lack of confidence in the protocol and may suggest a lack of commitment to support and future products. (This is what I would read from it based on the data sheets and Brocade’s overall positioning on FCoE from the start.)

Cisco:

- Nexus 5000 – There are two versions of the Nexus 5000:

- 1RU 5010 with 20 10GE ports and 1 expansion module slot which can be used to add (6x 1/2/4/8G FC, 6x 10GE, 8x 1/2/4G FC, or 4x 1/2/4G FC and 4x 10GE)

- 2RU 5020 with 40 10GE ports and 2 expansion module slots which can be used to add (6x 1/2/4/8G FC, 6x 10GE, 8x 1/2/4G FC, or 4x 1/2/4G FC and 4x 10GE)

- Both can be purchased as HP OEM products.

- Nexus 7000 – There are two versions of the Nexus 7000, both of which are core/aggregation layer data center switches. The latest announced 32x 1/10GE line card supports the DCB standards, along with Cisco FabricPath, which is based on the pre-ratified TRILL standard.

Note: The Nexus 7000 currently only supports the DCB standard, not FCoE. FCoE support is planned for Q3CY10 and will allow for multi-hop consolidated fabrics.

Taking the noted considerations into account any of the above options will provide the infrastructure reduction shown in the diagram above for stand alone server solutions.

When we move into blade servers the options are different. This is because Blade Chassis have built in I/O components which are typically switches. Let’s look at the options for IBM and Dell then take a look at what HP and FlexFabric bring to the table for HP C-Class systems.

IBM:

- BNT Virtual Fabric 10G Switch Module – This module provides 1/10GE connectivity and will support FCoE within the chassis when paired with the Qlogic switch discussed below.

- Qlogic Virtual Fabric Extension Module – This module provides 6x 8GB FC ports and when paired with the BNT switch above will provide FCoE connectivity to CNA cards in the blades.

- Cisco Nexus 4000 – This module is a DCB switch providing FCoE frame delivery while enforcing DCB standards for proper FCoE handling. This device will need to be connected to an upstream Nexus 5000 for Fibre Channel Forwarder functionality. Using the Nexus 5000 in conjunction with one or more Nexus 4000s provides multi-hop FCoE for blade server deployments.

- IBM 10GE Pass-Through – This acts as a 1-to-1 pass-through for 10GE connectivity to IBM blades. Rather than providing switching functionality this device provides a single 10GE direct link for each blade. Using this device IBM blades can be connected via FCoE to any of the same solutions mentioned under standalone servers.

Note: Versions of the Nexus 4000 also exist for HP and Dell blades but have not been certified by those vendors; currently only IBM supports the device. Additionally, the Nexus 4000 is a standards-compliant DCB switch without FCF capabilities. This means that it provides the lossless delivery and bandwidth management required for FCoE frames, along with FIP snooping for FC security on Ethernet networks, but does not handle functions such as encapsulation and de-encapsulation. As a result, the Nexus 4000 can be used with any vendor’s FCoE forwarder (Nexus or Brocade currently), pending joint support from both companies.

Dell:

- Dell 10GE Pass-Through – Like the IBM pass-through the Dell pass-through will allow connectivity from a blade to any of the rack mount solutions listed above.

Both Dell and IBM offer Pass-Through technology which will allow blades to be directly connected as a rack mount server would. IBM additionally offers two other options: using the Qlogic and BNT switches to provide FCoE capability to blades, and using the Nexus 4000 to provide FCoE to blades.

Let’s take a look at the HP options for FCoE capability and how they fit into the blade ecosystem.

HP:

- 10GE Pass-Through – HP also offers a 10GE pass-through providing the same functionality as both IBM and Dell.

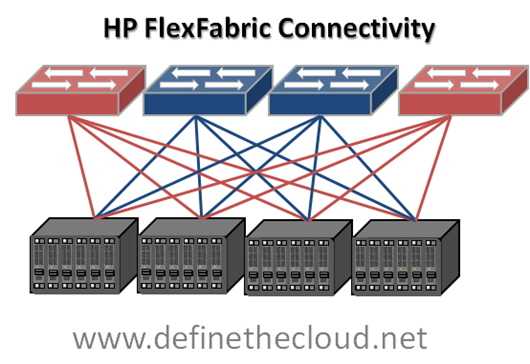

- HP FlexFabric – The FlexFabric switch is a Qlogic FCoE switch OEM’d by HP which provides a configurable combination of FC and 10GE ports upstream and FCoE connectivity across the chassis mid-plane. This solution only requires two switches for redundancy as opposed to four with FC and Ethernet configurations. Additionally this solution works with HP FlexConnect providing 4 logical server ports for each physical 10GE link on a blade, and is part of the VirtualConnect solution which reduces the management overhead of traditional blade systems through software.

On the surface FlexFabric sounds like the way to go with HP blades, and it very well may be, but let’s take a look at what it’s doing for our infrastructure/cable consolidation.

With the FlexFabric solution FCoE exists only within the chassis and is split to native FC and Ethernet moving up to the Access or Aggregation layer switches. This means that while reducing the number of required chassis switch components and blade I/O cards from four to two there has been no reduction in cabling. Additionally HP has no announced roadmap for a multi-hop FCoE device and their current offerings for ToR multi-hop are OEM Cisco or Brocade switches. Because the HP FlexFabric switch is a Qlogic switch this means any FC or FCoE implementation using FlexFabric connected to an existing SAN will be a mixed vendor SAN which can pose challenges with compatibility, feature/firmware disparity, and separate management models.

HP’s announcement to utilize the Emulex OneConnect adapter as the LAN on motherboard (LOM) adapter makes FlexFabric more attractive, but the benefits of that LOM would also be realized using the 10GE Pass-Through connected to a 3rd party FCoE switch, or a native Nexus 4000 in the chassis if HP were to approve and begin to OEM the product.

Summary:

As the title states FlexFabric is definitely a step in the right direction but it’s only a small one. It definitely shows FCoE commitment which is fantastic and should reduce the FCoE FUD flinging. The main limitation is the lack of cable reduction and the overall FCoE portfolio. For customers using, or planning to use VirtualConnect to reduce the management overhead of the traditional blade architecture this is a great solution to reduce chassis infrastructure. For other customers it would be prudent to seriously consider the benefits and drawbacks of the pass-through module connected to one of the HP OEM ToR FCoE switches.

Hi Joe,

A couple of clarifications. You state that IEEE DCB was ratified in 2009, perhaps you are confusing this with T11 FC-BB-5. IEEE DCB is made up of a couple of pieces, 802.1Qbb (Priority Flow Control) passed committee in June 2010, 802.1Qaz which includes Enhanced Transmission Selection and DCBX is still pending committee (but definition is not expected to change). Terminology of CEE and DCB are often used interchangeably. I’ve tried to pull the standards pieces together at http://wikibon.org/wiki/v/FCoE_Standards.

Also, Cisco announced that they plan to ship FCoE support on the Nexus 7000 in Q3’10.

Thanks for pointing out all of the solutions. I always find it interesting that some of the same vendors that are giving guidance to customers to wait another year for FCoE are the same ones that aren’t seeing any sales growth in the FCoE products (can you say self-fulfilling prophecy?).

Stu

Joe,

Another excellent post.

I do agree that VirtualConnect (with all the OEMs) is a step in the right direction.

You bring some very good points to light about the Qlogic (HP branded) FlexFabric switch. I don’t know, but I’m very interested to find out, how Qlogic is going to address the challenges with compatibility, feature/firmware disparity, and separate management models.

I do get your logic. However, I don’t see Dell’s solution to be a “true” VirtualConnect solution because you can’t create vNICs from a physical port like the HP, Cisco, and IBM solutions.

Stu,

Thanks for the corrections and clarification on the Nexus 7000 FCoE road map date. I couldn’t remember if that was a public announcement or not so I defaulted to the safety of CY11 out of laziness 😉 Great post over at Wikibon by the way, thanks for the link. I look forward to catching up with you at VMworld.

Thomas,

Thanks as always for reading, the Qlogic piece will be interesting to watch play out. While I don’t look at Qlogic as an enterprise FC switch vendor I can say that their switches have played nice for me in the past within IBM chassis. This is typically because they are minimalistic and do little more than pass standard FC frames, which makes interoperability a breeze. That being said, they still introduce the potential issues I discussed, as well as a SAN switch managed by the server team connected to the Enterprise SAN.

As far as providing virtualized I/O goes, meaning virtual interfaces from a single link, to my knowledge IBM and HP can both provide that with the Emulex OneConnect adapter, and Cisco can do the same with the VIC (formerly Palo.) I’m not familiar with a Dell offering, but their game is typically price, not innovation and feature set.

Looking forward to catching up with you Thursday to show you another way to do blade and rack mount computing systems.

Joe

Joe,

While your diagrams above look great from an architectural view of the world, things change dramatically when you look at how things actually get deployed. Your diagram shows 8 Ethernet and 8 Fibre Channel connections to support 64 servers with HP BladeSystem. By using the stacking capabilities of Virtual Connect you can reduce the Ethernet uplinks to two. In this model the cables required for HP look like this:

• 2 Ethernet

• 8 Fibre Channel

• Other links

o 6 Stacking Links

o 8 Management

If we look at the same 64 servers for a Cisco UCS you can get physical connectivity with 2 Ethernet and 2 Fibre Channel connections, but this will not be enough storage bandwidth to do real work. I expect 1Gb of Fibre Channel per server is a good starting point. Eight 8Gb FC connections give the 64 Gb required to give 1Gb to 64 servers. So to build a functional equivalent to the 4 HP enclosure example above, it will look like this:

• 2 Ethernet

• 8 Fibre Channel

• Other links

o 32 Stacking Links

o 4 Management

The number of data uplinks coming into the rack ends up being the same from both products. The only difference is where you plug them in. When you look at the total number of cables it’s a clear advantage for HP. This doesn’t even begin to address the total available bandwidth for each solution.

People often use fuzzy math to compare the two solutions. When hard numbers are used to compare real working configurations the newcomer doesn’t have the claimed advantages over the so called “legacy solution”.

Regards,

Ken

Seriously, Ken? 1Gbit of sustained FC bandwidth per server? Not for any set of real world applications. Yes, I’m sure you can find an exception, but for the typical enterprise applications running in a real environment with normal bursty traffic it will be way lower than that.

You, Gartner, and the rest of HP might want to look at this post, which is based on real data collected from a real customer environment: http://www.dailyhypervisor.com/2009/12/21/is-your-blade-ready-for-virtualization-part-2-real-numbers. There are some math and typo errors in it, so I’ll start with the raw data at http://www.dailyhypervisor.com/wp-content/uploads/2009/12/2009-12-21_173139.png

Average Read 442,086 Bytes per sec

Average Write 200,029 Bytes per sec

Average Disk I/O Bytes 603,720 Bytes per sec

Let’s be generous and do worst case Read + Write = 642,115 Bytes per sec. Convert to Gbits per sec and we get 0.0047Gbits per second of disk I/O for a VM.

So apparently, according to you, we have a consolidation ratio of 212:1. At a more realistic consolidation ratio, say 20:1, I need about 0.094Gbits (roughly 94Mbits) per server, at 40:1 about 0.188Gbits (roughly 188Mbits) per server, and I have 20Gbits of uplink capacity.

Add in the network I/O of 0.000238Gbits per second and it makes no appreciable difference.

So yes I can actually make do with 2 cables and see no performance issues, in real world environments.
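The conversion above can be checked with a short Python sketch. It assumes a binary gigabit (2^30 bits), since that is the interpretation that lands closest to the quoted 0.0047 figure; a decimal gigabit gives a slightly higher result. The function and constant names are illustrative only.

```python
# Per-VM averages from the raw data quoted above (bytes per second).
AVG_READ_BPS = 442_086
AVG_WRITE_BPS = 200_029

# Assumption: binary gigabit. Swap in 10 ** 9 for a decimal gigabit.
BITS_PER_GBIT = 2 ** 30


def bytes_per_sec_to_gbits(bytes_per_sec: float) -> float:
    """Convert a byte rate to Gbit/s under the gigabit assumption above."""
    return bytes_per_sec * 8 / BITS_PER_GBIT


def per_host_gbits(vm_gbits: float, vms_per_host: int) -> float:
    """Disk I/O one host carries at a given VM consolidation ratio."""
    return vm_gbits * vms_per_host


worst_case_bps = AVG_READ_BPS + AVG_WRITE_BPS  # read + write, worst case
vm_gbits = bytes_per_sec_to_gbits(worst_case_bps)

print(f"worst case per VM: {worst_case_bps} bytes/s = {vm_gbits:.5f} Gbit/s")
print(f"VMs needed to fill 1 Gbit/s: {1 / vm_gbits:.0f}")
for ratio in (20, 40):
    print(f"{ratio}:1 consolidation: {per_host_gbits(vm_gbits, ratio):.3f} Gbit/s per host")
```

Even at 40 VMs per host the per-host figure stays well under a single Gbit/s with these averages, which is the point being made about the 20Gbits of uplink capacity.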

Tony,

Thanks for reading and bringing in some real-world example numbers.

Joe

Tony,

I was doing my math a little differently. The numbers I was working with assume a peak (not sustained) bandwidth requirement of 412KB/sec for each ESX host. I also like to keep my servers running if one of my redundant fabrics is down, so I double it. I admit this is conservative design, but you’ll have a lot of eggs in one basket when you build this, so you want a good basket. So my actual number is 0.82 GB/Sec, forgive me for rounding up.

But let’s put that aside, and go with your number. .0047Gb/sec/VM * 25 VM/host * 64 hosts = 7.52Gb/sec. I don’t think that passes the sniff test, but let’s go with that.

What we really need to consider is the starting point for comparison. Before anybody started implementing blade servers, we were using traditional rack mount servers with lots of 1Gb NICs and 8Gb Fibre Channel. Please don’t write this off as ancient technology. Plenty of shops are buying and implementing this design today. They feel comfortable with it, and it works for them. It’s not what either of us would recommend, but it is still out there in the market place. So let’s compare with that as the baseline.

The rack mount story looks like this (and I’ve seen it in Cisco’s marketing as well).

64 Servers with 6 Ethernet ports, 2 Fibre Channel Ports and 1 management port each is:

384 Ethernet

128 Fibre Channel

64 management (Ethernet)

576 total cables and switch ports

Compare that with the uplinks required for an equivalent Virtual Connect solution:

2 Ethernet

8 Fibre Channel

8 management

18 cables

576 cables to 18 cables means that HP Virtual Connect Flex-10 or FlexFabric provides a 97% reduction in cables and uplink ports.

Compare that with the uplinks required for an equivalent UCS solution:

2 Ethernet

2 Fibre Channel

4 management

8 cables

576 cables to 8 cables is roughly a 98.5% reduction

So even if we accept that 8Gb is enough bandwidth for 64 servers running ESX, at the end of the day, UCS might be 1.5% better at cable reduction than c-Class. If you feel you need more SAN bandwidth, the story quickly falls apart.

Regards

Ken
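The cable arithmetic in the comment above can be reproduced with a small Python sketch. The per-server port counts and uplink counts are taken directly from that comment and are the commenter's figures, not independently verified ones; the helper names are made up for illustration.

```python
# Rack-mount baseline from the comment: ports per server, 64 servers.
SERVERS = 64
RACK_PORTS_PER_SERVER = {"ethernet": 6, "fibre_channel": 2, "management": 1}

# Uplink counts as listed in the comment (assumed, not verified).
VIRTUAL_CONNECT_UPLINKS = {"ethernet": 2, "fibre_channel": 8, "management": 8}
UCS_UPLINKS = {"ethernet": 2, "fibre_channel": 2, "management": 4}


def total_cables(counts: dict[str, int], multiplier: int = 1) -> int:
    """Sum the cable counts, optionally multiplied by a number of servers."""
    return sum(counts.values()) * multiplier


def reduction_percent(baseline: int, reduced: int) -> float:
    """Percentage of cables eliminated relative to the baseline."""
    return (1 - reduced / baseline) * 100


baseline = total_cables(RACK_PORTS_PER_SERVER, SERVERS)
for name, uplinks in (
    ("Virtual Connect", VIRTUAL_CONNECT_UPLINKS),
    ("UCS", UCS_UPLINKS),
):
    cables = total_cables(uplinks)
    pct = reduction_percent(baseline, cables)
    print(f"{name}: {baseline} -> {cables} cables ({pct:.1f}% reduction)")
```

Changing the uplink dictionaries (for example, adding stacking links or more Fibre Channel uplinks) shows how sensitive the comparison is to which cables get counted.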

Ken,

Question on UCS management cables…where does the “4” come from. Are you talking the actual fabric management ports? If so, the number would be 2 since the secondary port is not yet active. Or are you talking the IOM (formerly known as FEX) uplinks from the chassis to the fabric interconnects? In which case, the number is 4 x # chassis.

regards,

adam

one math item I got wrong…4 x # chassis…two IOMs so 8 x # chassis for optimal performance.

Joe,

One more thing I’d like to comment on. You stated that “FlexFabric switch is a Qlogic FCoE switch OEM’d by HP”.

I have a couple of issues with that statement. While we might use merchant silicon in many of our networking products, that is very different than OEMing a product. FlexFabric is completely an HP product. Also, Virtual Connect modules are not switches just like UCS61xx is not a switch.

Ken

Ken,

To my knowledge the FlexFabric switch is a Qlogic switch with VirtualConnect software. It is not an HP built switch. This makes sense as HP does not make an FC switch and would have no FC stack from which to build an FCoE stack. I’ll work on digging up some references, and add them to this post when I find them.

Lastly VirtualConnect modules are switches, although they are not marketed as such. The hardware is the same as ProCurve, the software and feature set are different. For instance a glance through the VirtualConnect documentation will show mentions of HP loop prevention techniques, etc. Additionally it makes switching decisions for frames sent to and from servers, which by definition makes it a switch.

Joe

When I see the phrase OEM, a product like the HP/Cisco Catalyst 3120 comes to mind. It looks like a catalyst product, it acts like a Catalyst, it is a Catalyst. By contrast, Qlogic has no products that look, act or feel like a FlexFabric module.

As for the interoperability concerns you wondered about, FlexFabric uses the same exact NPIV technology that Brocade uses in their access gateway and Cisco uses in their MDS and UCS products. With NPIV there are no FC switch interoperability issues.

When it comes to what makes something a switch or not, Cisco has the exact same issue. Cisco doesn’t consider the UCS6100 a switch for the same exact reasons HP doesn’t consider VC to be a switch: things like the fact that both products lack support for key switch functionality and the fact that they’re managed as part of the server infrastructure.

Ken,

You bring up a great point about the 6120 being labeled ‘not-a-switch’. I’m addressing that in a blog I’m putting together now, but for clarity the 61X0 Fabric Interconnects are switches regardless of mode. They do make forwarding decisions. In the default/recommended End Host mode they operate differently from a switch as perceived by upstream switches. That is where the confusion comes in.

I consider an Ethernet switch any device that makes a switching decision based on a MAC address. That means both the Cisco UCS 61X0 and HP VirtualConnect modules are switches regardless of fancy software and features.

Joe
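The definition above (a device that makes a forwarding decision based on a MAC address) can be illustrated with a toy learning switch in Python. This is a generic textbook sketch, not a description of how VirtualConnect modules or UCS Fabric Interconnects are actually implemented; the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LearningSwitch:
    """Toy Layer 2 switch: learns source MACs, forwards on destination MAC."""

    ports: list[int]
    mac_table: dict[str, int] = field(default_factory=dict)

    def handle_frame(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        """Return the list of ports the frame is sent out of."""
        # Learn which port the sender is reachable through.
        self.mac_table[src_mac] = in_port

        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            # Destination sits behind the ingress port; nothing to forward.
            return []
        # Known destination: the switching decision is a MAC table lookup.
        return [out_port]


switch = LearningSwitch(ports=[1, 2, 3])
first = switch.handle_frame(1, "00:00:00:00:00:aa", "00:00:00:00:00:bb")
reply = switch.handle_frame(2, "00:00:00:00:00:bb", "00:00:00:00:00:aa")
second = switch.handle_frame(1, "00:00:00:00:00:aa", "00:00:00:00:00:bb")
print(first, reply, second)
```

The first frame is flooded because the destination is unknown; once both hosts have been seen, each frame goes out of exactly one port. Anything that behaves this way fits the definition given above, whatever the marketing name.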

Ken,

My math was based on providing the exact same bandwidth, I did not add the complexity or additional cables required for stacking, I also did not include the additional cables required by HP for management because this was solely a networking discussion. Let’s just use your math from the comment above:

‘Your diagram shows 8 Ethernet and 8 Fibre Channel connections to support 64 servers with HP BladeSystem.’ – 16 Cables

In this model the cables required for HP looks like this:

• 2 Ethernet

• 8 Fibre Channel

• Other links

o 6 Stacking Links

o 8 Management

If we ignore the management cables you added and use the stacking cables which carry data we end up with – 16 Cables 2 Eth, 8 FC, and 6 Stack. Additionally we’ve now reduced our Ethernet bandwidth and moved it into a multi-hop pattern by ‘stacking’ it between chassis.

This post was not intended to get into the nitty gritty of Virtual Connect networking so I intentionally avoided those pieces and showed a 1-for-1 bandwidth match. But again even using your math there is no cable savings for data cables and I did not mention management cables etc.

As far as the UCS comparison, I was not including Cisco UCS in this discussion, just an overview of the available FCoE options for traditional blade manufacturers. I will save my reply to your UCS cabling description for a blog post because it will require network diagrams of both solutions. To begin with, your numbers are not correct due to the fundamental architecture of the system. I’ll try and post that blog this week.

Thanks for reading Ken and definitely keep me honest. I have no intention of, and gain nothing from, providing false information or FUD, nor do I play the game of stacking the numbers in one side’s favor to hedge the outcome.

Great post.

Joe, you make a good point on cable quantity, and that was the main topic of your blog, although I think Ken was also drawing a qualitative difference between the Ethernet stacking cables and Ethernet cables consuming ToR ports.

Curious: I think from a raw cable count perspective, power cables will outnumber signal cables in any scenario above that didn’t use Pass-Throughs (or 3-phase connections).

Daniel,

Power cables will typically not outnumber the I/O connections on servers, with exceptions existing. All of the blade vendors mentioned above can power a chassis with 4 or fewer cables, and LAN/SAN I/O would require at least 4 for redundancy.

The ToR port count is a key point in this discussion and it’s not apparent due to the architectural differences between the Cisco blade methodology and the traditional blade design. This is another piece I will cover in my next post.

Joe

Re: power cables. My (partially tongue-in-cheek) comment about power cables was based on the idea that a fully-converged FCoE network ought to only have 2 cables coming out of every server end-point. That’s your drawing with the green switches above, and it would apply whether the end-point is a single server, or an enclosure of 50 blades.

A single server can get away with 2 power jumper cords, but typically blade enclosures require 4+ power cables to handle a full complement of blades. So by cable count, an enclosure of blades using FCoE would have more power cables than data cables.

I’ll carry the “power cable” thought further: A fully simplified/unified architecture ought to merge the photon carrying cables (data) with the electron carrying cables (power).

Daniel,

Now I see where you’re coming from, yes a converged network chassis (FCoE or other) could potentially get by on 2x 10G connections. This is even more viable in the near future when 40GE begins to ship (not talking any planned roadmap just assuming based on the protocol ratification.) Depending on the blade chassis and power options you’re looking at 2-4 power cables, which could be potentially twice the amount of data cables…. That is somewhat awesome and sad at the same time, great that we’ve reduced cabling requirements so far, but sad to see a chassis with two data cables and 4 power whips.

I like the idea of further simplifying by combining power and Ethernet on the same cable. We already do Power over Ethernet (PoE) and Ethernet over power, why not consolidate it once more with data center class powered Ethernet, just remember that’s not the kind of cable you want to lick in order to ensure it’s active 😉

That’s one to send to the rocket scientists over at HP labs, it would probably take those guys about 15 minutes to sort it out. In fact it’s probably already being worked out; vendors just can’t converge too much at one time, otherwise they’d have nothing to sell next year 😉

Everyone,

Thanks for the discussion on this one, great feedback, let’s keep the conversation moving forward!

Joe

Hi,

I have just been assigned the project of testing the new HP FlexFabric product, but I have no idea where to start.

Has anybody tested the NPIV feature on HP FlexFabric? I would like to know the test plans used and what the results were after the test.

I would also like to know more about the setup that was used.

MS,

A colleague provided me this FlexFabric test plan which may be useful to you. It’s not finalized or fully edited so don’t mind the minor blemishes. Thanks for reading!

http://definethecloud.net/wp-content/uploads/2010/08/FlexFabricTestPlan.docx

Joe

Is anyone using the HP 10GE Pass-thru for FCoE today?

I’ve mostly heard references to the Nexus w/HP Pass-thru as a good “migration path” to FCoE, but can it be done today? For example, can I take an existing c7000 enclosure and ProLiant BladeSystem G7 servers that either include integrated HP FlexFabric LOMs (for example, some ProLiant BladeSystem G7 servers which are said to use HP Branded Emulex OneConnect-based LOMs), or add a OneConnect-based Mezz adapter to their existing ProLiant Blade Servers – and expect FCoE to work properly with Cisco Nexus FCoE-supporting infrastructure (Nexus 5000, Nexus 2232 FEX)?

Is there any good information (architecture, design, whitepapers, etc.) that shows what some IT organizations are doing today to use FCoE w/HP Pass-thru and Nexus infrastructure?

Jason,

There are definitely customers using the pass-through modules along with Cisco Nexus switching to provide FCoE server connections. Conceptually a pass-through module is providing nothing more than a 1-to-1 cable for each blade connection, it operates the same way as directly cabling the blade to the upstream switch. Because of this operational mode you get any/all of the benefits of the upstream switch provided the I/O cards or onboard LOM on the blade are capable of that feature (ie. CNA capable for FCoE.) That will be the biggest catch, ensuring your LOM is a CNA. If it is on the HP blade it will be an Emulex OneConnect adapter in LOM form which will provide CNA capability along with a slew of other benefits (iSCSI boot, offload, FlexNIC capability.) If not you still have the option of using the FCoE software stack available for Linux and Windows to get FCoE to the blade from a traditional 10GE NIC or LOM.

The most important consideration when choosing to go with pass-through is the cabling. You end up with a cable for each server connection, which will be more total cables than a traditional blade architecture. This has to be carefully considered. Overall the need to move to pass-through to gain appropriate switching functionality is a workaround for missing features/options in the blade architecture itself.

Joe
