As the industry moves deeper and deeper into virtualization, automation, and cloud architectures, it forces us as engineers to break free of our traditional silos. For years many of us were able to do quite well being experts in one discipline with little to no knowledge of another. Cloud computing, virtualization, and other current technological and business initiatives are forcing us to branch out beyond our traditional knowledge set and understand more of the data center architecture as a whole.

It was this concept that gave me the idea to start a new series on the blog covering the foundation topics of each of the key areas of the data center. These will be lessons designed from the ground up to give you familiarity with a new subject or a refresher on an old one. Depending on your background, some, none, or all of these may be useful to you. As we get further through the series I will be looking for experts to post on subjects I’m not as familiar with; WAN and Security are two that come to mind. If you’re interested in writing a beginner’s lesson on one of those topics, or any other, please comment or contact me directly.

Server Systems:

As I’ve said in previous posts, the application is truly the heart of the data center. Applications are the reason we build servers, networks, and storage systems. Applications are the email systems, databases, web content, etc. that run our businesses. Applications run within the confines of an operating system, which interfaces directly with server hardware and firmware (discussed later) and provides a platform for the application. Operating systems come in many types; the most common are Unix, Linux, and Windows, with other variants used for specialized purposes such as mainframes and supercomputers.

Because the server sits closer to the application than any other hardware, understanding server hardware and its functionality is key. Server hardware breaks down into several major components and concepts. For this discussion we will stick with the common AMD/Intel architecture known as x86.

| Component | Description |
|---|---|
| System board (motherboard) | All components of a server connect via the system board. The system board is a circuit board with specialized connectors for the server subcomponents, and it provides connectivity between them. |
| Central Processing Unit (CPU) | The CPU is the workhorse of the server. It performs the calculations that allow the operating system and applications to run; whatever work an application does is processed by the CPU. A CPU is placed in a socket on the system board, and each socket holds one CPU. |
| Random Access Memory (RAM) | RAM holds data that the operating system and applications are using but that the CPU is not processing at that moment. For instance, the term ‘load’ typically refers to moving data from permanent storage (disk) into memory, where it can be accessed faster. Memory is electronic and can be accessed very quickly, but it requires power to retain data, which is why it is known as volatile. |
| Disk | Disk is permanent storage media, traditionally made up of magnetic platters known as disks. Other types exist, including flash disks, which provide much greater performance at a higher cost. The key to disk storage is that it is non-volatile: it does not require power to retain data. Disk can be either internal to the server or external in a separate device. Commonly, server disk is consolidated in central storage arrays attached over a specialized network or network protocol. Storage and storage networks will be discussed later in this series. |
| Input/Output (I/O) | I/O comprises the methods of getting data in and out of the server. I/O comes in many forms, but the two primary methods used in today’s data centers are Local Area Networks (LAN), using Ethernet as the underlying protocol, and Storage Area Networks (SAN), using Fibre Channel as the underlying protocol (both will be discussed later in this series). These networks attach to the server through I/O ports, typically found on expansion cards. |
| System bus | The system bus is the set of paths that connect the CPU to memory. Its design is specific to the CPU vendor. |
| I/O bus | The I/O bus is the path that connects the expansion cards (I/O cards) to the CPU and memory. Several standards exist for these connections, allowing multiple vendors to interoperate without issue. The most common bus type in modern servers is PCI Express (PCIe), which supports greater bandwidth than previous bus types and therefore allows higher-bandwidth networks to be used. |
| Firmware | Firmware is low-level software that is commonly hard-coded onto hardware chips. Firmware runs the hardware device at a low level and interfaces with the BIOS. In most modern server components, the firmware can be updated through a process called ‘flashing.’ |
| Basic Input/Output System (BIOS) | The BIOS is a type of firmware stored in a chip on the system board. It is the first code loaded when a server boots and is primarily responsible for initializing hardware and loading an operating system. |

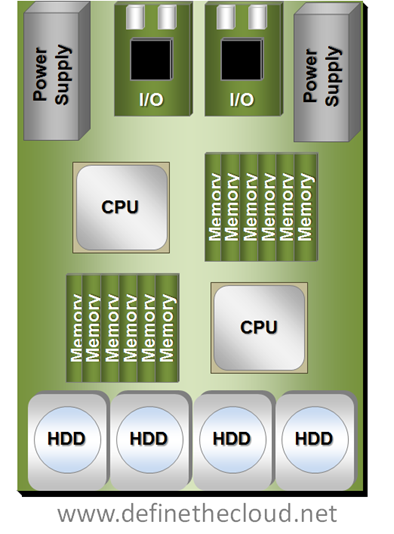

Server

The diagram above shows a two-socket server. Starting at the bottom, you can see the disks, in this case internal Hard Disk Drives (HDDs). Moving up, you can see two sets of memory and CPU, followed by the I/O cards and power supplies. The power supplies convert alternating current (AC) to the direct current (DC) levels used by the system. Not shown are the fans that move air through the system for cooling.

The bus systems, which are not shown, would be a series of traces and chips on the system board allowing separate components to communicate.

A Quick Note About Processors:

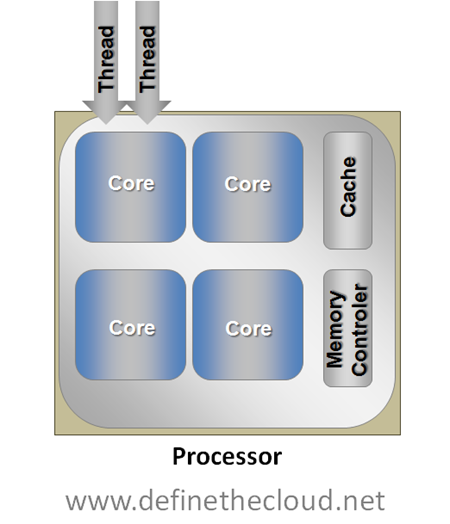

Processors come in many shapes and sizes, and were traditionally rated by speed, measured in hertz. Over the last few years a new concept has been added to processors: ‘cores.’ Simply put, a core is a CPU placed on a chip beside other cores, and the cores share certain components such as cache and the memory controller (both outside the scope of this discussion). If a processor has 2 cores, it operates largely as if it were 2 physically independent, identical processors and provides the corresponding advantages.

Another technology that has been around for quite some time is hyper threading. Traditionally, a processor core works on a single stream of instructions, known as a thread, at a time. Any given thread typically uses only a portion of the processor’s execution resources, leaving other portions idle. Hyper threading allows a core to run 2 threads at once, issuing work from both in the same cycle as long as they don’t need the same portions of the processor. For applications that can use multiple threads, hyper threading provides an average increase of approximately 30%, whereas a second core would roughly double performance.
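To put the rule of thumb above into numbers, here is a minimal sketch that treats one core running one thread as 100 and applies the ~30% hyper threading figure from the text. The 30% constant and the assumption that the application scales perfectly across threads are simplifications for illustration; real gains vary by workload.

```python
# Rule-of-thumb throughput model based on the figures in the text above.
# Values are percentages of one core running one thread (= 100).
# HT_GAIN_PERCENT is an assumption taken from the "approximately 30%"
# average quoted above; real gains vary widely by workload.
HT_GAIN_PERCENT = 30


def relative_throughput(cores: int, hyperthreading: bool) -> int:
    """Estimate throughput as a percentage of a single core without
    hyper threading, assuming the application scales perfectly across threads."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    per_core = 100 + (HT_GAIN_PERCENT if hyperthreading else 0)
    return cores * per_core


if __name__ == "__main__":
    print(relative_throughput(1, hyperthreading=False))  # 100
    print(relative_throughput(1, hyperthreading=True))   # 130
    print(relative_throughput(2, hyperthreading=False))  # 200
```

Comparing the last two lines shows why a second physical core is worth more than hyper threading on a single core: 200 versus 130 under these assumptions.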

Hyper threading and multiple cores are not mutually exclusive and can be used together. For instance, if both processors in the diagram above were 4-core processors, the server would have 8 physical cores; with hyper threading enabled, it would present a total of 16 logical cores.
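The arithmetic in the example above (sockets × cores per socket × threads per core) can be sketched as follows. It assumes two hardware threads per core when hyper threading is on, which is the usual x86 configuration; the function name is illustrative. Python’s standard `os.cpu_count()` reports the logical count on a running system.

```python
import os


def logical_processors(sockets: int, cores_per_socket: int, hyperthreading: bool) -> int:
    """Number of logical processors the OS sees.

    Assumes two hardware threads per core when hyper threading is enabled,
    which is the usual configuration for x86 servers.
    """
    threads_per_core = 2 if hyperthreading else 1
    return sockets * cores_per_socket * threads_per_core


# The two-socket, 4-core-per-socket example from the text
print(logical_processors(2, 4, hyperthreading=False))  # 8
print(logical_processors(2, 4, hyperthreading=True))   # 16

# On a live system, os.cpu_count() reports the logical processor count
# (physical cores x threads per core), or None if it cannot be determined.
print(os.cpu_count())
```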

Not all applications and operating systems can take advantage of multiple processors and cores; therefore, more cores or processors are not always advantageous. Proper application sizing and tuning are required to match the number of cores to the task at hand.
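One common way to reason about this point, though the post itself doesn’t name it, is Amdahl’s law: if only a fraction p of an application’s work can run in parallel, the best-case speedup on n cores is 1 / ((1 − p) + p / n). A minimal sketch is below. Note that plugging in logical cores from hyper threading overstates the result, since those are not full cores.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup on `cores` cores when only `parallel_fraction`
    (between 0.0 and 1.0) of the work can run in parallel (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0.0 and 1.0")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Compare a single-threaded app, a half-parallel app, and a highly parallel app
for p in (0.0, 0.5, 0.95):
    speedups = [round(amdahl_speedup(p, n), 2) for n in (1, 2, 8, 16)]
    print(f"parallel fraction {p:.2f}: {speedups}")
```

With half of the work serial, even 16 cores give less than a 2x speedup (about 1.88x), which is why sizing cores to the application matters more than simply adding them.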

Server Startup:

When a server is first powered on, the BIOS is loaded from EEPROM (Electrically Erasable Programmable Read-Only Memory) located on the system board. While in control, the BIOS performs a Power-On Self-Test (POST) to verify the basic operability of the main system components. From there it detects and initializes key components such as the keyboard, video, and mouse. Finally, the BIOS searches the available boot media for a device containing a valid, bootable Master Boot Record (MBR). It loads the MBR and hands control to that code, which continues loading the operating system.
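In practice, the BIOS treats a sector as a valid MBR when it finds the two-byte boot signature 0x55 0xAA at byte offsets 510 and 511 of the first 512-byte sector. The sketch below shows only that signature check; it does not model the partition table or the boot code itself, and the `path` argument of the file helper is a placeholder for a disk image of your own.

```python
MBR_SIZE = 512                  # the MBR occupies the first 512-byte sector
BOOT_SIGNATURE = b"\x55\xaa"    # must appear at byte offsets 510 and 511


def has_boot_signature(sector: bytes) -> bool:
    """Return True if a first sector carries the 0x55 0xAA boot signature."""
    return len(sector) >= MBR_SIZE and sector[510:512] == BOOT_SIGNATURE


def read_first_sector(path: str) -> bytes:
    """Read the first sector of a disk image file.

    `path` is a placeholder; reading a raw disk device instead of an
    image file usually requires administrator privileges.
    """
    with open(path, "rb") as f:
        return f.read(MBR_SIZE)


# Synthetic sectors so the example runs without a real disk
blank_sector = bytes(MBR_SIZE)                          # all zeros
boot_sector = bytes(MBR_SIZE - 2) + BOOT_SIGNATURE      # zeros + signature
print(has_boot_signature(blank_sector))  # False
print(has_boot_signature(boot_sector))   # True
```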

Which devices the BIOS searches, and in what order, is configurable in the BIOS settings. Typical boot devices are:

- CD/DVD-ROM

- USB

- Internal Disk

- Internal Flash

- iSCSI SAN

- Fibre Channel SAN
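The search through this list can be sketched as a simple loop over the configured order that stops at the first device that is both present and bootable. The `BootDevice` type and the sample configuration are hypothetical and exist only for illustration; real firmware exposes this through its setup menus rather than any such API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BootDevice:
    name: str
    present: bool   # device detected by the BIOS
    bootable: bool  # media holds a valid boot record


def select_boot_device(boot_order: List[BootDevice]) -> Optional[BootDevice]:
    """Return the first device in the configured order that is present
    and bootable, or None if nothing bootable is found."""
    for device in boot_order:
        if device.present and device.bootable:
            return device
    return None


# Hypothetical configuration: CD/DVD-ROM first, then USB, then internal disk
boot_order = [
    BootDevice("CD/DVD-ROM", present=True, bootable=True),  # recovery disc inserted
    BootDevice("USB", present=False, bootable=False),
    BootDevice("Internal Disk", present=True, bootable=True),
]

chosen = select_boot_device(boot_order)
print(chosen.name if chosen else "No bootable device found")  # CD/DVD-ROM
```

If the recovery disc is removed, the same loop falls through to the internal disk, which is exactly why the configured order matters when several boot devices are available.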

Boot order is very important when more than one boot device is available, for instance when booting from a CD-ROM to recover an installed operating system. It is also important to note that both iSCSI and Fibre Channel network-connected disks are handled by the operating system as if they were internal Small Computer System Interface (SCSI) disks. This becomes very important when configuring non-local boot devices. SCSI as a whole will be covered later in this series.

Operating System:

Once the BIOS has finished preparing the hardware and transferred control to the boot code in the MBR, that code takes over and loads the operating system (OS). The OS is the interface between the user/administrator and the server hardware. The OS provides a common platform for various applications to run on and handles the interface between those applications and the hardware. To interface properly with hardware components, the OS requires drivers for that hardware. Essentially, drivers are OS-level software that allow applications running in the OS to interface with the firmware running on the hardware.

Applications:

Applications come in many different forms to provide a wide variety of services. Applications are the core of the data center and are typically the most difficult piece to understand. Each application, whether commercially available or custom built, has unique requirements. Different applications have different considerations for processor, memory, disk, and I/O. These considerations become very important when looking at new architectures, because any change in the data center can have a significant effect on application performance.

Summary:

The server architecture spans from the I/O inputs, through the server hardware, to the application stack. Properly understanding this architecture is vital to application performance, and applications are the purpose of the data center. Servers consist of a set of major components: CPUs to process data, RAM to store data for fast access, I/O devices to get data in and out, and disk to store data permanently. This system is put together for the purpose of serving an application.

This post is the first in a series intended to build a foundation in the data center. If you’re starting from scratch, they may all be useful; if you’re familiar with one or two aspects, then pick and choose. If this series becomes popular, I may do a 202 series as a follow-on. If I missed something here or made a mistake, please comment. Also, if you’re a subject matter expert in a data center area and would like to contribute a foundation post to this series, please comment or contact me.

Very good post, looking forward to more in the series!

Andy,

Thanks for reading; I’m glad you liked it. I plan on tackling LAN networks next. Depending on how long-winded I get, expect to see that in the next week or two.

Joe
