I spent today with a customer doing a proof of concept and failover testing demo on a Cisco UCS, VMware and NetApp environment. As I sit on the train heading back to Washington from NYC, I thought it might be a good time to put together a technical post on the failover behavior of UCS blades. UCS has some advanced availability features that should be highlighted, and it also has some areas where failover behavior may not be obvious. In this post I’m going to cover server failover situations within the UCS system without going very deep into the connections upstream to the network aggregation layer (mainly because I’m hoping Brad Hedlund at http://bradhedlund.com will cover that soon; hurry up Brad 😉).

**Update** Brad has posted the UCS Networking Best Practices post I was hinting at above. It’s a fantastic HD video blog; check it out here: http://bradhedlund.com/2010/06/22/cisco-ucs-networking-best-practices/

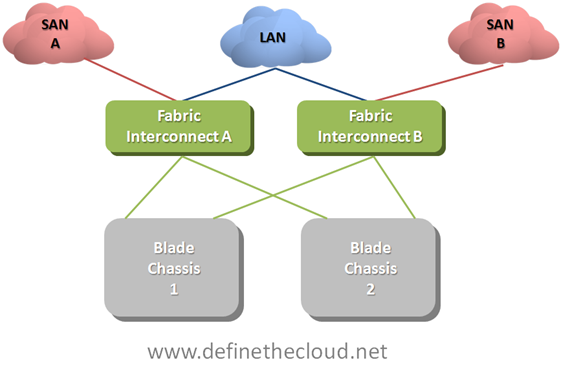

To start, let’s get to a baseline understanding of how UCS moves server traffic. UCS consists of a number of blade chassis and a pair of Fabric Interconnects (FIs). The blade chassis hold the blade servers, and the FIs handle all of the LAN and SAN switching as well as the chassis/blade management that, in other implementations, is typically done using six separate modules in each blade chassis.

Note: When running redundant Fabric Interconnects, you must configure them as a cluster using the L1 and L2 cluster links between the two FIs. These ports carry only cluster heartbeat and high-level system messages, with no data traffic or Ethernet protocols, so I have not included them in the following diagrams.

UCS Network Connectivity

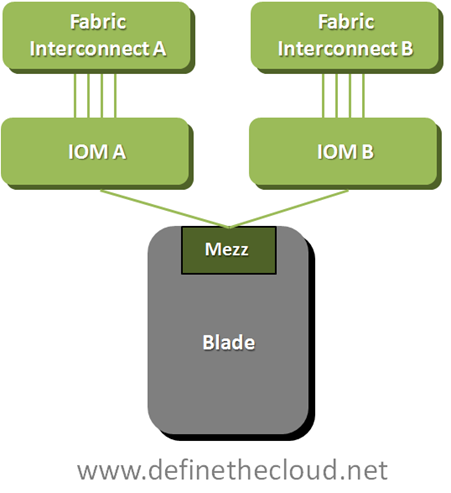

Each individual blade gets connectivity to the network(s) via mezzanine form factor I/O card(s). Depending on which blade type you select, each blade will have either one redundant set of connections to the FIs or two. Regardless of the type of I/O card you select, each card always has one 10GE connection to each FI through the blade chassis I/O module (IOM).

UCS Blade Connectivity

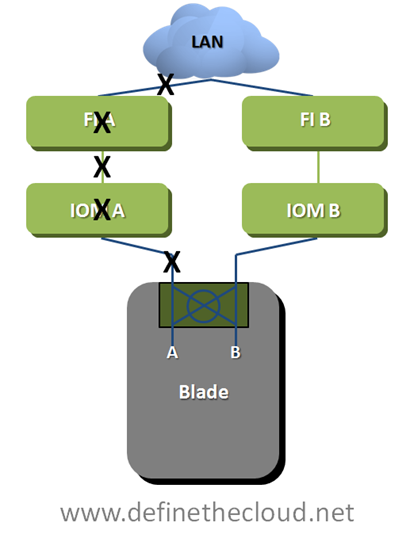

In the diagram you’re seeing the connectivity for a blade with a single mezzanine slot. The blade is redundantly connected to both Fabric A and Fabric B via 2x10GE links. This connection passes through the IOM, which is not a switch itself; instead it acts as a remote device managed by the fabric interconnect. This means all forwarding decisions are handled by the FIs, and frames are consistently scheduled within the system regardless of source and/or destination. The total switching latency of the UCS system is approximately equal to that of a top-of-rack switch or a blade form factor LAN switch in other blade products. Because the IOM makes no switching decisions, it needs another method to move the traffic of its 8 internal mid-plane ports upstream over its 4 available uplinks. The method it uses is static pinning, which provides very elegant switching behavior with extremely predictable failover scenarios. Let’s first look at the pinning, and then at what it means for UCS network failures.


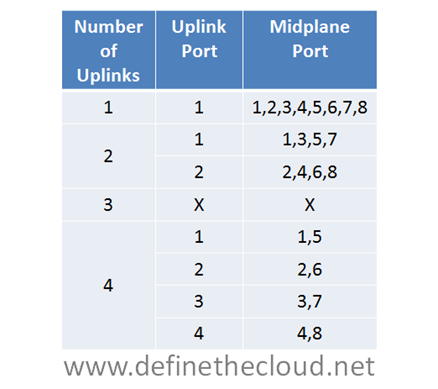

The chart above shows the static pinning mechanism used within UCS. Given the configured number of uplinks from IOM to FI, you will know exactly which uplink a particular mid-plane port is using. Each half-width blade attaches to a single mid-plane port and each full-width blade attaches to two. The chart has no pinning layout for three ports because a three-link layout is not supported; if three links are used, the two-port layout defines how the uplinks are utilized. This is because eight devices cannot be evenly load-balanced across three links.
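The chart itself is an image, but the examples in this post (blades one and five sharing link one with four uplinks; blade three landing on link one in the second example) are consistent with a simple modulo rule. The sketch below assumes that rule; the function names are hypothetical and this is an illustration, not Cisco code.

```python
# Hypothetical model of IOM static pinning. The modulo rule is inferred from
# this post's examples, not taken from Cisco documentation.

def effective_links(configured_links: int) -> int:
    """Three uplinks have no pinning layout of their own and behave like two."""
    if configured_links == 3:
        return 2
    if configured_links not in (1, 2, 4):
        raise ValueError(f"unsupported uplink count: {configured_links}")
    return configured_links


def uplink_for_port(midplane_port: int, configured_links: int) -> int:
    """Return the 1-based IOM uplink that a 1-based mid-plane port is pinned to."""
    if not 1 <= midplane_port <= 8:
        raise ValueError("mid-plane ports are numbered 1-8")
    links = effective_links(configured_links)
    return (midplane_port - 1) % links + 1


def pinning_table(configured_links: int) -> dict:
    """Map each uplink number to the list of mid-plane ports pinned to it."""
    links = effective_links(configured_links)
    table = {link: [] for link in range(1, links + 1)}
    for port in range(1, 9):
        table[uplink_for_port(port, configured_links)].append(port)
    return table


for n in (1, 2, 3, 4):
    print(n, "uplink(s):", pinning_table(n))
```

With four uplinks this yields {1: [1, 5], 2: [2, 6], 3: [3, 7], 4: [4, 8]}, so blades one and five share uplink one. A full-width blade owns two consecutive ports (for example 1 and 2), so under this rule it reaches two different uplinks whenever two or more links are in use.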

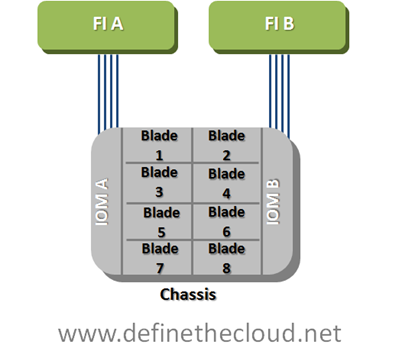

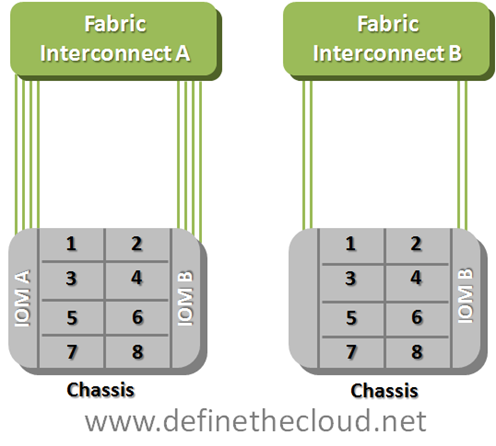

IOM Connectivity

The example above shows the numbering of mid-plane ports; with half-width blades, the blade numbers match the port numbers. When using full-width blades, each blade has access to a pair of mid-plane ports (1-2, 3-4, 5-6, 7-8). In the example above, blade three uses mid-plane port three, which is pinned to uplink three in the left example and to uplink one in the second, based on the static pinning in the chart.

So now let’s discuss how failover happens, starting at the operating system. There are two pieces of failover to discuss: NIC teaming and SAN multi-pathing. To understand them, we need a simple logical connectivity view of how a UCS blade sees the world.

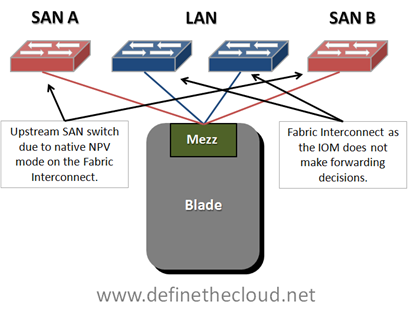

UCS Logical Connectivity

To simplify your thinking when working with blade systems, reduce your logical diagram to the key components by removing the blade chassis itself from the picture. Remember that a blade is nothing more than a server connected to a set of switches; the only difference is that the first-hop link is on the chassis mid-plane rather than a cable. The diagram above shows that a UCS blade is logically cabled directly to redundant Storage Area Network (SAN) switches for Fibre Channel (FC) and to the FIs for Ethernet. Out of personal preference I leave the FIs out of the SAN side of the diagram because they operate in N_Port Virtualizer (NPV) mode, which means forwarding decisions are handled by the upstream NPIV-compliant SAN switch.

Starting at the Operating System (OS), we will work up the network stack to the FIs to discuss failover. We will assume FCoE is being used; if you are not using FCoE, ignore the FC piece of the discussion, as the Ethernet behavior remains the same.

SAN Multi-Pathing:

SAN multi-pathing is the way we obtain redundancy in FC, FCoE, and iSCSI networks. It provides the OS with two separate paths to the same logical disk. This allows the server to access the data in the event of a failure and, in some cases, to load-balance traffic across both paths to the same disk. Multi-pathing comes in two general flavors: active/active or active/passive. Active/active load-balances and has the potential to use the full bandwidth of all available paths. Active/passive uses one path as the primary and reserves the others for failover. Typically the deciding factor is cost vs. performance.

Multi-pathing is handled by software residing in the OS, usually provided by the storage vendor. The software monitors the entire path to the disk, ensuring data can be written to and/or read from the disk via that path. Any failure in the path will cause a multi-pathing failover.

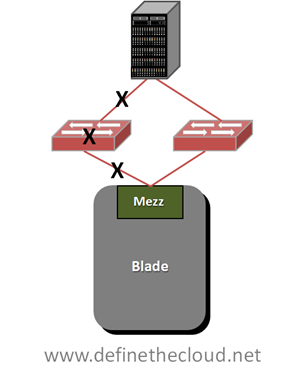

Multi-Pathing Failure Detection

Any of the failures designated by the X’s in the diagram above will trigger failover, including failure of a storage controller itself (controllers are typically redundant in an enterprise-class array). SAN multi-pathing is an end-to-end failure detection system. This is much easier to implement in a SAN, where there is one constant target, than in a LAN, where data may be sent to several different targets across the LAN and WAN. Within UCS, SAN multi-pathing does not change from the system used for standalone servers: each blade is redundantly connected, and any path failure will trigger a failover.
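To make the active/active vs. active/passive distinction and the end-to-end check concrete, here is a toy path selector. It is a generic model under my own assumptions, not any storage vendor’s multipathing software, and the class name and component names (such as "fi-a" or "controller-a") are hypothetical.

```python
from itertools import count


class MultipathDevice:
    """Toy multipathing device: one logical disk reachable over several paths.

    A generic illustration only, not any storage vendor's multipathing software.
    """

    def __init__(self, paths: dict, policy: str = "active/passive"):
        if policy not in ("active/active", "active/passive"):
            raise ValueError(f"unknown policy: {policy}")
        self.paths = paths            # path name -> components on that path, host to array
        self.policy = policy
        self.failed = set()           # components currently down
        self._turn = count()          # round-robin counter for active/active

    def fail(self, component: str) -> None:
        self.failed.add(component)

    def repair(self, component: str) -> None:
        self.failed.discard(component)

    def healthy_paths(self) -> list:
        # End-to-end check: a path is usable only if none of its components has failed.
        return [name for name, hops in self.paths.items()
                if not self.failed.intersection(hops)]

    def next_path(self) -> str:
        usable = self.healthy_paths()
        if not usable:
            raise RuntimeError("all paths to the disk have failed")
        if self.policy == "active/passive":
            return usable[0]                           # first listed path while it is healthy
        return usable[next(self._turn) % len(usable)]  # spread I/O over all healthy paths


# Hypothetical component names for a blade with one path through each fabric.
disk = MultipathDevice(
    {"A": ["cna-port-a", "iom-a", "fi-a", "san-switch-a", "controller-a"],
     "B": ["cna-port-b", "iom-b", "fi-b", "san-switch-b", "controller-b"]},
    policy="active/active",
)
print([disk.next_path() for _ in range(4)])  # ['A', 'B', 'A', 'B']
disk.fail("fi-a")                            # any hop on path A going down...
print([disk.next_path() for _ in range(2)])  # ['B', 'B']  ...moves all I/O to B
```

The failure can be anywhere on the path (CNA port, IOM, FI, SAN switch or controller); the selector only cares that some hop on it is down, which is what makes the check end-to-end.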

NIC-Teaming:

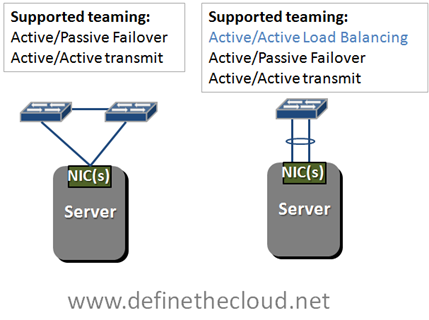

NIC teaming is handled in one of three general ways: active/active load-balancing, active/passive failover, or active/active transmit with active/passive receive. The teaming type you use depends on the network configuration.

Supported teaming Configurations

In the diagram above we see two network configurations: one with a server dual-connected to two switches, and a second with a server dual-connected to a single switch using a bonded link. A bonded link acts as a single logical link with the redundancy of the physical links within it. Active/active load-balancing is only supported using a bonded link because of how the upstream switch makes MAC address forwarding decisions. To load-balance, an active/active team shares a logical MAC address; if the upstream switches don’t see both links as a single logical link, this causes instability upstream and lost packets. This bonding is typically done using the Link Aggregation Control Protocol (LACP) standard.
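The instability comes from MAC learning: a switch records each source MAC against the port it last arrived on. The toy model below (hypothetical class and port names, not any vendor’s implementation) sends a shared team MAC out of two links. With two independent ports the MAC entry jumps on nearly every frame; with a bonded link both members map to one logical port, so the entry never moves. The same effect appears at the aggregation layer when the two links land on separate switches.

```python
from typing import Optional


class MacTable:
    """Minimal model of source-MAC learning on one switch (not a vendor implementation)."""

    def __init__(self, bundles: Optional[dict] = None):
        # Physical port -> logical port, e.g. both members of an LACP bundle -> "po1".
        self.bundles = bundles or {}
        self.table = {}   # MAC -> logical port it was last learned on
        self.moves = 0    # how many times a MAC jumped to a different logical port

    def learn(self, src_mac: str, ingress_port: str) -> None:
        port = self.bundles.get(ingress_port, ingress_port)
        previous = self.table.get(src_mac)
        if previous is not None and previous != port:
            self.moves += 1
        self.table[src_mac] = port


team_mac = "02:00:00:00:00:01"   # hypothetical MAC shared by an active/active team
frames = ["eth1", "eth2"] * 4    # the team alternates transmit links

unbundled = MacTable()
bonded = MacTable(bundles={"eth1": "po1", "eth2": "po1"})
for port in frames:
    unbundled.learn(team_mac, port)
    bonded.learn(team_mac, port)

print(unbundled.moves, bonded.moves)  # 7 0
```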

If you glance back up at the UCS logical connectivity diagram, you’ll see that UCS blades are connected using the method on the left of the teaming diagram. This means our NIC teaming options are active/passive failover and active/active transmit only. This assumes a bare-metal OS such as Windows or Linux installed directly on the hardware; in virtualized environments such as VMware, all links can be actively used for transmit and receive because there is another layer of switching occurring in the hypervisor.

I typically get feedback that the lack of active/active NIC teaming on UCS bare-metal blades is a limitation. In reality, it is not. Remember that active/active NIC teaming was traditionally used on 1GE networks to provide more than 1GE of bandwidth. This was limited to a maximum of 8 aggregated links, for a total of 8GE of bandwidth. A single 10GE UCS link provides 25% more bandwidth than an 8-port active/active team.
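The comparison can be worked in both directions: the eight-link team falls 20% short of one 10GE link, which is the same as saying the 10GE link offers 25% more than the team.

$$
\frac{8 \times 1\,\text{GE}}{10\,\text{GE}} = 0.8 \;\;(20\%\ \text{less}), \qquad
\frac{10\,\text{GE}}{8 \times 1\,\text{GE}} = 1.25 \;\;(25\%\ \text{more})
$$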

NIC teaming, like SAN multi-pathing, relies on software in the OS, but unlike SAN multi-pathing it typically detects only link failures and, in some cases, loss of a gateway. Due to the architecture of UCS, NIC teaming will detect failures of the mid-plane path, the IOM, the utilized link from the IOM to the Fabric Interconnect, or the FI itself. This is because the IOM acts as a line card of the FI, so the blade is logically connected directly to the FI.

UCS Hardware Failover:

UCS has a unique feature on several of the available mezzanine cards that provides hardware failure detection and failover on the card itself. Some of the mezzanine cards have a mini-switch built in that can fail path A over to path B or vice versa. This extends failure detection beyond what the OS can see and improves bandwidth and failure management. This feature is available on Generation I Converged Network Adapters (CNAs) and the Virtual Interface Card (VIC), and is currently available only in UCS blades.

UCS Hardware Failover

UCS hardware failover provides greater failure visibility than traditional NIC teaming, thanks to intelligence built into the FI as well as the overall architecture of the system. In the diagram above, hardware failover detects mid-plane path, IOM, and IOM uplink failures as link failures because of the architecture. Additionally, if the FI loses its upstream network connectivity to the LAN, it will signal a failure to the mezzanine card, triggering failover. Any failure at a point designated by an X will cause the mezzanine card to divert Ethernet traffic to the B path. UCS hardware failover applies only to Ethernet traffic, because SAN networks are built as redundant, independent fabrics and would not support this failover method.
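The detection scope of the three mechanisms, as this post describes it, fits in a small lookup table. The failure-point labels are shorthand introduced here, and the sets encode only the statements above; I have assumed that hardware failover also catches an outright FI failure, since it is described as seeing more than NIC teaming. Treat it as a reading aid, not a Cisco feature matrix.

```python
# Shorthand labels for failure points, from the blade outward (names are illustrative).
MIDPLANE = "mid-plane path"
IOM = "IOM"
IOM_UPLINK = "IOM-to-FI link"
FI = "fabric interconnect"
FI_LAN_UPLINK = "FI upstream LAN connectivity"
SAN_SWITCH = "SAN switch"
CONTROLLER = "storage controller"

DETECTS = {
    # End-to-end check from the OS to the disk; storage traffic only.
    "SAN multi-pathing": {MIDPLANE, IOM, IOM_UPLINK, FI, SAN_SWITCH, CONTROLLER},
    # Link-state based; the blade is logically cabled straight to the FI.
    # (Gateway monitoring, which some teaming drivers offer, is not modeled.)
    "NIC teaming": {MIDPLANE, IOM, IOM_UPLINK, FI},
    # Ethernet only; the FI also signals loss of its own LAN uplinks to the adapter.
    # FI failure itself is an assumption for this mechanism (see lead-in).
    "UCS hardware failover": {MIDPLANE, IOM, IOM_UPLINK, FI, FI_LAN_UPLINK},
}


def mechanisms_that_react(failure: str) -> list:
    """Names of the mechanisms (as described in this post) that detect a failure point."""
    return [name for name, points in DETECTS.items() if failure in points]


for point in (IOM_UPLINK, FI_LAN_UPLINK, CONTROLLER):
    print(f"{point}: {mechanisms_that_react(point)}")
```

The interesting row is the FI losing its LAN uplinks: the blade’s own link stays up, so only the FI-signaled hardware failover reacts.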

Using UCS hardware failover provides two key advantages over other architectures:

- Allows redundancy for NIC ports in separate subnets/VLANs which NIC teaming cannot do.

- Provides the ability for network teams to define the failure capabilities and primary path for servers alleviating misconfigurations caused by improper NIC teaming settings.
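Both advantages come from the failover policy living on the adapter’s virtual NIC definition rather than in the OS. A minimal model, assuming each vNIC carries a primary fabric and a hardware-failover flag; the class and field names are hypothetical, not the UCS Manager object model:

```python
from dataclasses import dataclass
from typing import Optional

OTHER = {"A": "B", "B": "A"}


@dataclass
class VNic:
    name: str
    vlan: int
    primary_fabric: str   # "A" or "B", chosen by the network team
    hw_failover: bool     # allow the adapter to move traffic to the other fabric

    def active_fabric(self, fabric_up: dict) -> Optional[str]:
        """Fabric this vNIC currently forwards on, or None if it has no path."""
        if fabric_up[self.primary_fabric]:
            return self.primary_fabric
        secondary = OTHER[self.primary_fabric]
        if self.hw_failover and fabric_up[secondary]:
            return secondary
        return None


# Single-port vNICs in different VLANs: no OS team is needed for either to survive.
mgmt = VNic("mgmt", vlan=10, primary_fabric="A", hw_failover=True)
backup = VNic("backup", vlan=20, primary_fabric="B", hw_failover=True)
no_failover = VNic("lab", vlan=30, primary_fabric="A", hw_failover=False)

state = {"A": False, "B": True}   # e.g. something on the A path has failed
print([(v.name, v.active_fabric(state)) for v in (mgmt, backup, no_failover)])
# [('mgmt', 'B'), ('backup', 'B'), ('lab', None)]
```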

IOM Link Failure:

The next piece of UCS server failover involves the I/O modules themselves. Each I/O module has a maximum of four 10GE uplinks serving 8x10GE mid-plane connections to the blades, at an oversubscription of 1:1 to 8:1 depending on configuration. As stated above, UCS uses a static, non-configurable pinning mechanism to assign each mid-plane port to a specific uplink from the IOM to the FI. This pinning system allows the IOM to operate as an extension of the FI without the need for Spanning Tree Protocol (STP) within the UCS system. It also makes oversubscription straightforward to design for in both normal and failure situations.
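Because every mid-plane port and every uplink runs at 10GE, the oversubscription on an IOM uplink is just the number of populated ports pinned to it. A quick calculation, assuming the modulo pinning pattern implied by this post’s examples (the function names are hypothetical):

```python
from collections import Counter


def effective_links(configured_links: int) -> int:
    # Three links have no pinning layout of their own and behave like two.
    return 2 if configured_links == 3 else configured_links


def worst_uplink_oversubscription(ports_in_use: list, configured_links: int) -> int:
    """Return N for the busiest uplink's N:1 ratio on one IOM.

    Counts how many populated 10GE mid-plane ports share each 10GE uplink,
    assuming the modulo pinning pattern implied by this post's examples.
    """
    links = effective_links(configured_links)
    per_uplink = Counter((port - 1) % links for port in ports_in_use)
    return max(per_uplink.values())


all_eight = list(range(1, 9))   # eight half-width blades
for n in (1, 2, 3, 4):
    print(f"8 blades, {n} uplink(s): {worst_uplink_oversubscription(all_eight, n)}:1")
print(f"4 blades, 4 uplinks: {worst_uplink_oversubscription([1, 2, 3, 4], 4)}:1")
```

This reproduces the 8:1 to 1:1 range; note that three uplinks land at 4:1, the same as two, because of the fallback to the two-link layout.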

For the discussion of IOM failover, we will use the example of a maximum configuration: 8 half-width blades and 4 uplinks on each redundant IOM.

Fully Configured 8 Blade UCS Chassis

In this diagram each blade is redundantly connected via 2x10GE links, one through each IOM to each FI. Both IOMs and FIs operate in an active/active fashion from a switching perspective, so each blade in this scenario has a potential bandwidth of 20GE depending on the operating system configuration. The overall blade chassis is configured with 2:1 oversubscription, as each IOM uses its maximum of 4x10GE uplinks while providing its maximum of 8x10GE mid-plane links for the 8 blades. If every blade attempted to push a sustained 20GE of throughput at the same time (a very unlikely scenario), each would receive only 10GE because of this oversubscription. Bandwidth can be finely tuned to ensure proper performance in congestion scenarios such as this one using Quality of Service (QoS) and Enhanced Transmission Selection (ETS) within the UCS system.


In the event that a link fails between the IOM and the FI, the servers pinned to that link will no longer have a path to that FI. Each affected blade still has a path to the redundant FI and will rely on SAN multi-pathing, NIC teaming, and/or UCS hardware failover to detect the failure and divert traffic to the active link.

For example, if link one on IOM A fails, blades one and five lose connectivity through Fabric A, and any traffic using that path fails over to link one on Fabric B, ensuring the blades can still send and receive data. When link one on IOM A is repaired or replaced, traffic can immediately start using the A path again.

IOM A will not automatically divert traffic from blades one and five to an operational link, nor is this possible through a manual process. The reason is that diverting the traffic of blades one and five to the remaining links would further oversubscribe those links and degrade servers that should be unaffected by the failure of link one. In a real-world data center a failed link will be replaced quickly, and the only servers affected will have been blades one and five.
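The trade-off can be put in numbers with a back-of-the-envelope model. It assumes every blade saturates every path it still has, that each uplink is shared equally (QoS/ETS weighting is not modeled), and the modulo pinning implied by this post’s examples; the "rebalanced" case is a hypothetical alternative that UCS does not offer, included only for comparison.

```python
from collections import Counter

LINK_GBPS = 10


def full_load_bandwidth(pins: dict) -> dict:
    """Per-blade Gbps when every blade saturates every path it still has.

    pins maps (fabric, blade) -> uplink number, or None if that blade has no
    path on that fabric. Each uplink is shared equally (QoS/ETS not modeled).
    """
    load = Counter((fabric, link) for (fabric, _), link in pins.items() if link is not None)
    result = {}
    for (fabric, blade), link in pins.items():
        share = LINK_GBPS / load[(fabric, link)] if link is not None else 0.0
        result[blade] = result.get(blade, 0.0) + share
    return result


def static_pins(failed: set) -> dict:
    """Four uplinks per IOM with modulo pinning; a failed uplink is NOT re-pinned."""
    pins = {}
    for fabric in ("A", "B"):
        for blade in range(1, 9):
            link = (blade - 1) % 4 + 1
            pins[(fabric, blade)] = None if (fabric, link) in failed else link
    return pins


static = static_pins(failed={("A", 1)})
# Hypothetical alternative: push blades 1 and 5 onto surviving fabric A links.
rebalanced = dict(static)
rebalanced[("A", 1)] = 2
rebalanced[("A", 5)] = 3

for label, pins in (("static", static), ("rebalanced", rebalanced)):
    print(label, {blade: round(gbps, 2) for blade, gbps in full_load_bandwidth(pins).items()})
```

Under static pinning, blades 1 and 5 drop to 5.0 Gbps and the other six stay at 10.0; the rebalanced variant leaves blades 4 and 8 at 10.0 but pulls six blades down to about 8.33. With no failures (static_pins(failed=set())) every blade gets 10.0, the 2:1 figure from the fully configured example.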

If the link cannot be repaired quickly, there is a manual process called re-acknowledgement that an administrator can perform. This process re-pins the IOM-to-FI links based on the number of active links, using the same static pinning referenced above. In the example above, servers would be re-pinned based on two active ports, because three-port configurations are not supported.
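Re-acknowledgement reapplies the same static rule to the links that are still up. A sketch, assuming the modulo pinning implied earlier; which physical links end up carrying the new pins is not stated in this post, so using the lowest-numbered survivors is purely an assumption of this example, as is the function name.

```python
def repin_after_reack(active_links: list) -> dict:
    """Uplink -> mid-plane ports after a hypothetical re-acknowledgement.

    Uses the largest supported layout (4, 2 or 1 links) that fits the active
    links. Which physical links carry the new pins is an ASSUMPTION here: the
    lowest-numbered survivors are used.
    """
    if not active_links:
        raise ValueError("no active uplinks to pin to")
    usable = max(n for n in (4, 2, 1) if n <= len(active_links))
    chosen = sorted(active_links)[:usable]
    table = {link: [] for link in chosen}
    for port in range(1, 9):
        table[chosen[(port - 1) % usable]].append(port)
    return table


print(repin_after_reack([2, 3, 4]))  # {2: [1, 3, 5, 7], 3: [2, 4, 6, 8]}
```

Every blade regains a Fabric A path, but each active link now carries four ports (4:1 instead of 2:1) and one surviving link sits unused, which is why this is a step for links that can’t be repaired quickly.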

Overall this failure method and static pinning mechanism provides very predictable bandwidth management as well as limiting the scope of impact for link failures.

Summary:

The UCS system architecture is uniquely designed to minimize management points and maximize link utilization by removing the dependence on STP internally. Because of this unique design, network failure scenarios must be clearly understood to maximize the benefits UCS provides. If properly designed, the advanced failure management tools within UCS provide increased application uptime and throughput in failure scenarios.

Joe,

Great post!

I will be posting my “Cisco UCS Networking Best Practices” presentation in a few weeks.

Thanks for the nudge 🙂

Cheers,

Brad

Brad,

Thanks for stopping by. I’m glad to hear you’ll be posting the UCS Networking Best Practices, I wouldn’t be able to do it justice and it’s an important concept.

Joe

Oh you mean NETWORK failure but I thought this was about SERVER failure, as in how a workload can move from a failed server to a good server… semantics, eh? 🙂

Damn you Steve, you’ve highlighted the fact that my mind has moved from servers and applications to networks. I need to get back to my roots 😉 I should have been more clear about the specific failover… Actually maybe that will be my next post, using Service profiles to decrease recovery time and increase DR capabilities. Thanks for stopping by the blog!

“Overall this failure method and static pinning mechanism provides very predictable bandwidth management as well as limiting the scope of impact for link failures.”

Which could be so-o-o-o easily avoided if Cisco just bothered to implement FEX Port Channel (port channel between Fabric Interconnect and IO Module). Doesn’t seem very technically challenging, so why is it not there yet?

(howling to the moon) Why, Cisco, why?

Port-channels from the IOM to the FI could be argued either way. If you were to port-channel the links between the IOM and the FI, you’d receive additional fault tolerance for the links, but you’d degrade performance for all 8 blades on a single link failure by further oversubscribing bandwidth. Leaving them as single links forces failover to the B fabric without affecting oversubscription.

Overall I think it should be a customer choice rather than hardware dictated as it is now.

“Leaving them as single links forces failover to the B fabric without affecting oversubscription.”

On _this side_. Other side (side B, whatever) will be hit by oversubscription (referring to Cisco-proposed “servers 1-4 go to A, 5-8 go to B”).

Proper implementation should allow active/active NIC teaming for all 8 blades, so failure of a single link on either IOM would degrade just 12.5% of overall bandwidth, evenly for all servers. Meaning, no more 10Gbps per server, just under 9Gbps (8.75Gbps). Rather negligible.

I’m not sure I agree with that. Active/active NIC teaming is overkill at 10GE. With the current implementation, a worst-case failure with 4 uplinks provides 5Gbps sustained to the 2 blades with a failed link and no performance hit on the other 6 blades. This can then be tuned using QoS and ETS to prioritize critical traffic. It’s really a question of whether you want all blades to be slightly affected or a select set to be moderately affected; I’m of the opinion that limiting the failure effect the way UCS does today is the better choice. I would still agree that the option to port-channel the links should be implemented, but I would recommend against using it.

FEX port channel with dual-connected servers might have one extra benefit: traffic optimization.

Take the Cisco-recommended approach (1-4 to A, 5-8 to B). More and more traffic in DCs is east-west (vMotion, clusters, etc.). So if you have an app on servers 1 and 8, traffic would be:

server 1 — IOM A — FI A — some upper switch, unknown latency — FI B — IOM B — server 8

With fex port channel and active/active NIC teaming all servers could speak over a single hop (either FI A or B).

Agree with “Cisco, give us a choice, dammit!”

(and “allow local switching on FEX!” 🙂)

Do you not see the limitation of factory-defined, non-configurable pinning of the server ports? Sure, it offers predictable failover scenarios; however, the decision of how to use these ports should be in the hands of the DC designer/architect, not the vendor.

Further, the requirement to manually re-ack a failed link is a severe limitation. One could just as easily state that there is no automated fault tolerance between the FEX and the FI. This is what I have proven with my testing, and it could limit the offerings that can be built on the product.

Having the ability to establish a 4-port bundle and then define QoS policies would achieve predictable behavior while increasing availability. In essence, greater control of failure scenarios and associated recovery actions needs to be made available. The underlying NXOS is capable, so why has it not been implemented?

Further, it appears one has not considered the impact to a server in the event one FI is down and one link to the other FI is down. This would result in total loss of connectivity for 25% of the servers.

In all, I think UCS is a great product and the concept of taking the fabric out of the chassis is one of the most truly innovative moves by Cisco in years. I hope to see these features added to future releases.

Anonymous,

Of course it’s your option but I definitely prefer a name (even first) and some disclosure as to the company you work for along with comments especially on specific product posts.

That being said I definitely agree with you that it should be the architect/designers option how failover is handled and port-channels from the IOMs to the FI should be implemented. I also assume we will see this in the near future. As you said NXOS is capable and we can assume the IOM hardware is also as the Nexus 2000 was based on it.

As for the failure scenario you describe in the second to last paragraph in which the same link breaks between both IOM/FI that is a stretch of a scenario. The likelihood of that happening before an admin replaces the cable is very slim. If it were to occur you are correct you would lose connectivity to a set of servers depending on chassis population and number of links.

Overall I agree UCS is a great product and it continues to gain features and functionality with each release. Hopefully some of these make it in the near future.

Thanks for the comment and for reading.

@Drunken Pole, I definitely agree with that if you were going to follow the blades 1-4 > A and Blades 5-8 > B guideline you mentioned. I never recommend that guideline and instead recommend load-balancing primary traffic path based on server-to-server (east/west) traffic using templates.

Blindly load-balancing workloads based on slot number within UCS would be a very bad thing due to the traffic pattern you mention.

I definitely disagree with local-switching on FEX, that puts the requirement for STP back in the system and removes the consistent latency and policy enforcement, not good. Additionally as fast as the FI and IOM switch overall the performance gain would be minimal. Total switching latency blade-to-blade in UCS is comparable to a standard ToR switch.

Joe

Drunken Pole-

There is no such: “Cisco-recommended approach (1-4 to A, 5-8 to B)”.

Cisco does not have a blanket one-size-fits-all recommendation for all implementations as you are suggesting.

Cisco UCS gives the architect the flexibility to define server vNIC placement application by application, profile by profile, based on the individual needs of the workloads.

Cheers,

Brad

Well, it was a very informative one.

But I still have a confusion.

How many 10 Gig uplink FCoE ports are available on the mezzanine card?

If it is two and they connect to two IOMs,

how can this mezzanine card provide 20 Gig when only one IOM module can be used at a time? If we can use the second IOM module, how?

According to my understanding, it can provide 10 Gig only through one IOM.

The other 10 Gig to the second IOM will serve as a backup.

Nav,

Within UCS, both IOMs are active and transmitting data regardless of hardware generation. On Gen 1 hardware the VIC has two active 10GE paths. With Gen 2 hardware each VIC can have up to four 10GE paths in each direction, for a total of 8.

It is a common misperception that the B fabric is for failover only. This is not true; both paths actively switch data. OS/application configuration will determine how the paths are used.

Joe
